Intsurfing builds scalable, high-performance ETL solutions for complex data needs. With over 210 projects delivered, our expertise spans data extraction, transformation, loading, and optimization for cloud environments.

We offer two flexible collaboration models: outsourcing for businesses looking for a fully managed ETL solution and managed teams for those who need a dedicated group of experts working as an extension of their in-house staff. Learn more about our collaboration models here.

In this article, we’ll explain why Intsurfing is the best ETL service provider. You’ll learn about:

- How we handle ETL pipeline development from scratch

- Things we do to optimize existing ETL systems

- What tools, frameworks, and programming languages we use

Let’s dive in.

Building an ETL Pipeline Project from Scratch with Intsurfing

Building an ETL pipeline from the ground up requires expertise at every stage—data ingestion, transformation, orchestration, and deployment. The question is: how involved do you want to be?

With Intsurfing, you have two options:

- Need a fully managed solution? We take care of everything from architecture to deployment, so you don’t have to.

- Want to stay involved? Our managed team model lets you collaborate with our experts while keeping strategic control.

At Intsurfing, we design ETL pipelines that match your exact needs—whether that means integrating with a legacy system, pulling from scattered data sources, or handling complex transformations.

We work with cloud infrastructures (mainly AWS, but we also have experience with GCP and Azure) and support a wide range of databases and APIs. Need real-time data streaming, advanced transformations, or AI-powered data parsing? We design event-driven, batch, or hybrid ETL pipelines that align with your business logic.

On top of that, we build for the long run. Our ETL development company:

- Monitors performance and optimizes processing time.

- Adjusts workflows as your data volume grows.

- Refines cloud resource usage to keep costs under control.

Proven Success: India Voter List ETL Project

When Apriori Data approached us, they wanted to compile a voter ID list from India’s electoral authorities. This meant gathering data from every state and administrative division, cleaning and standardizing it, transliterating it into Roman characters, and cross-referencing it with information from India Post. The ultimate goal was to create a unified file that could be updated annually.

What was particularly interesting about the India Voter List project?

- Processed over one billion records

- Pulled data from 36 sources

- Spanned 22 official languages

- Many records were handwritten in the native language

- Combined linguistic expertise and machine learning algorithms

- OCR tools didn’t recognize Punjabi

We built a centralized digital voter ID database containing over one billion records. The data was available in both native languages and transliterated English, encompassing 63 fully verified and normalized data fields, including voter names, addresses, and polling station information.
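
To give a sense of what field normalization can involve, here is a minimal Python sketch. It is an illustration only, not the project's actual code: the function names and the sample record are hypothetical. It shows one common step, bringing text to a single Unicode form (NFC) and collapsing stray whitespace, so that the same name typed in two different ways compares as equal.

```python
import unicodedata


def normalize_field(value: str) -> str:
    """Return a canonical form: NFC-normalized, trimmed, single-spaced."""
    composed = unicodedata.normalize("NFC", value)
    # str.split() with no arguments splits on any run of Unicode whitespace.
    return " ".join(composed.split())


def normalize_record(record: dict) -> dict:
    """Normalize every string field; leave other values untouched."""
    return {
        key: normalize_field(value) if isinstance(value, str) else value
        for key, value in record.items()
    }


# Hypothetical sample: the same Devanagari letter entered two ways,
# as one precomposed code point and as base letter plus a nukta sign.
precomposed = "\u0958"
decomposed = "\u0915\u093c"

record = {"name": "  " + precomposed + "\u092e\u0930   \u0916\u093e\u0928 ", "age": 42}
clean = normalize_record(record)
```

After normalization, both spellings of the letter map to the same code point sequence, which matters when records from different sources are cross-referenced by name.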

Performance Optimization of Existing ETL Systems

ETL pipelines slow down over time. More data. More complexity. More headaches.

At Intsurfing, we optimize the performance of cloud data pipelines by using Scala, Java, C#, .NET, and Apache Spark.

Integration? No problem. Our ETL solutions connect with SQL and NoSQL databases, cloud storage, and third-party APIs. We build pipelines that pull, transform, and load data exactly where you need it, whether that’s a data warehouse, BI tool, or real-time analytics dashboard.

Migrating to a new platform? That can get messy fast. We make it structured and predictable—handling compatibility checks, performance tuning, and risk mitigation so you don’t have to.

Client Success Story: Address Parsing System Revamp

A prime example of our optimization expertise is our work with a client facing challenges in processing large volumes of address data. Their existing system, built on MS SQL Server and SSIS, struggled with high traffic and large datasets.

We reworked their architecture. Switched to Redis for caching. Optimized algorithms. Built a deduplication system that cut redundant processing.

These ETL project improvements resulted in a 50% increase in processing speed and reduced traffic load.
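
The idea behind caching plus deduplication can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the client's system: a plain dictionary stands in for Redis, and `normalize_key`, `parse_addresses`, and `slow_parse` are hypothetical names. The point is that addresses differing only in case or spacing share one cache key, so the expensive parser runs once per distinct address.

```python
from typing import Callable, Dict, Iterable, List


def normalize_key(address: str) -> str:
    """Collapse case and whitespace so trivially different spellings share a key."""
    return " ".join(address.lower().split())


def parse_addresses(
    addresses: Iterable[str],
    parse: Callable[[str], dict],
    cache: Dict[str, dict],
) -> List[dict]:
    """Parse each address, calling the expensive parser once per distinct key."""
    results = []
    for raw in addresses:
        key = normalize_key(raw)
        if key not in cache:        # cache miss: do the expensive work once
            cache[key] = parse(key)
        results.append(cache[key])  # otherwise reuse the earlier result
    return results


# Hypothetical stand-in for an expensive parser; it counts how often it runs.
calls = {"count": 0}


def slow_parse(address: str) -> dict:
    calls["count"] += 1
    number, _, street = address.partition(" ")
    return {"number": number, "street": street}


cache: Dict[str, dict] = {}  # assumption: a Redis client would take this role
batch = ["12 Main St", "12  main st", "7 Oak Ave", "12 MAIN ST"]
parsed = parse_addresses(batch, slow_parse, cache)
```

Here four input rows trigger only two parser calls. With a shared cache such as Redis, the same saving would carry across workers and runs rather than a single process.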

Intsurfing ETL Company Technical Expertise and Technology Stack

We work with Apache Spark, Airflow, AWS Glue, Databricks, Kafka, and Flink to handle both batch and real-time data processing. Our team knows how to set up, optimize, and scale workflows to handle any data challenge.

As an ETL vendor, we build and optimize pipelines for AWS, Google Cloud, and Azure.

- AWS: Leveraging AWS Glue, we create serverless ETL processes that facilitate data discovery, cataloging, and transformation. This approach allows for scalable and cost-effective data integration.

- Google Cloud: We utilize Google Cloud Dataflow and BigQuery to handle both batch and stream data processing. This enables real-time analytics and insights, supporting complex data transformations and integrations within the Google Cloud ecosystem.

- Azure: Our team employs Azure Data Factory to orchestrate data movement and transformation across various sources. This service provides a robust platform for creating, scheduling, and managing data pipelines, ensuring reliable data flow within your Azure infrastructure.

Our team is proficient in Scala, Python, C#, Java, and .NET. This strong programming foundation allows us to address complex data challenges and deliver solutions that align with your business objectives.

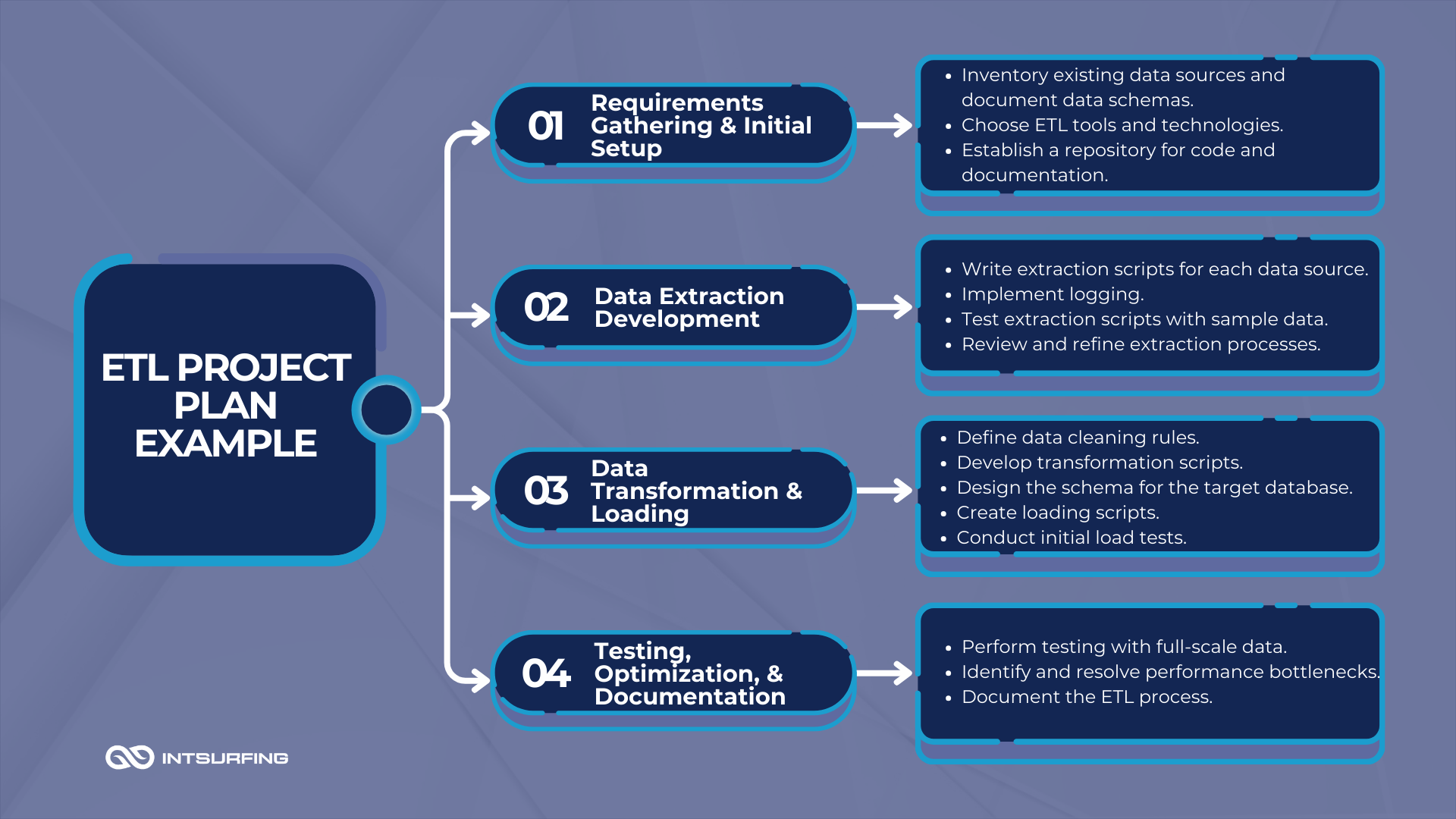

Our ETL Project Development Life Cycle

At Intsurfing, we follow a structured approach to make sure every ETL project is optimized and yields results. Here’s how we do it:

- Discovery & Requirements Analysis. What data do you work with? How much of it? What transformations are required? We map out the entire data flow, identifying potential bottlenecks, security risks, and compliance requirements.

- Solution Architecture & Tech Stack Selection. We select the right combination of Apache Spark, Airflow, AWS Glue, Databricks, or Flink, depending on scalability, latency, and integration needs. We also define the cloud strategy, whether it’s AWS, Google Cloud, or Azure, and determine the best data storage options—from data lakes to enterprise-grade warehouses like Redshift or BigQuery.

- Pipeline Development & Customization. This is where we build. Our engineers develop the entire ETL project architecture. Custom connectors, automation scripts, and AI-driven data transformations are all built to fit your exact business logic.

- Testing & Performance Optimization. We stress-test the system with large datasets, identifying weaknesses before they impact operations. We fine-tune performance using auto-scaling, caching, and parallel processing to handle high-throughput workloads without delays.

- Deployment & Ongoing Support. We integrate the pipeline into your CI/CD workflows, setting up monitoring tools to track performance. Our team provides ongoing support and ETL project management, handling maintenance, troubleshooting, and scaling as your data volumes grow.
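
To make the build and optimization stages more concrete, here is a minimal Python sketch of an extract, transform, load flow that processes records in batches and transforms each batch in parallel. Everything in it is an assumption for illustration: the record shape, the function names, and the in-memory list standing in for a warehouse. A production pipeline would typically rely on tools such as Spark or Airflow rather than a thread pool.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Iterable, Iterator, List


def extract() -> Iterator[dict]:
    """Yield raw records; a real pipeline would read from a database or API."""
    for i in range(10):
        yield {"id": i, "name": f"  user {i} "}


def transform(record: dict) -> dict:
    """Clean one record; records are independent, so this can run in parallel."""
    return {"id": record["id"], "name": record["name"].strip().title()}


def chunked(records: Iterable[dict], size: int) -> Iterator[List[dict]]:
    """Group records into batches of at most `size` items."""
    batch: List[dict] = []
    for record in records:
        batch.append(record)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:  # emit the final, possibly smaller, batch
        yield batch


def load(batch: List[dict], target: List[dict]) -> None:
    """Append a transformed batch to the target store (a list in this sketch)."""
    target.extend(batch)


def run_pipeline(batch_size: int = 4, workers: int = 4) -> List[dict]:
    target: List[dict] = []
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for batch in chunked(extract(), batch_size):
            # pool.map returns results in input order, so batches stay ordered.
            load(list(pool.map(transform, batch)), target)
    return target


warehouse = run_pipeline()
```

Batching keeps memory use bounded, and because each record is transformed independently, the worker count can be raised or lowered without changing the result.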

Conclusion

Choosing Intsurfing as your ETL partner means you’re investing in a team that cares about your data as much as you do.

Contact us today, and let’s discuss what we can do to build or revamp your ETL flow.