Back in the early 2000s, Martin Odersky—who had already contributed to Java’s generics—wanted to create something to address the shortcomings of Java. So he built Scala.

Around the mid-2010s, it surged in popularity—thanks in large part to Apache Spark, which chose Scala as its native language. Twitter adopted it. So did LinkedIn. Startups loved it for its conciseness. Enterprises liked that it ran on the JVM. It became the language of choice for scalable systems, data engineering, and backend services.

But today, just 2.6% of developers say they’ve worked extensively with Scala in the past year, according to Stack Overflow.

Nevertheless, the Scala programming ecosystem has matured. Its core features have stabilized. Its tooling has caught up. And despite its lower profile, it’s still widely used in big data environments.

So, what exactly is Scala? Why does it keep showing up in large-scale data projects? And is it still worth your attention? That’s what we’re diving into.

What Is the Scala Programming Language?

Scala is a general-purpose programming language that combines object-oriented and functional programming. This means you can use classes, objects, and inheritance when it makes sense—or go fully functional with immutability, pure functions, and higher-order constructs.

So, why was it created?

Martin Odersky developed the Scala language to fix Java’s shortcomings: verbosity, a lack of modern abstractions, and weak support for functional patterns. Scala allows developers to write expressive, concise, and scalable code without giving up performance or compatibility with the broader Java ecosystem.

Here’s what makes Scala stand out:

- First-class functions and immutable collections

- Pattern matching for concise control structures

- Advanced type system with type inference, generics, and variance

- Traits for modular and reusable code

- Built-in support for asynchronous and concurrent programming

- Direct access to all Java classes and libraries

In short, Scala gives you the flexibility of a scripting language and the robustness of a compiled one—ideal for everything from quick prototyping to building large-scale distributed systems.
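To make the mix of object-oriented and functional style concrete, here is a minimal sketch. The `Shape` hierarchy and the `totalBy` helper are made-up names for illustration, not part of any library: a trait and case classes carry the object-oriented side, while pattern matching and a higher-order function carry the functional side.

```scala
// A trait defines reusable behaviour; case classes are immutable data carriers.
trait Describable {
  def describe: String
}

sealed trait Shape extends Describable
final case class Circle(radius: Double) extends Shape {
  def describe: String = s"circle with radius $radius"
}
final case class Rectangle(width: Double, height: Double) extends Shape {
  def describe: String = s"rectangle $width x $height"
}

// Pattern matching deconstructs each case class in a single expression.
def area(shape: Shape): Double = shape match {
  case Circle(r)       => math.Pi * r * r
  case Rectangle(w, h) => w * h
}

// A higher-order function: it takes another function as an argument.
def totalBy(shapes: List[Shape])(measure: Shape => Double): Double =
  shapes.map(measure).sum

val shapes: List[Shape] = List(Circle(1.0), Rectangle(2.0, 3.0))
val totalArea: Double = totalBy(shapes)(area) // the method `area` is passed as a function value
val descriptions: List[String] = shapes.map(_.describe)
```

Because `Shape` is sealed, the compiler can warn when a new shape is added but `area` is not updated, which is one place where the type system and pattern matching work together.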

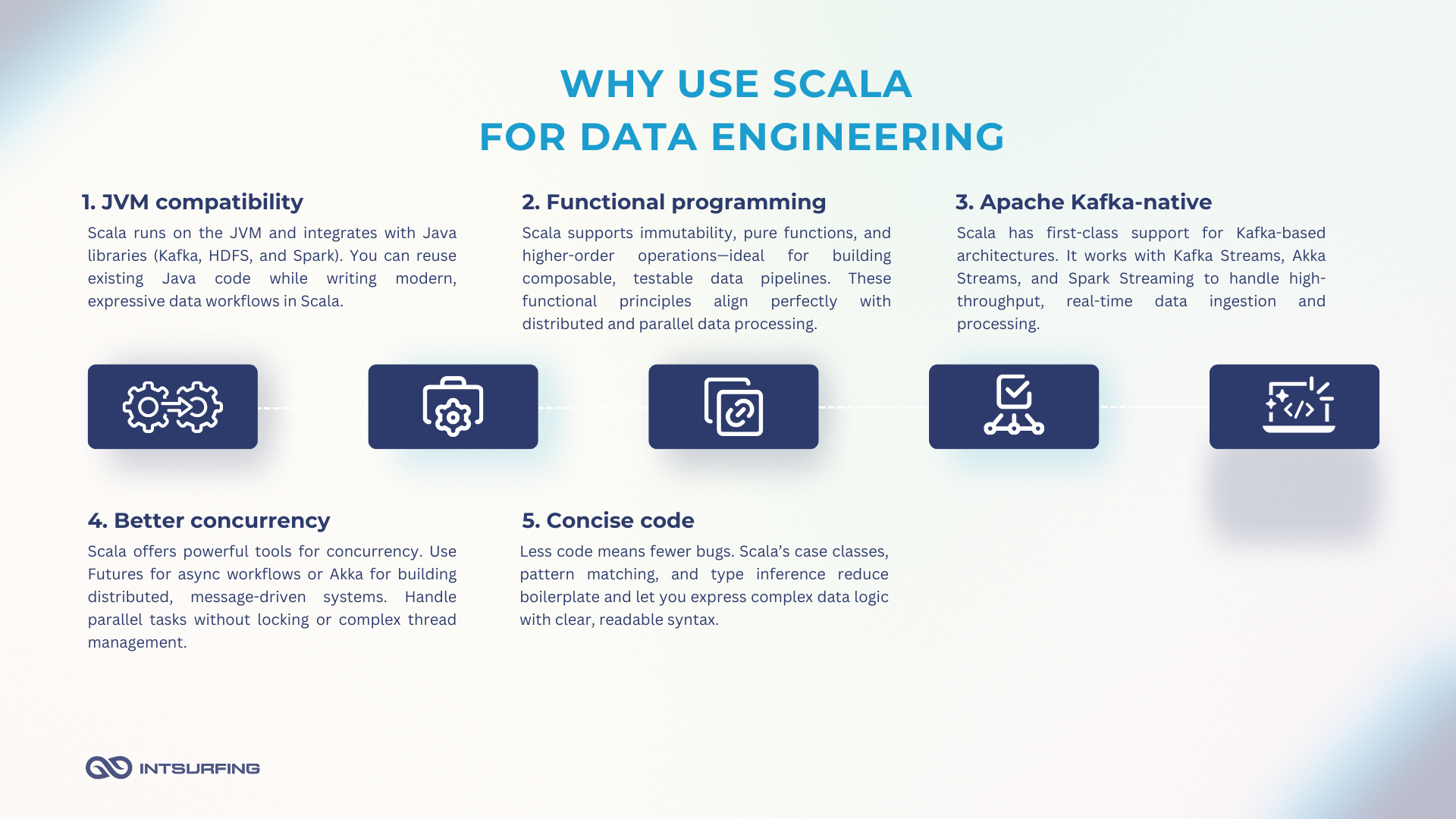

Why Use Scala for Big Data?

When you’re dealing with massive datasets, complex transformations, and distributed environments, the tools you choose matter. You need something that can handle scale, support clean and reliable code, and integrate well with the rest of your stack. Scala is built for that. So, why use Scala for big data?

JVM Compatibility

Scala is fully interoperable with Java. That means you can reuse any Java library or framework — including those you already rely on in production — while writing modern, expressive code in Scala.

The JVM ecosystem gives you access to:

- Apache Kafka

- Hadoop and HDFS

- Cassandra, HBase, Elasticsearch

- Log4j / SLF4J

- Spring, Guice

Scala can call Java code directly, and vice versa. That’s critical when you’re working in an enterprise setting where many components—Kafka clients, JDBC drivers, Hadoop APIs, or custom internal libraries—are written in Java.
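As a rough illustration of that interop, the sketch below uses only JDK classes plus the standard `scala.jdk.CollectionConverters` (available from Scala 2.13). The list contents are arbitrary sample values; the point is that Java types are created, converted, and called with no glue code.

```scala
import java.time.LocalDate
import java.util.{ArrayList => JArrayList, Arrays => JArrays}
import scala.jdk.CollectionConverters._

// Build a plain Java collection with Java's own constructors and helpers.
val javaList: JArrayList[String] =
  new JArrayList[String](JArrays.asList("kafka", "hadoop", "cassandra"))

// Wrap it as a Scala collection to use map/filter, then hand it back to Java code.
val upper: List[String] = javaList.asScala.toList.map(_.toUpperCase)
val backToJava: java.util.List[String] = upper.asJava

// Java's time API is callable directly, with no wrapper layer.
val nextDay: LocalDate = LocalDate.of(2024, 1, 31).plusDays(1)
```

The same pattern applies to larger Java libraries: you import the class, call its methods, and convert collections at the boundary when you want Scala's collection operations.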

Functional Programming for Data Workflows

One of the biggest reasons Scala fits naturally in data engineering is its strong support for functional programming (FP). FP encourages a declarative style where you define what should be done, not how. This leads to predictable, composable code — ideal for transforming, aggregating, and filtering data.

In contrast to imperative code, where you mutate state step by step, functional Scala promotes immutability and pure functions — making data pipelines easier to test, debug, and parallelize.

    case class Order(id: Int, amount: Double, status: String)

    val rawOrders = List(
      Order(1, 100.0, "processed"),
      Order(2, 200.5, "failed"),
      Order(3, 150.0, "processed")
    )

    val totalProcessed = rawOrders
      .filter(_.status == "processed") // filter stage
      .map(_.amount)                   // transform stage
      .reduce(_ + _)                   // aggregation stage

Here’s what makes this pipeline functional:

- No variables are mutated.

- Every step returns a new collection.

- Each transformation is a pure function: it depends only on its input and produces no side effects.

This is easy to reason about, and it scales well — Spark, for instance, uses the same principles.

For comparison, the imperative Java equivalent mutates a running total step by step:

    double total = 0.0;
    for (Order o : orders) {
        if (o.getStatus().equals("processed")) {
            total += o.getAmount();
        }
    }

In functional Scala, logic becomes modular and chainable. That’s powerful when your pipeline grows. You can compose steps, abstract logic, and build reusable functions.

Let’s say you want to apply currency conversion and tax adjustments — with FP, you just compose transformations:

Scala:

    val convertToEUR: Double => Double = _ * 0.92
    val applyTax: Double => Double = _ * 1.2

    val adjustedTotal = rawOrders
      .filter(_.status == "processed")
      .map(_.amount)
      .map(convertToEUR andThen applyTax)
      .reduce(_ + _)

All of this translates naturally to distributed systems, where filter, map, and reduce transformations are executed across clusters — thanks to Scala’s functional roots.
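To see why this style distributes so easily, here is a small sketch that only simulates partitions with plain Scala collections. It does not use Spark, and the "worker" and "driver" roles are just labels for this illustration. Each partition runs the same filter/map/sum, and the partial results are combined at the end.

```scala
final case class Order(id: Int, amount: Double, status: String)

val orders: List[Order] = List(
  Order(1, 100.0, "processed"),
  Order(2, 200.5, "failed"),
  Order(3, 150.0, "processed"),
  Order(4, 50.0, "processed")
)

// Pretend each group of two orders lives on a different worker node.
val partitions: List[List[Order]] = orders.grouped(2).toList

// Each "worker" runs the same pure pipeline over its own slice.
def partialTotal(partition: List[Order]): Double =
  partition.filter(_.status == "processed").map(_.amount).sum

// The "driver" combines the partial results. Because the steps are pure and
// addition can be regrouped (up to floating-point rounding), the answer
// matches the single-machine computation.
val distributedTotal: Double = partitions.map(partialTotal).sum
val localTotal: Double = partialTotal(orders)
```

No step depends on shared mutable state, so the slices can be processed in any order or in parallel without changing the result.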

First-Class Support for Apache Spark

Apache Spark is written in Scala. That alone gives Scala a deep, native integration advantage. If you’re building data pipelines with Spark, Scala gives you full access to the engine’s core capabilities, low-level APIs, and all the latest features, namely:

- Custom partitioners and RDD lineage control

- Efficient serialization using case classes + Encoders

- Direct use of Spark internals (e.g. mapPartitions, broadcast, accumulators, metrics)

Spark also has Python (PySpark) and Java APIs, but they sit on top of the Scala core: PySpark talks to the JVM through a bridge (Py4J), and the Java API largely wraps the Scala one.

Better Concurrency

Scala gives you advanced yet approachable concurrency tools. Two stand out: Futures, which ship with the standard library, and Akka, a separate toolkit.

Future is the standard library’s abstraction for asynchronous computation. It runs a task on a thread pool (an ExecutionContext) and hands back a placeholder for the result, so the calling thread isn’t blocked while the work completes. That’s crucial when you’re building data services, API endpoints, or I/O-heavy ETL components.
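Here is a minimal sketch of composing two Futures. `OrderStats`, `fetchOrderCount`, `fetchFailedCount`, and the numbers they return are hypothetical stand-ins for real database or API calls; only `Future`, `ExecutionContext`, and `Await` come from the standard library.

```scala
import scala.concurrent.{Await, ExecutionContext, Future}
import scala.concurrent.duration._

object OrderStats {
  // Hypothetical lookups; a real service would call a database or an API here.
  def fetchOrderCount()(implicit ec: ExecutionContext): Future[Int] = Future(42)
  def fetchFailedCount()(implicit ec: ExecutionContext): Future[Int] = Future(2)

  def failureRate()(implicit ec: ExecutionContext): Future[Double] = {
    // Start both futures first so they can run concurrently.
    val totalF  = fetchOrderCount()
    val failedF = fetchFailedCount()
    // The for-comprehension combines the results without blocking the caller.
    for {
      total  <- totalF
      failed <- failedF
    } yield failed.toDouble / total
  }
}

// Blocking with Await is reserved for the edge of a program, such as a test or a main method.
def failureRateNow(): Double = {
  import scala.concurrent.ExecutionContext.Implicits.global
  Await.result(OrderStats.failureRate(), 5.seconds)
}
```

Creating both futures before the for-comprehension matters: if they were created inside it, the second lookup would only start after the first one finished.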

Akka is a toolkit for building highly concurrent, distributed, and fault-tolerant systems using the actor model. In Akka:

- Each actor is a lightweight unit of computation.

- Actors process messages one at a time, making state management safe.

- You can spawn millions of actors, each representing a stream, task, sensor, or user.

This model is ideal for building data ingestion systems, streaming applications, or job schedulers.
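The sketch below is not Akka and does not use its API. It is a deliberately tiny stand-in, built on a single-threaded executor from the JDK, that illustrates only the "one message at a time" rule from the list above. `MailboxSketch`, `CounterActor`, `tell`, and the message types are names invented for this example.

```scala
import java.util.concurrent.{Executors, TimeUnit}

// NOT Akka's API: a hypothetical stand-in that only illustrates the
// "one message at a time" rule with a single-threaded mailbox.
object MailboxSketch {
  sealed trait Message
  final case class Add(n: Int) extends Message
  case object Reset extends Message

  final class CounterActor {
    private val mailbox = Executors.newSingleThreadExecutor()
    // Written only by the mailbox thread; @volatile so other threads see the final value.
    @volatile private var total = 0

    // Fire-and-forget send: messages are queued and handled sequentially.
    def tell(message: Message): Unit =
      mailbox.execute(new Runnable {
        def run(): Unit = message match {
          case Add(n) => total += n
          case Reset  => total = 0
        }
      })

    // Drain the mailbox, stop its thread, and return the final state.
    def stopAndGet(): Int = {
      mailbox.shutdown()
      mailbox.awaitTermination(5, TimeUnit.SECONDS)
      total
    }
  }

  // Four sender threads deliver 1,000 messages each to the same actor.
  def demo(): Int = {
    val actor = new CounterActor
    val senders = (1 to 4).map { _ =>
      new Thread(new Runnable {
        def run(): Unit = (1 to 1000).foreach(_ => actor.tell(Add(1)))
      })
    }
    senders.foreach(_.start())
    senders.foreach(_.join())
    actor.stopAndGet()
  }
}
```

Even though four threads send concurrently, the counter needs no locks because only the mailbox thread ever touches it. A real actor toolkit adds supervision, routing, and remoting on top of this basic idea.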

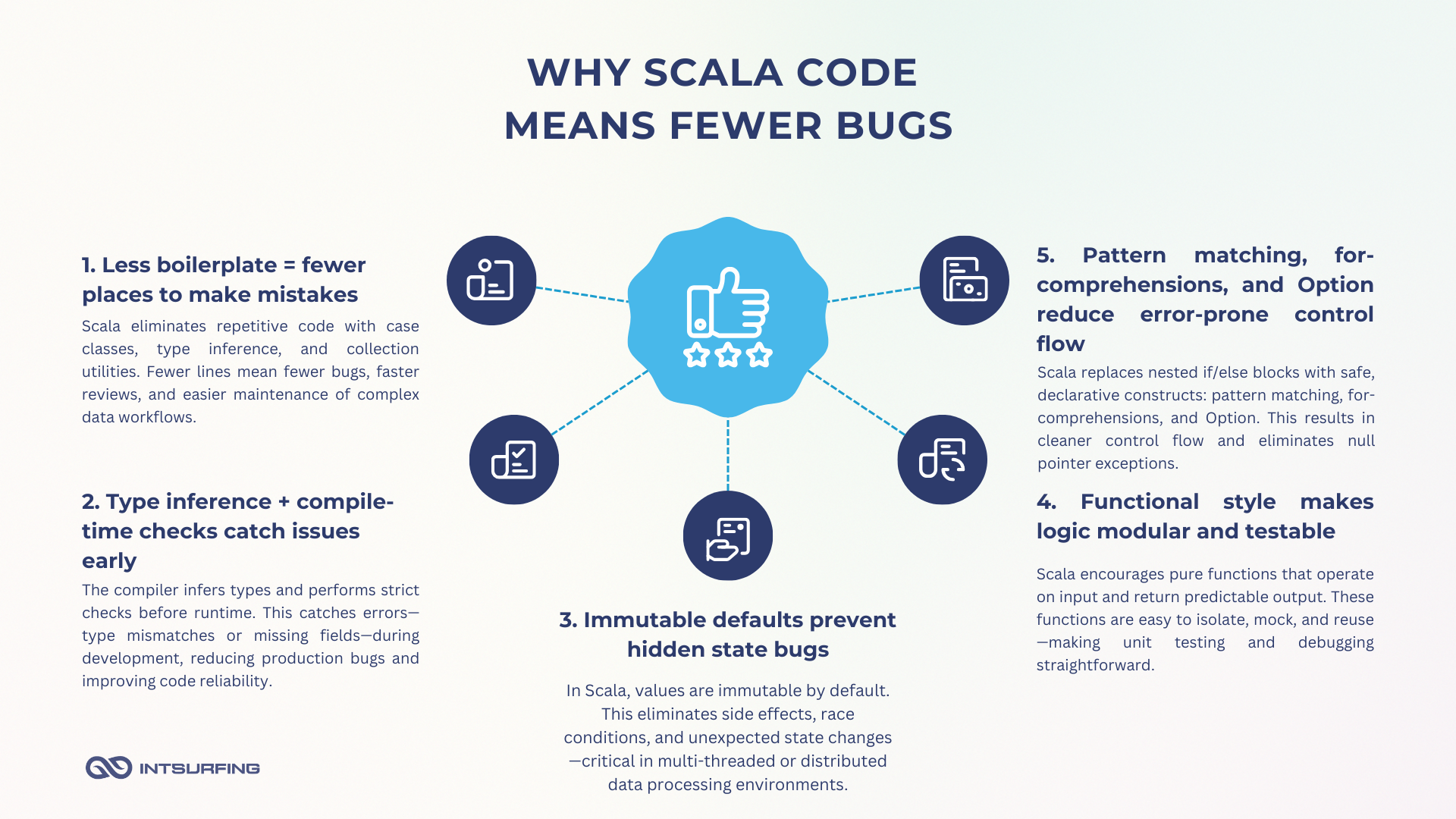

Concise Code, Fewer Bugs

The less code you write, the less you have to debug, maintain, or refactor. Scala gives you a powerful type system, modern syntax, and functional constructs — all designed to reduce boilerplate and make logic easier to follow.

So, you ship cleaner data pipelines faster — with code that’s easier to test, extend, and reason about.

Less Code to Say More

Scala lets you write less code without losing clarity — in fact, it often improves it. Data transformations, conditional logic, and aggregations become more readable because the language is designed to express intent directly.

You’re not caught up in managing state, handling edge cases with verbose control structures, or repeating patterns over and over. Instead, Scala encourages a declarative style where you describe what you want to happen, not how to do it step by step.

That’s a huge advantage when building pipelines that need to stay maintainable over time. You focus on business logic, while the structure of the language helps eliminate boilerplate. So, you get code that’s shorter, easier to reason about, and less prone to bugs — especially when the system grows or gets more complex.

Strong Static Typing + Type Inference

Scala’s static type system helps you catch bugs before runtime, such as missing fields, mismatched types, or invalid transformations. At the same time, type inference keeps the syntax clean.

You don’t need to declare everything explicitly.

Scala:

    val orders: List[Order] = ...
    val total = orders.map(_.amount).sum // total is inferred as Double
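A slightly fuller sketch shows both halves at once. It assumes the order status is modeled as a small sealed type (`Status`, `Processed`, `Failed` are names chosen for this example) rather than a raw string, so the compiler can check every place a status is handled.

```scala
sealed trait Status
case object Processed extends Status
case object Failed extends Status

final case class Order(id: Int, amount: Double, status: Status)

// No type annotation needed: this is inferred as List[Order].
val orders = List(
  Order(1, 100.0, Processed),
  Order(2, 200.5, Failed),
  Order(3, 150.0, Processed)
)

// Inferred as Map[Status, Double].
val amountByStatus = orders
  .groupBy(_.status)
  .map { case (status, group) => status -> group.map(_.amount).sum }

// Status is sealed, so the compiler warns if a case is missing from this match.
def label(status: Status): String = status match {
  case Processed => "ok"
  case Failed    => "needs retry"
}

// These lines would be rejected at compile time:
//   Order(4, "ten", Processed)   // a String is not a Double
//   orders.map(_.ammount)        // Order has no member named ammount
```

A typo in a field name or a wrong value type is reported by the compiler instead of surfacing hours into a job.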

Safer Defaults: Immutability and Pure Functions

Scala encourages immutable data and pure functions — the foundation of predictable, side-effect-free code. That’s critical in data pipelines where mutable state leads to race conditions, hidden bugs, or incorrect results.

Scala:

    def normalize(amount: Double): Double = amount / 100

It always returns the same output for the same input. No surprises. Now apply that idea across your whole pipeline, and you get safer systems by default.

Expressive Tools for Common Patterns

Scala turns patterns you hit daily — transformations, filtering, optional values, or fallbacks — into one-liners you can trust.

Optional value? Use Option:

Scala:

    val maybeUser: Option[User] = findUser("id-123")
    val name = maybeUser.map(_.name).getOrElse("unknown")

No nulls, no NullPointerException, and no verbose if (user != null) blocks.
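For a version you can run end to end, the sketch below fills in the missing pieces with made-up definitions: `User`, `Address`, the in-memory `users` map, and `findUser` are all assumptions for illustration. It also shows how several optional steps chain together.

```scala
// Hypothetical types and data, introduced only for illustration.
final case class Address(city: Option[String])
final case class User(id: String, name: String, address: Option[Address])

val users: Map[String, User] = Map(
  "id-123" -> User("id-123", "Ada", Some(Address(Some("London")))),
  "id-456" -> User("id-456", "Linus", None)
)

// Map#get already returns an Option, so a missing key is a value, not an exception.
def findUser(id: String): Option[User] = users.get(id)

// Each step may be absent; the for-comprehension stops at the first None.
def cityOf(id: String): String = {
  val maybeCity = for {
    user    <- findUser(id)
    address <- user.address
    c       <- address.city
  } yield c
  maybeCity.getOrElse("unknown")
}
```

An unknown id, a user without an address, and an address without a city all fall through to the same default, with no nested null checks.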

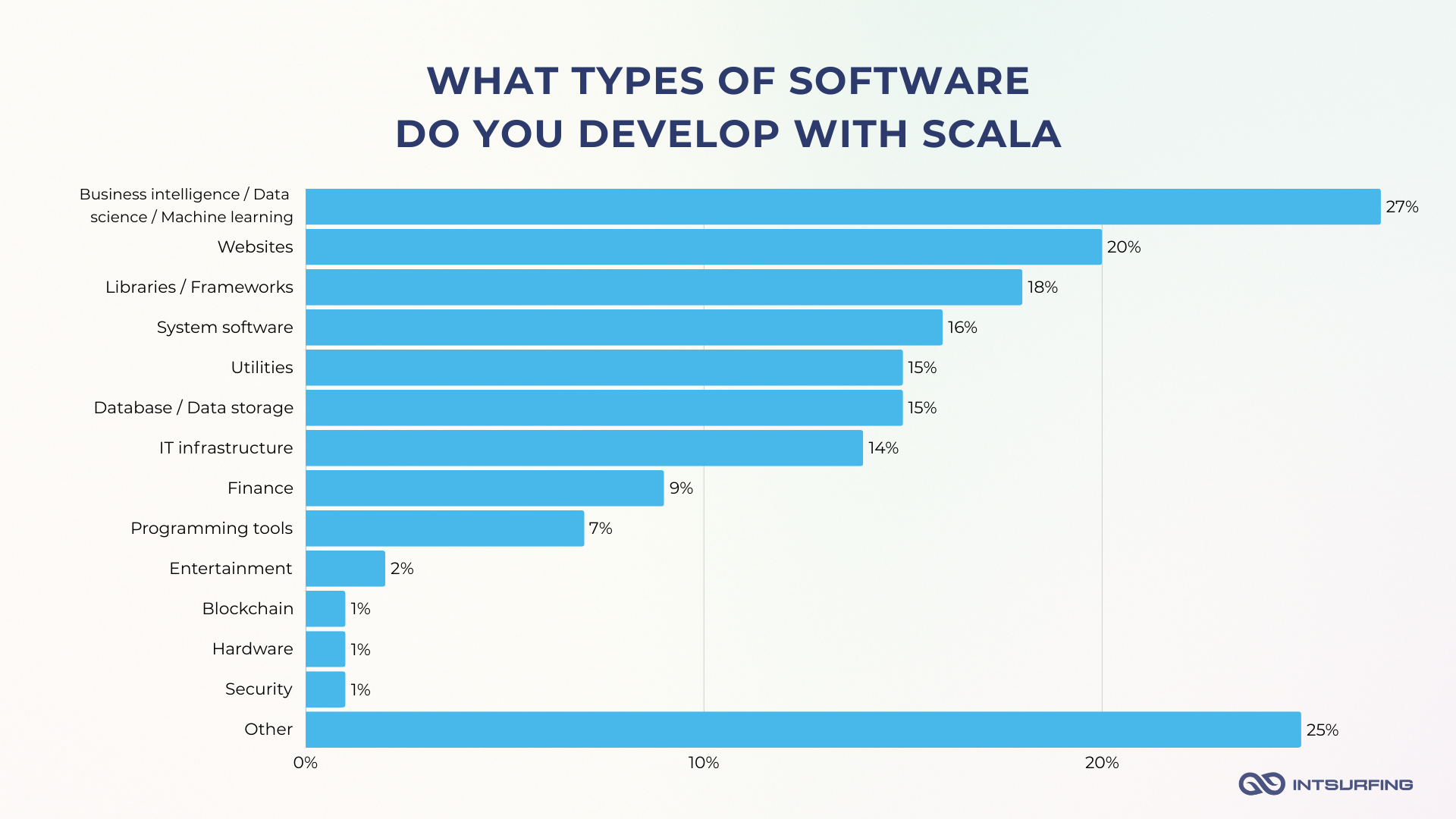

What Is the Scala Programming Language Used for in Big Data?

Scala is a core part of many big data tools and pipelines. According to JetBrains’ Developer Ecosystem report, 27% of Scala developers use it to build business intelligence, data science, or machine learning products. That’s more than those using it for websites (20%) or libraries and frameworks (18%).

And it makes sense. Scala’s design lines up perfectly with the demands of big data systems. It handles scale without turning code into a mess. It lets you express complex logic with fewer moving parts. And it runs on the JVM.

Below, we break down what Scala is used for in data engineering.

Building Data Pipelines with Apache Spark

We’ve already covered why Scala and Spark fit together. If you write your Spark jobs in Scala, you get:

- First access to all new APIs

- Strong compile-time type safety

- Often better performance than PySpark, especially for UDF-heavy or RDD-based jobs, where PySpark pays to serialize data between the JVM and Python workers

So, in fact, Scala gives you native access to Spark’s full capabilities.

Streaming Data Processing

Scala is heavily used in real-time systems. With Spark Structured Streaming, Apache Flink, and Akka Streams, you can process data continuously from Kafka, IoT devices, or user events.

Because Scala supports non-blocking, event-driven programming through standard-library Futures and libraries such as Akka, it’s a great fit for building fast, reactive streaming pipelines.

Working with Distributed Systems

Scala is a strong choice when you’re working with distributed systems — and that’s pretty much every big data setup today. You’ve got data coming in from dozens of sources, flowing across clusters, written and read by different services, often in real time. Scala helps you handle that complexity.

We’ve seen this play out in practice. We worked on a Scala project where Spark jobs were crashing due to massive data skew and poor indexing. The search engine was slow, data was poorly partitioned, and performance just wasn’t acceptable. We used Scala to rebuild key parts of the data processing layer. We applied better indexing and smarter partitioning, also written in Scala, and cut search latency from multiple seconds to near real time. That project alone reduced processing time by over 50%.

So if you’re working with distributed components — ingestion pipelines, streaming apps, data APIs, or massive storage backends — Scala gives you the right tools to handle that without losing your mind. It’s built for this.

Creating Microservices and Data APIs

Big data platforms usually need APIs to expose processed data to other teams or applications. Scala, especially when used with Akka HTTP, Play Framework, or http4s, is great for building:

- Lightweight microservices

- Data-serving REST APIs

- Async, backpressure-aware endpoints

You can integrate these services directly into your data platform or expose them externally.

Developing Internal Data Tools and Utilities

Many companies use Scala to build internal tools — custom job runners, schema validators, data profilers, or monitoring tools. Thanks to its concise syntax and strong typing, Scala is well-suited for writing CLI utilities or internal dashboards that operate on big data.

For instance, Amazon built Deequ in Scala. It’s a data quality library that Amazon uses internally and has released as open source. It runs on Spark and lets teams define checks, compute metrics, and catch issues before bad data hits downstream systems. What’s great is that it works at scale (we’re talking billions of records), and thanks to Scala’s type system and functional style, the whole thing stays readable and easy to extend.
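To give a feel for the "declare checks, then run them" idea, here is a toy in-memory sketch. It is not Deequ's API and does not use Spark. `Record`, `Check`, `CheckResult`, and `runChecks` are hypothetical names, and a real tool would compute these metrics on a cluster rather than over a List.

```scala
// NOT Deequ's API: a hypothetical, in-memory sketch of declaring data
// quality checks and running them over records. All names are illustrative.
final case class Record(id: Int, email: String, amount: Double)

final case class Check(name: String, isValid: Record => Boolean)
final case class CheckResult(name: String, failedIds: List[Int]) {
  def passed: Boolean = failedIds.isEmpty
}

val checks: List[Check] = List(
  Check("email is present", r => r.email.trim.nonEmpty),
  Check("amount is non-negative", r => r.amount >= 0)
)

// Run every check over every record and collect the ids that fail.
def runChecks(records: List[Record], checks: List[Check]): List[CheckResult] =
  checks.map { check =>
    CheckResult(check.name, records.filterNot(check.isValid).map(_.id))
  }

val sample: List[Record] = List(
  Record(1, "a@example.com", 10.0),
  Record(2, "", 5.0),
  Record(3, "c@example.com", -1.0)
)

val report: List[CheckResult] = runChecks(sample, checks)
```

Because each check is just a named function, adding a new rule means appending one line to `checks`, and the runner stays unchanged.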

Afterword

If you’re building large-scale data systems, working with Spark, or designing distributed architectures that need to be reliable and maintainable, Scala is a solid choice. It’s especially useful if you want strong typing, functional programming, and full access to the Java ecosystem. Teams that care about clean code, performance, and long-term scalability will benefit from using Scala. It shines when you’re building custom ETL pipelines, streaming apps, or internal data platforms where control, testability, and expressive logic matter. If your team is already familiar with Java or functional concepts, picking up Scala will feel like a natural next step.

But Scala isn’t for everyone. If you’re prototyping something quick and disposable, or if your team lacks experience with statically typed or functional languages, Scala might slow you down. It has a learning curve, and some parts of the ecosystem can feel complex to newcomers. For simple data tasks, scripting, or small teams that prioritize speed over structure, something like Python might be a better fit. But when you’re building systems that need to scale and survive, Scala pays off.