A data pipeline is a system that moves data from one place to another while transforming it along the way.

Here’s how it works.

First, the pipeline collects data, which could come from databases, APIs, or IoT devices. Then the data is processed, which might involve filtering, aggregating, or transforming it into a specific format. Finally, the cleaned and structured data is delivered to its destination (a data warehouse, for example), where it can be analyzed.
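To make these three steps concrete, here is a minimal Python sketch. One in-memory list stands in for a real source and another stands in for the warehouse. The function names and sample records are hypothetical, chosen only for illustration.

```python
from typing import Iterable

# Hypothetical in-memory "source" standing in for a database, API, or IoT feed.
RAW_RECORDS = [
    {"device": "sensor-1", "temp_c": "21.5"},
    {"device": "sensor-2", "temp_c": "bad-reading"},
    {"device": "sensor-1", "temp_c": "22.0"},
]


def collect() -> list[dict]:
    """Step 1: pull raw records from the source."""
    return list(RAW_RECORDS)


def process(records: Iterable[dict]) -> list[dict]:
    """Step 2: drop unparseable readings and convert values to a fixed format."""
    cleaned = []
    for record in records:
        try:
            temp = float(record["temp_c"])
        except ValueError:
            continue  # filter: skip readings that are not numbers
        cleaned.append({"device": record["device"], "temp_c": temp})
    return cleaned


def deliver(records: list[dict], destination: list[dict]) -> None:
    """Step 3: write structured records to the destination."""
    destination.extend(records)


warehouse: list[dict] = []  # stand-in for a data warehouse table
deliver(process(collect()), warehouse)
print(warehouse)  # → [{'device': 'sensor-1', 'temp_c': 21.5}, {'device': 'sensor-1', 'temp_c': 22.0}]
```

In a production pipeline each function would be backed by a real connector or tool. The shape stays the same: the output of one step becomes the input of the next.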

In a nutshell, a data pipeline automates the flow of information, ensuring that data moves reliably from its source to its final destination.

Let’s take a closer look at the components, types, and examples of implementing one.

Exploring the Data Pipeline Architecture

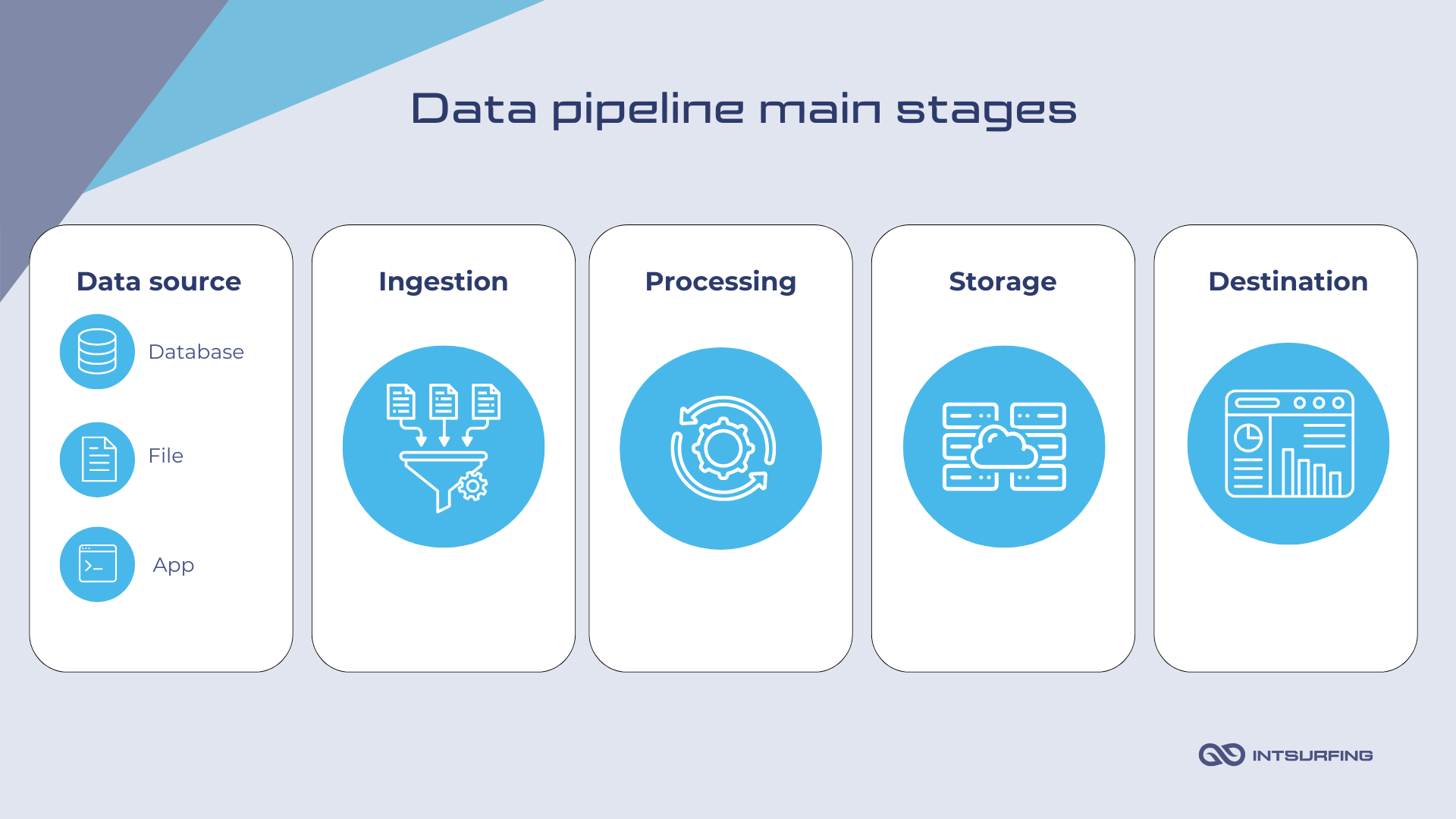

In a data pipeline, data goes through several stages of extraction, processing, and transformation. The number of steps depends on what the final system needs, but the major components are the data source, ingestion, processing, storage, and destination.

Sometimes, the process has just a single step. Other times, it requires multiple transformations and processes to get the data ready for its end use.

Let’s take a look at each of the basic components of a data processing pipeline.

Data Source

Data sources provide the raw data that enters the pipeline. These sources might be:

- Structured data: databases (SQL, NoSQL), data warehouses

- Semi-structured and unstructured data: files (JSON, XML, CSV), logs, streaming data (Kafka, IoT sensors)

- APIs and external sources: Web APIs, third-party services (CRM or social media platforms)

- Cloud storage: S3, Google Cloud Storage, Azure Blob Storage

Multiple sources can feed into the pipeline at the same time, and each source usually has its own format and structure.

Ingestion

At this layer, data is pulled from the source and becomes available for processing. Ingestion can be batch-based, where data is pulled at scheduled intervals, or real-time, where data streams into the pipeline continuously.

Technologies used at this stage:

- Batch ingestion: Apache Sqoop, AWS Glue, Azure Data Factory

- Real-time ingestion: Apache Kafka, Google Pub/Sub, AWS Kinesis
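The difference between the two ingestion modes can be sketched in plain Python. This is a simplified illustration, not how Sqoop or Kafka work internally. The batch function pulls everything newer than a saved checkpoint on each scheduled run, while the generator hands over records one at a time as they arrive. The data, the `id` checkpoint field, and the function names are hypothetical.

```python
import time
from typing import Iterator

# Hypothetical source: a list standing in for a table or an event log.
SOURCE_ROWS = [{"id": i, "amount": i * 10} for i in range(1, 6)]


def batch_ingest(rows: list[dict], since_id: int) -> list[dict]:
    """Batch: on each scheduled run, pull every row newer than the last checkpoint."""
    return [row for row in rows if row["id"] > since_id]


def stream_ingest(rows: list[dict], delay_s: float = 0.0) -> Iterator[dict]:
    """Real-time: yield each row as soon as it 'arrives' (simulated with a delay)."""
    for row in rows:
        time.sleep(delay_s)  # stand-in for waiting on the next message
        yield row


# A scheduled batch run picks up everything after checkpoint id=3.
batch = batch_ingest(SOURCE_ROWS, since_id=3)
print([row["id"] for row in batch])  # → [4, 5]

# A streaming consumer handles one record at a time as it arrives.
for event in stream_ingest(SOURCE_ROWS):
    print("processing event", event["id"])
```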

Processing

Here, raw data is cleaned, transformed, and prepared for its final destination. This might include filtering out errors, removing duplicate records, handling missing data, or converting the data into a standardized format. It can also involve more complex operations, such as joining multiple data sets, aggregating data for insights, or applying business rules.
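Here is a small sketch of these cleaning steps on a handful of hypothetical order records. It is written in plain Python rather than Spark or Flink so it runs anywhere. The rules it applies (deduplicate on `order_id`, default a missing amount to 0, drop invalid amounts, upper-case country codes) are assumptions for illustration. A real pipeline would encode its own business rules.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical raw order records with typical quality problems.
raw_orders = [
    {"order_id": "A1", "country": "us", "amount": "19.99", "date": "2024-03-01"},
    {"order_id": "A1", "country": "us", "amount": "19.99", "date": "2024-03-01"},  # duplicate
    {"order_id": "A2", "country": "DE", "amount": None, "date": "2024-03-02"},     # missing amount
    {"order_id": "A3", "country": "fr", "amount": "n/a", "date": "2024-03-02"},    # invalid amount
]


def clean(records: list[dict], default_amount: float = 0.0) -> list[dict]:
    """Deduplicate, validate, fill missing values, and standardize formats."""
    seen: set[str] = set()
    cleaned = []
    for record in records:
        if record["order_id"] in seen:
            continue  # remove duplicate records by business key
        seen.add(record["order_id"])
        if record["amount"] is None:
            amount = default_amount  # handle missing data (assumed policy: use a default)
        else:
            try:
                amount = float(record["amount"])
            except ValueError:
                continue  # filter out records that fail validation
        cleaned.append({
            "order_id": record["order_id"],
            "country": record["country"].upper(),  # standardize country codes
            "amount": round(amount, 2),
            "date": datetime.strptime(record["date"], "%Y-%m-%d").date(),  # typed date
        })
    return cleaned


def total_by_country(records: list[dict]) -> dict[str, float]:
    """A simple aggregation step: revenue per country."""
    totals: dict[str, float] = defaultdict(float)
    for record in records:
        totals[record["country"]] += record["amount"]
    return dict(totals)


orders = clean(raw_orders)
print(total_by_country(orders))  # → {'US': 19.99, 'DE': 0.0}
```

Whether to default, drop, or flag missing values is a business decision. Defaulting to zero is used here only to keep the example short.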

Examples of processing tools:

- Batch processing: Apache Spark, Hadoop MapReduce, AWS EMR, Azure HDInsight

- Real-time processing: Apache Flink, Google Dataflow, Apache Storm

Storage

Depending on the pipeline’s purpose, data can be stored in a data warehouse (Snowflake, Amazon Redshift, or Google BigQuery) for long-term analysis or in cloud storage for scalability (AWS S3 or Google Cloud Storage).

Destination

This is where the processed data is put to use. The destination could be an analytics platform, a reporting tool, or a dashboard (such as Tableau or Power BI). The data might also feed machine learning models or other applications.

How the Components of Data Processing Pipelines Are Connected

Here’s a simplified flow of how the components connect and interact:

- Data Source → (through connectors/APIs) → Data Ingestion: Extraction of raw data from a source.

- Data Ingestion → (streaming or batch transfer) → Data Processing: The raw data is transferred to processing tools for cleaning, filtering, or enrichment.

- Data Processing → (write to) → Data Storage: The data is moved to a storage layer for further use.

- Orchestration → (manages) → Ingestion, Processing, and Storage: Orchestration tools ensure these stages occur in the right sequence and trigger processes automatically based on workflows.

- Monitoring → (observes all stages) → Pipeline Health Check: Monitoring tools keep track of each step, providing alerts in case of performance issues or errors.
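The orchestration and monitoring roles can be illustrated with a tiny runner. It executes stages in a fixed order, hands each stage’s output to the next, logs how long each stage took, and raises an alert when one fails. This is a hypothetical sketch of the idea, not the API of Airflow, Step Functions, or any other orchestrator. The `alert` function simply logs an error where a real setup would notify someone.

```python
import logging
import time
from typing import Any, Callable

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("pipeline")

Stage = tuple[str, Callable[[Any], Any]]


def alert(message: str) -> None:
    """Hypothetical alert hook; a real setup might page on-call or post to chat."""
    log.error("ALERT: %s", message)


def run_pipeline(stages: list[Stage], payload: Any = None) -> Any:
    """Orchestration: run stages in order, feeding each output into the next stage.
    Monitoring: log each stage's duration, and alert (then re-raise) on failure."""
    for name, stage in stages:
        start = time.perf_counter()
        try:
            payload = stage(payload)
        except Exception as exc:
            alert(f"stage {name!r} failed: {exc}")
            raise
        log.info("stage %s finished in %.3fs", name, time.perf_counter() - start)
    return payload


storage: list[int] = []  # stand-in for a warehouse table


def ingest(_: Any) -> list[int]:
    return [3, 1, 2, 2]  # raw data pulled from a source


def process(rows: list[int]) -> list[int]:
    return sorted(set(rows))  # deduplicate and order


def store(rows: list[int]) -> list[int]:
    storage.extend(rows)  # write to the storage layer
    return rows


result = run_pipeline([("ingest", ingest), ("process", process), ("store", store)])
print(storage)  # → [1, 2, 3]
```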

Let’s say you are using AWS to build a pipeline. The flow might look like this:

- Data Source: Data is stored in an S3 bucket.

- Data Ingestion: AWS Glue is used to extract data from S3 and load it into an Apache Spark cluster for processing.

- Data Processing: Apache Spark processes the data by cleaning and aggregating it. After processing, the data is sent to Amazon Redshift (data warehouse).

- Data Storage: The processed data is stored in Amazon Redshift, where it can be queried for business insights.

- Orchestration: AWS Step Functions or Apache Airflow orchestrate the whole process, ensuring that AWS Glue starts the ingestion, Spark processes it, and Redshift receives the final data.

- Monitoring: AWS CloudWatch monitors each step (Glue, Spark, Redshift) and provides alerts if any failures or delays occur.

Data Pipeline vs ETL: Main Differences

It’s common to hear the terms “data pipeline” and “ETL” used interchangeably, but they are not the same. While both refer to processes that move data between systems, they differ in scope and purpose.

A data pipeline is a broader term that refers to any system that moves data from one place to another. This can involve extracting data, processing it, and sending it to its destination. However, data pipelines aren’t restricted to ETL processes—they can also manage real-time data streaming, feed machine learning workflows, or move data between systems.

An ETL data pipeline, specifically, focuses on three core tasks: Extracting data, Transforming it into a required format, and Loading it into a destination. ETL is a subset of data pipelines. It is generally batch-oriented and best suited to structured data processing.

We’ll break down the key differences between these two approaches below.

| Aspect | Data pipeline | ETL pipeline |
|---|---|---|
| Scope | Broad. Covers any process that moves data, whether for batch processing, real-time streaming, or other workflows. | Narrower. Focused on extracting, transforming, and loading data in a structured, batch-oriented process. |
| Real-time vs. Batch Processing | Can handle both real-time (streaming) and batch processing. Supports flexible data workflows, like ML models or dashboards. | Typically batch-based, moving data at scheduled intervals (e.g., hourly, daily, monthly). |
| Transformation | May or may not include transformation. For example, in real-time streaming, data may simply be passed through. | Always involves transformation. Data is cleaned, aggregated, or enhanced before being loaded. |
| Loading | Varies. Data can be loaded into databases, message queues, machine learning models, or real-time dashboards. | Primarily focuses on loading data into a data warehouse or structured storage for analysis and reporting. |
| Use Cases | Supports a variety of processes: feeding real-time dashboards, syncing databases, or sending data to ML models. | Typically used in data warehousing to structure data for analysis, insights, and reporting. |
| Business Value | Offers flexibility for real-time and batch data flows, allowing businesses to act quickly on live data (e.g., fraud detection). | Organizes data into a consistent, structured format for reporting and analysis. |

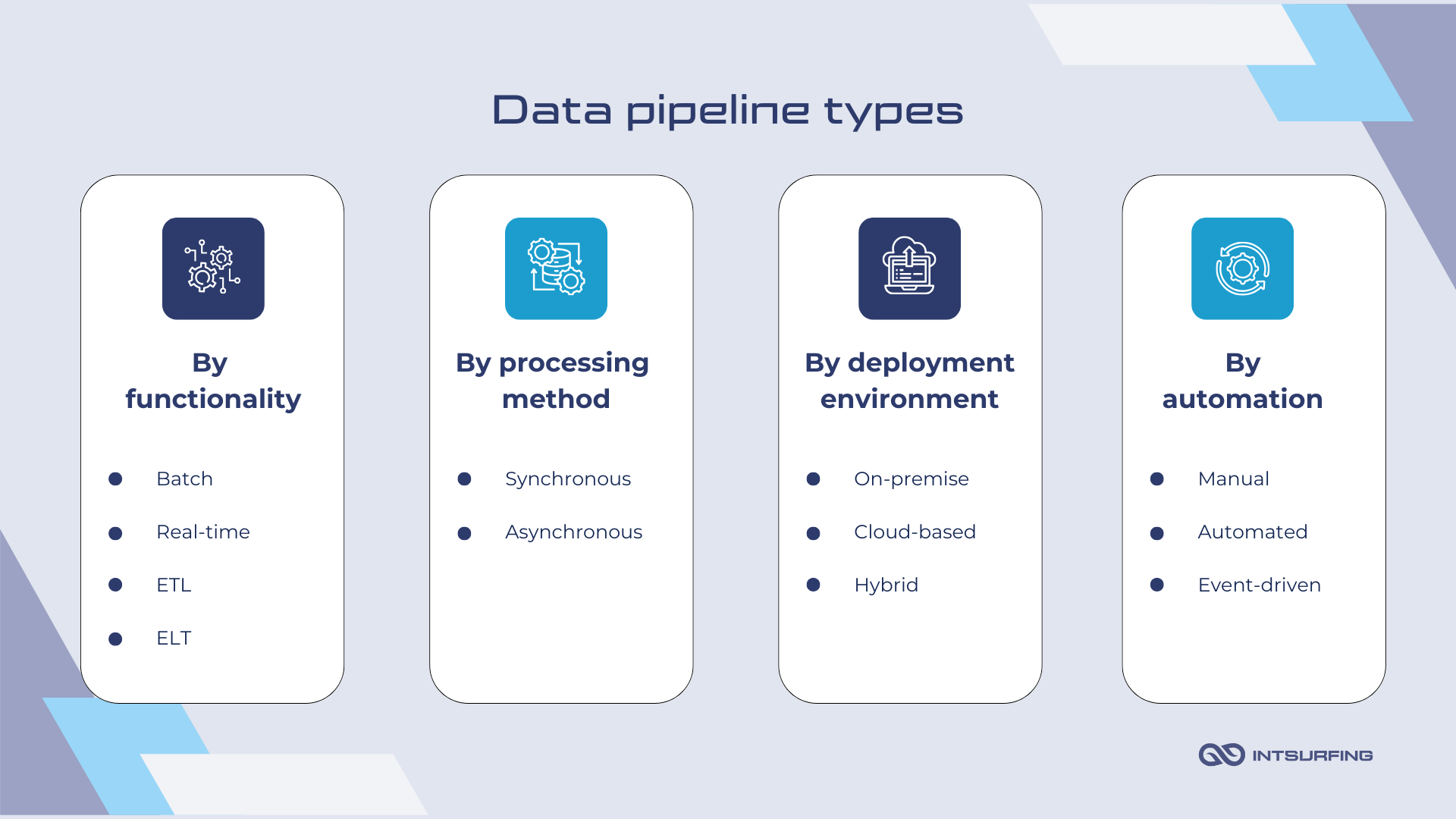

Types of Big Data Pipelines

Data pipelines can be classified in several ways based on how they operate and the environments they use. We group them by functionality, processing method, deployment environment, and automation.

Let’s take a closer look at each of the categories.

By Functionality

Batch Pipelines

They collect and process data in large groups or “batches” at scheduled intervals. This approach works well when real-time data isn’t required, and it allows for handling significant volumes of data all at once. For example, businesses may use batch processing to run reports, update databases, or process payroll.

While it’s not ideal for real-time insights, it’s highly efficient for tasks where time sensitivity isn’t a priority. This type of pipeline is best used in situations where data accumulates over time and can be processed in a single go.

Real-Time Pipelines

These pipelines continuously process and deliver data as soon as it’s available. This type is key for scenarios that demand immediate updates: real-time dashboards, fraud detection, or monitoring systems.

With real-time pipelines, data flows continuously from the source to the destination, allowing businesses to act quickly on live data. This approach is ideal for environments where decisions need to be made instantly based on fresh data, like in financial services or e-commerce.

ETL Pipelines

ETL (Extract, Transform, Load) pipelines focus on extracting data, transforming it to fit a structured format, and then loading it into a data warehouse or database. ETL pipelines are commonly used in batch processes, where the goal is to prepare large sets of data for analysis or reporting. They are perfect for organizations that need clean, reliable data for decision-making.

ELT Pipelines

ELT (Extract, Load, Transform) pipelines follow a slightly different approach. Here, data is first loaded into the destination before the transformation happens.

This is useful when you want to quickly move large amounts of raw data and then process it later. ELT pipelines offer more flexibility, especially when dealing with unstructured data or when the transformation needs to be delayed or customized based on evolving requirements.
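The ETL/ELT difference is mostly about where the transformation runs. The sketch below uses Python’s built-in `sqlite3` module as a stand-in for a data warehouse, which is an assumption for illustration. The ETL path cleans and aggregates the rows in Python before loading them. The ELT path loads the raw rows first and then transforms them with SQL inside the database. The table and column names are hypothetical.

```python
import sqlite3

# Hypothetical raw sales rows: (day, customer, amount), with messy customer names.
raw_rows = [("2024-03-01", "  Alice ", 120), ("2024-03-01", "bob", 80), ("2024-03-02", "alice", 50)]

conn = sqlite3.connect(":memory:")  # stand-in for a warehouse


# ETL: transform in the pipeline, then load only the finished result.
def transform(rows: list[tuple]) -> list[tuple]:
    totals: dict[str, int] = {}
    for _, customer, amount in rows:
        key = customer.strip().lower()  # normalize customer names
        totals[key] = totals.get(key, 0) + amount
    return sorted(totals.items())


conn.execute("CREATE TABLE etl_customer_totals (customer TEXT, total INTEGER)")
conn.executemany("INSERT INTO etl_customer_totals VALUES (?, ?)", transform(raw_rows))

# ELT: load raw rows as-is, then transform inside the destination with SQL.
conn.execute("CREATE TABLE raw_sales (day TEXT, customer TEXT, amount INTEGER)")
conn.executemany("INSERT INTO raw_sales VALUES (?, ?, ?)", raw_rows)
conn.execute(
    """
    CREATE TABLE elt_customer_totals AS
    SELECT lower(trim(customer)) AS customer, SUM(amount) AS total
    FROM raw_sales
    GROUP BY lower(trim(customer))
    """
)

etl = conn.execute("SELECT * FROM etl_customer_totals ORDER BY customer").fetchall()
elt = conn.execute("SELECT * FROM elt_customer_totals ORDER BY customer").fetchall()
print(etl == elt, etl)  # → True [('alice', 170), ('bob', 80)]
```

Both paths produce the same totals, but the ELT version keeps `raw_sales` in the destination, so you can rerun or change the transformation later without extracting the data again.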

By Processing Method

Synchronous

These pipelines process data in a sequence, where each step depends on the completion of the previous one. This means the data flows in a strict, step-by-step manner. For example, data is first extracted, then transformed, and only after that loaded into its destination.

Synchronous pipelines offer more control and predictability, but they can introduce latency because each step waits for the previous one to finish. This makes them a good fit for tasks where accuracy and completeness matter more than speed, such as financial reporting or compliance data processing.

Asynchronous

Asynchronous pipelines, on the other hand, allow different stages of the pipeline to run independently. One step doesn’t have to wait for the previous one to finish. For example, while data is still being extracted, transformation and loading can start happening in parallel. This results in faster processing and reduced latency.

It’s best suited for situations where speed and responsiveness are critical. If your business relies on immediate insights—like in fraud detection or real-time recommendations—an asynchronous pipeline is the way to go.
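The latency difference between the two approaches can be simulated with Python’s `asyncio`. In this sketch, each stage "waits" 0.05 seconds per record to mimic I/O. The synchronous version extracts everything before transforming. The asynchronous version passes records through a queue, so transformation begins as soon as the first record is extracted. The delay value and stage functions are illustrative assumptions.

```python
import asyncio
import time

N_RECORDS = 5
DELAY = 0.05  # simulated I/O time per record, per stage (an assumption)


def run_sync() -> list[int]:
    """Synchronous: finish extracting every record, then transform and load."""
    extracted = []
    for i in range(N_RECORDS):
        time.sleep(DELAY)  # e.g. fetching a page from an API
        extracted.append(i)
    loaded = []
    for item in extracted:
        time.sleep(DELAY)  # e.g. writing a row to the warehouse
        loaded.append(item * 10)
    return loaded


async def extract(queue: asyncio.Queue) -> None:
    for i in range(N_RECORDS):
        await asyncio.sleep(DELAY)
        await queue.put(i)
    await queue.put(None)  # sentinel: extraction is finished


async def transform_and_load(queue: asyncio.Queue, loaded: list[int]) -> None:
    while (item := await queue.get()) is not None:
        await asyncio.sleep(DELAY)
        loaded.append(item * 10)


async def run_async() -> list[int]:
    """Asynchronous: records flow through a queue, so the stages overlap in time."""
    queue: asyncio.Queue = asyncio.Queue()
    loaded: list[int] = []
    await asyncio.gather(extract(queue), transform_and_load(queue, loaded))
    return loaded


for label, runner in [("sync", run_sync), ("async", lambda: asyncio.run(run_async()))]:
    start = time.perf_counter()
    output = runner()
    print(f"{label}: {output} in {time.perf_counter() - start:.2f}s")
```

Both runs produce the same records. With these settings the synchronous run needs roughly 0.5 s and the asynchronous run roughly 0.3 s, because the two stages overlap instead of running back to back.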

By Deployment Environment

On-Premise

On-premise data pipelines run entirely within your organization’s servers and infrastructure. This means all the data processing, storage, and management happens on physical servers your team owns and maintains. While this gives you full control over your data and security measures, it also means higher costs for hardware, maintenance, and scaling.

These pipelines are beneficial when data privacy is a top priority, as in industries with strict compliance requirements such as healthcare or finance. They’re also ideal for companies that already have substantial on-site infrastructure in place. However, scaling can be a challenge, since expanding your capacity often involves buying new hardware.

Cloud-Based

Cloud data pipelines, on the other hand, run entirely on cloud platforms. In this setup, the cloud provider handles storage, scaling, and management of your pipeline. Popular managed services include:

- AWS Data Pipeline is a cloud-based service that helps you automate the movement and transformation of data across different AWS services and on-premise systems. It supports data extraction, processing, and loading, which makes it a good fit for batch processing, ETL workflows, and integration with Amazon S3 and Redshift.

- Azure Data Factory lets you create and manage data pipelines in the cloud. It supports data integration, ETL processes, and scheduled or event-triggered pipeline runs.

- Google Cloud Dataflow is a fully managed service for stream and batch data processing. It lets you build data pipelines that scale dynamically and integrates with other Google Cloud services, such as BigQuery and Cloud Storage.

Cloud-based pipelines reduce the cost of maintaining physical servers and provide the scalability needed to handle dynamic workloads. They are great for businesses that need to scale quickly or handle fluctuating data volumes. They are also ideal for companies that prioritize flexibility and want to leverage cloud-native tools for analytics, machine learning, or real-time processing.

Hybrid

Hybrid pipelines combine both on-premise and cloud environments. In this setup, part of your data processing happens on-premise, while other parts run in the cloud. This gives you the best of both worlds—maintaining control over sensitive data on your own servers, while leveraging the cloud for scaling, storage, or processing power.

This type of pipeline offers flexibility without sacrificing control. It’s ideal for businesses with legacy systems that still need to manage sensitive data on-site but also want the scalability and cost benefits of the cloud.

By Automation

Manual

Manual data pipelines rely on human intervention at each stage of the process. They’re often used in smaller, less complex environments where data updates occur infrequently and real-time processing isn’t a requirement.

The main advantage is control: you can oversee each step. However, running a pipeline by hand carries a high risk of errors due to the reliance on human input, and it can be time-consuming and inefficient for larger datasets. Manual pipelines are best suited for ad-hoc data tasks or small-scale operations.

Automated

Automated pipelines operate without human intervention. Once set up, they continuously process data according to your workflow, whether in real-time or in batches. These pipelines handle large volumes of data and ensure consistency and accuracy.

With automated pipelines, you greatly reduce the need for manual oversight, which lowers the potential for human error and frees up resources. They are perfect for businesses that require regular data updates, structured workflows, or repetitive tasks.

Event-Driven

Event-driven pipelines respond to triggers or events. For example, if new data is added to a system or a transaction occurs, the pipeline is activated to process and move the data.

This type of pipeline is ideal for use cases where immediacy is essential, such as fraud detection, real-time analytics, or user activity monitoring. Event-driven pipelines are highly responsive and integrate well with systems that generate continuous streams of data, such as IoT devices or cloud applications.
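A minimal way to picture an event-driven pipeline is a registry that maps event types to the steps they trigger. In this hypothetical sketch, the source system calls `emit` whenever a transaction is created, and a fraud-screening handler runs immediately. The event name, the `on`/`emit` helpers, and the 10,000 threshold are made up for illustration. In practice, events usually arrive from a message broker or a cloud trigger rather than a direct function call.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]

# Registry mapping each event type to the pipeline steps it triggers.
_handlers: dict[str, list[Handler]] = defaultdict(list)


def on(event_type: str) -> Callable[[Handler], Handler]:
    """Decorator that subscribes a handler to an event type."""
    def register(fn: Handler) -> Handler:
        _handlers[event_type].append(fn)
        return fn
    return register


def emit(event_type: str, payload: dict) -> None:
    """Called by the source system; runs every handler subscribed to this event."""
    for handler in _handlers.get(event_type, []):
        handler(payload)


flagged: list[dict] = []


@on("transaction.created")
def screen_for_fraud(txn: dict) -> None:
    # Hypothetical rule: flag unusually large transactions for review.
    if txn["amount"] > 10_000:
        flagged.append(txn)


emit("transaction.created", {"id": "t1", "amount": 250})
emit("transaction.created", {"id": "t2", "amount": 25_000})
print([t["id"] for t in flagged])  # → ['t2']
```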

Data Pipeline Examples

Many modern data pipelines are deployed on cloud platforms. That’s exactly what we specialize in.

Let’s look at two examples of cloud data pipelines.

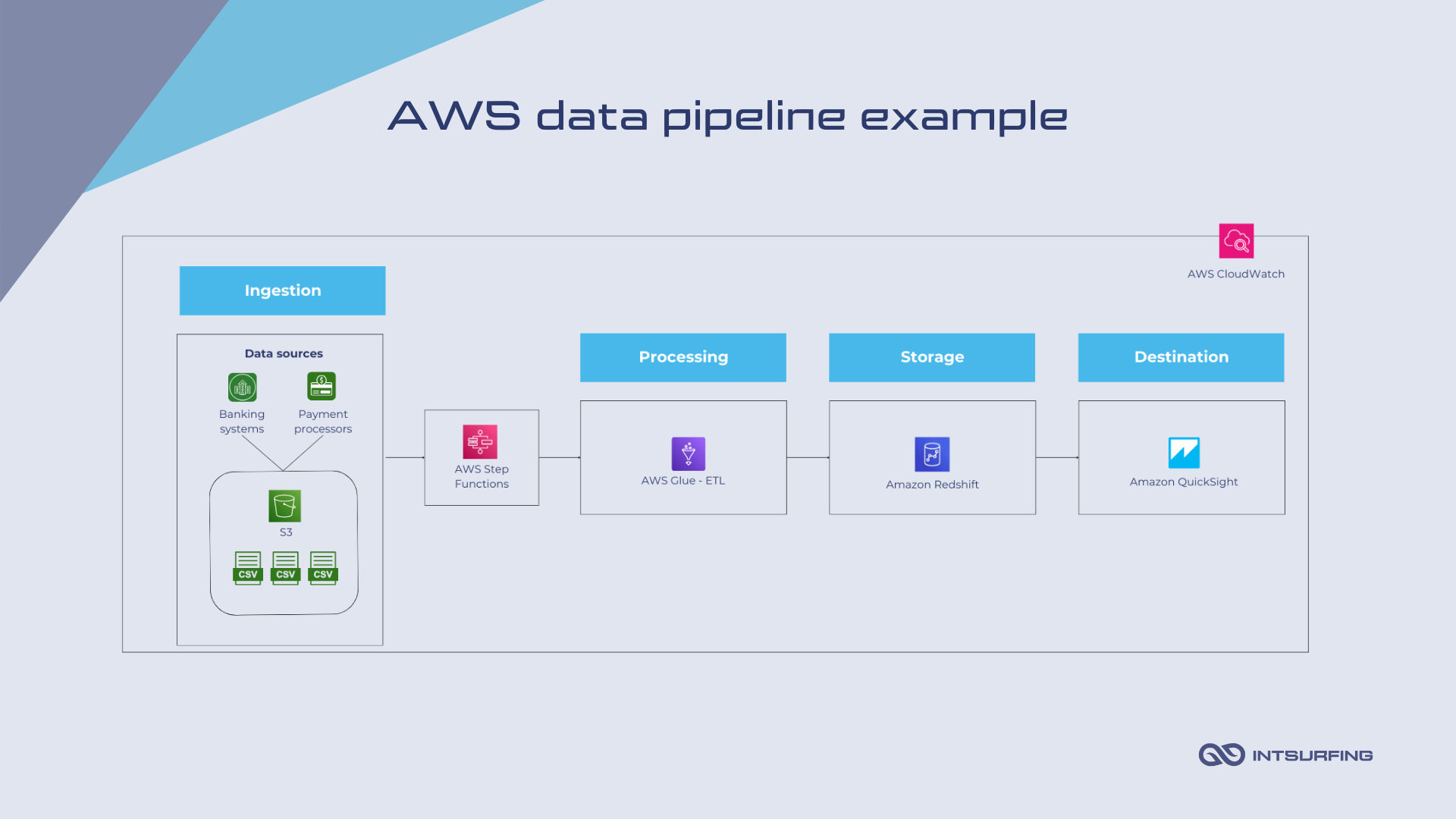

Batch AWS Data Pipeline for Financial Reporting

A financial services company wants to consolidate transaction data from banking systems and payment gateways daily to generate financial reports and compliance documents.

The data pipeline will have the following components:

- Data ingestion (batch). Transaction data is collected daily and stored in AWS S3 as CSV files. These files are generated by banking systems and third-party payment processors.

- Data processing. AWS Glue is used to extract the data from S3, clean it, and perform ETL operations. It joins, filters, and aggregates the data.

- Data storage. The transformed data is loaded into Amazon Redshift, AWS’s data warehouse solution.

- Data analytics. Once the data is in Redshift, Amazon QuickSight is used to build financial reports and dashboards for internal stakeholders and compliance teams.

AWS Step Functions orchestrates this batch pipeline. It starts the AWS Glue jobs once the data is uploaded to S3 and automatically triggers the load into Redshift after processing is complete.
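The orchestration described above could be expressed as an Amazon States Language definition. The sketch below builds one in Python and serializes it to JSON. It assumes the Glue job already exists and uses the Step Functions Glue integration (`startJobRun.sync`, which waits for the job run to finish), followed by an AWS SDK integration call to the Redshift Data API. The job name, cluster, database, user, bucket, and SQL are hypothetical placeholders.

```python
import json

# Hypothetical resource names; replace with your own Glue job, cluster, and SQL.
GLUE_JOB_NAME = "daily-transactions-etl"
REDSHIFT_CLUSTER = "finance-cluster"

state_machine = {
    "Comment": "Daily batch: run the Glue ETL job, then load results into Redshift",
    "StartAt": "RunGlueJob",
    "States": {
        "RunGlueJob": {
            "Type": "Task",
            # The .sync suffix makes Step Functions wait until the Glue job run completes.
            "Resource": "arn:aws:states:::glue:startJobRun.sync",
            "Parameters": {"JobName": GLUE_JOB_NAME},
            "Catch": [{"ErrorEquals": ["States.ALL"], "Next": "PipelineFailed"}],
            "Next": "LoadIntoRedshift",
        },
        "LoadIntoRedshift": {
            "Type": "Task",
            # AWS SDK integration calling the Redshift Data API's ExecuteStatement.
            "Resource": "arn:aws:states:::aws-sdk:redshiftdata:executeStatement",
            "Parameters": {
                "ClusterIdentifier": REDSHIFT_CLUSTER,
                "Database": "finance",
                "DbUser": "etl_user",
                "Sql": "COPY transactions FROM 's3://example-bucket/processed/' "
                       "IAM_ROLE default FORMAT AS PARQUET;",
            },
            "End": True,
        },
        "PipelineFailed": {"Type": "Fail", "Error": "GlueJobFailed", "Cause": "Glue ETL job failed"},
    },
}

definition = json.dumps(state_machine, indent=2)
print(definition)
```

The Redshift Data API call only submits the statement, so if later steps depend on the load finishing you would need to poll for its status. Starting the state machine when a file lands in S3 is typically wired up with an Amazon EventBridge rule, which is outside this snippet.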

AWS CloudWatch monitors the pipeline, tracking the performance of Glue jobs, Redshift queries, and S3 uploads, and sends alerts for job failures or delays.

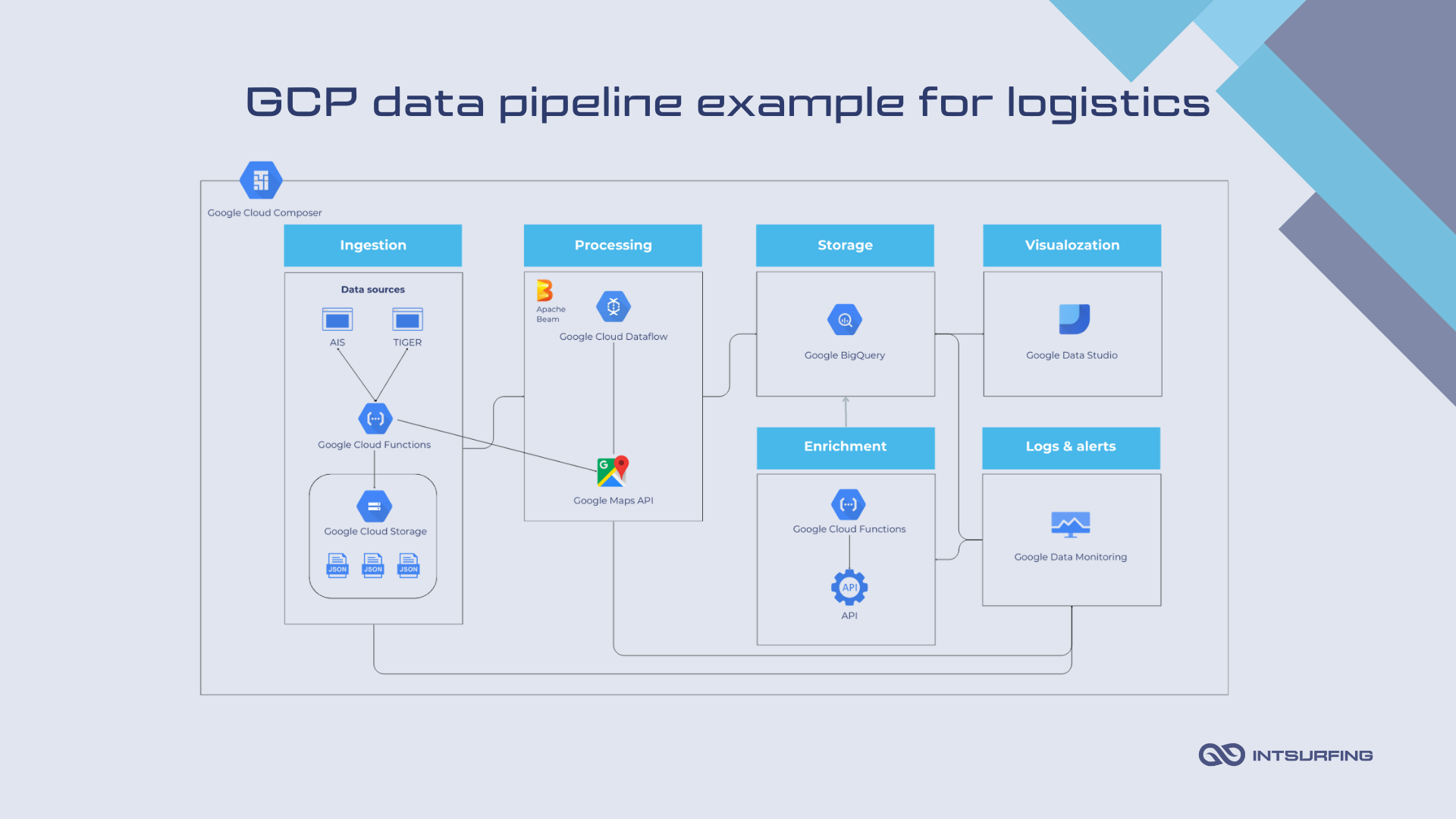

GCP Data Pipeline for Logistics

A logistics company wants to build an address processing system to ingest and process address data from TIGER (Topologically Integrated Geographic Encoding and Referencing) and AIS (Address Information System) databases. The system will standardize, parse, and geocode these addresses for further use in routing and logistics optimization.

- Data ingestion. TIGER and AIS provide the datasets the system ingests. Google Cloud Functions periodically calls these external sources to fetch the latest datasets and stores the raw data in Google Cloud Storage (GCS) as JSON.

- Data processing. Once the address data is ingested, Dataflow (based on Apache Beam) parses and cleans the addresses. This involves:

  - Breaking down the address components (street number, street name, city, state, ZIP code).

  - Bringing the addresses to a consistent format, since address formats vary across TIGER and AIS.

  - Calling the Google Maps Geocoding API from Dataflow to convert addresses into geographic coordinates (latitude and longitude) for geospatial analysis and route optimization.

  - Transforming the cleaned and geocoded data into the required structure.

- Data storage. After the addresses are parsed, cleaned, and geocoded, they are loaded into Google BigQuery. BigQuery serves as a centralized warehouse where the logistics company runs queries to analyze and retrieve the processed address data, for example, selecting all geocoded addresses within a specific city or postal code.

- Data enrichment. To add demographic or traffic information based on the geocoded addresses, Cloud Functions calls external APIs and enriches the address data stored in BigQuery.

- Data visualization. Google Data Studio (now Looker Studio) provides dashboards and visualizations based on the geocoded address data in BigQuery. The logistics team uses these reports to visualize clusters of addresses, analyze delivery routes, or assess geographical coverage.
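The parsing and standardization step can be sketched with a regular expression in plain Python. In the described system, logic like this would run inside a Dataflow (Apache Beam) transform and be followed by the geocoding call; both the Beam wiring and the Geocoding API request are omitted here. The pattern only handles simple one-line US addresses of the form "number street, city, ST 12345", and the suffix table is a tiny hypothetical stand-in for full USPS standardization rules.

```python
import re
from typing import Optional

# Hypothetical abbreviation table; real standardization rules are far larger.
SUFFIXES = {"street": "ST", "st": "ST", "avenue": "AVE", "ave": "AVE", "road": "RD", "rd": "RD"}

ADDRESS_RE = re.compile(
    r"^\s*(?P<number>\d+)\s+(?P<street>.+?)\s*,\s*(?P<city>[^,]+?)\s*,\s*"
    r"(?P<state>[A-Za-z]{2})\s+(?P<zip>\d{5})(?:-\d{4})?\s*$"
)


def parse_address(raw: str) -> Optional[dict]:
    """Split a one-line US address into components and normalize their format."""
    match = ADDRESS_RE.match(raw)
    if match is None:
        return None  # route unparseable rows to a review queue instead of guessing
    parts = match.groupdict()
    words = parts["street"].replace(".", "").split()
    if words:
        # Normalize the street suffix, e.g. "Street" / "st." -> "ST".
        words[-1] = SUFFIXES.get(words[-1].lower(), words[-1])
    return {
        "number": parts["number"],
        "street": " ".join(w.upper() for w in words),
        "city": parts["city"].upper(),
        "state": parts["state"].upper(),
        "zip": parts["zip"],  # ZIP+4 extension is dropped
    }


# Two spellings of the same address normalize to the same record.
a = parse_address("123 Main Street, Springfield, IL 62701")
b = parse_address("123 main st., springfield, il 62701-1234")
print(a == b)  # → True
print(a["street"])  # → MAIN ST
```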

Additionally, Cloud Composer (Google’s managed Apache Airflow service) orchestrates the entire workflow. It makes sure every step happens in the right order, from pulling in data from TIGER and AIS to parsing, geocoding, and storing it in BigQuery. Composer also lets you schedule recurring tasks.

To keep an eye on the pipeline, you can use Google Cloud Monitoring and Google Cloud Logging. They track the pipeline’s performance, log any events, and spot errors. If something goes wrong—like a data ingestion failure or an issue with address processing—alerts are set up to notify the team right away.

Wrapping It Up

Data pipelines help businesses work faster, smarter, and more efficiently. By automating the movement and preparation of data, pipelines take the hassle out of data management and give you timely access to the information you need. Whether you’re processing data in batches or streaming it live, a well-built pipeline keeps your data ready for analysis.