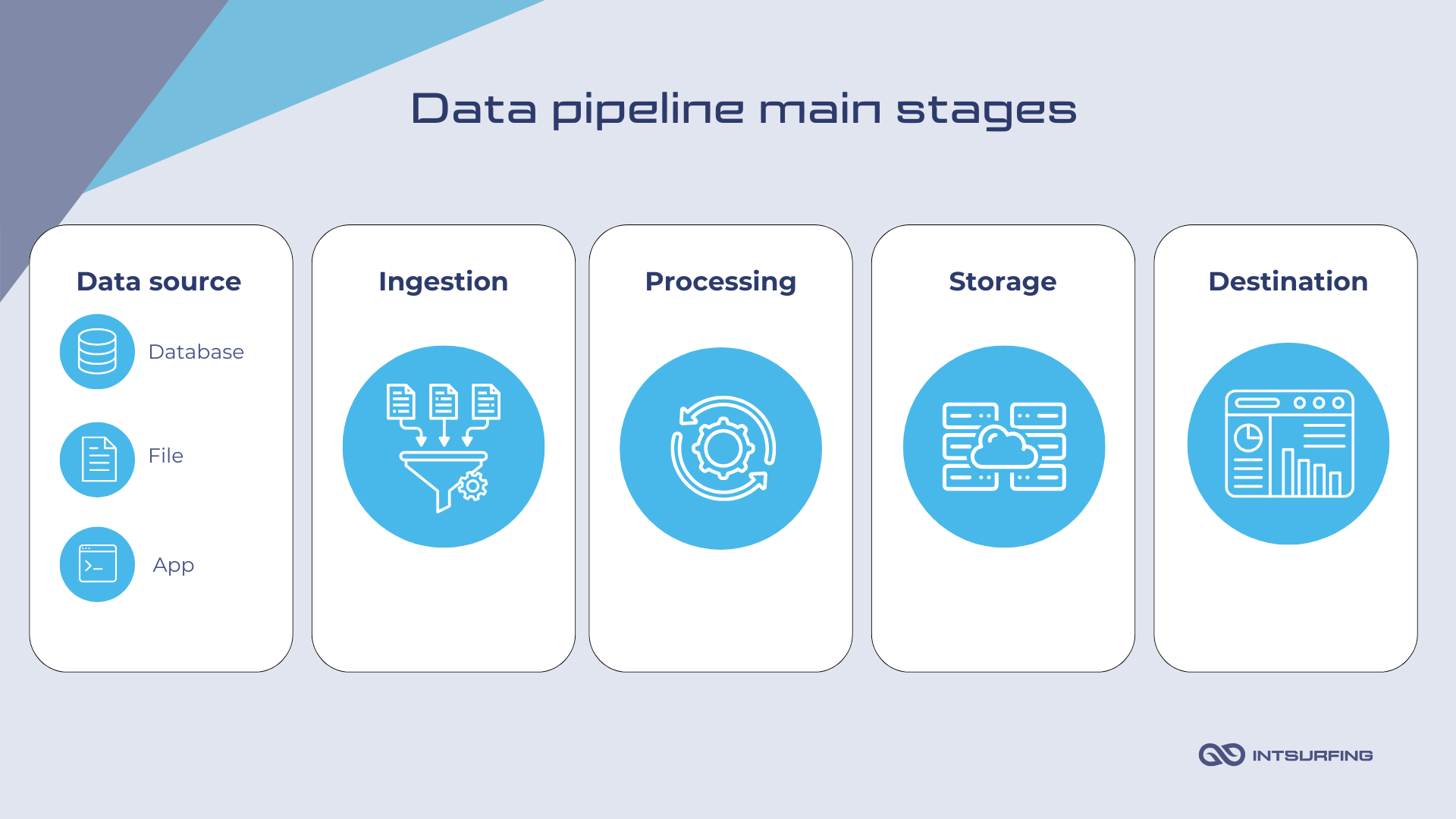

You’ve set up an ETL pipeline on AWS. It works. Data moves. Reports generate.

But over time, latency spikes and storage bills grow.

The system hasn’t failed. It’s just not running at its best. The good news is that you can optimize it.

In this article, we’ll break down how to fine-tune your workflows. You’ll learn how to make AWS work for you—without overspending or overcomplicating.

Signs Your Amazon Cloud ETL Pipeline Needs Optimization

As datasets grow, jobs take longer, cloud bills climb, and failures become more frequent. Many teams only react when problems become critical—but by then, optimization is much harder.

In this section, we’ll cover the common signals that your AWS ETL pipeline needs tuning.

1. Slow Processing Times

Let’s look at the case shared by Amazon.

Pionex, a trading platform, struggled with high-latency data processing. Their existing system couldn’t process the vast amount of market data fast enough. After optimizing the system, the company cut data processing latency by 98%, from 30 minutes to less than 30 seconds, and reduced deployment and maintenance costs by over 80%.

Sure, not every company needs extreme speed like Pionex, but every business needs reliability.

Slow processing times often point to underlying inefficiencies in how your cloud-based ETL pipeline handles compute resources and data distribution. The longer it takes to transform and load data, the more it affects decision-making, real-time analytics, and overall system performance.

2. High AWS Costs

If your AWS costs keep rising but your ETL jobs aren’t running any faster, something is off. More spend doesn’t always mean better performance—it often means wasted resources.

You may need to look at Glue job runtimes. The longer they run, the more you pay. Use AWS Cost Explorer to check if you’re burning DPUs on tasks that should complete faster. If you’re using S3 as a staging area, check request costs—high API calls for small reads and writes can quietly drain your budget. Running EMR Spark jobs? Look for underutilized instances. A cluster that scales too aggressively can rack up charges while most of the compute power sits idle. And if your pipeline moves a lot of data between services or AWS regions, those transfers could be eating a large chunk of your budget.
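To make the link between runtime and cost concrete, here is a rough sketch of how Glue spend scales with DPU count and job duration. The rate of 0.44 USD per DPU-hour is an assumption (check current pricing for your region), the job figures are hypothetical, and minimum billing durations are ignored.

```python
# Rough Glue cost model: cost = DPUs x hours x rate.
# ASSUMPTION: 0.44 USD per DPU-hour; verify against current regional pricing.
ASSUMED_RATE_PER_DPU_HOUR = 0.44


def glue_run_cost(dpus, runtime_minutes, rate=ASSUMED_RATE_PER_DPU_HOUR):
    """Estimate the cost of one job run (ignores minimum billing duration)."""
    return dpus * (runtime_minutes / 60.0) * rate


def monthly_cost(dpus, runtime_minutes, runs_per_day, days=30):
    """Estimate monthly cost for a job that runs `runs_per_day` times a day."""
    return glue_run_cost(dpus, runtime_minutes) * runs_per_day * days


# Hypothetical hourly job on 10 DPUs, before and after tuning.
before = monthly_cost(dpus=10, runtime_minutes=45, runs_per_day=24)
after = monthly_cost(dpus=10, runtime_minutes=15, runs_per_day=24)
savings = before - after
```

Under these assumptions, cutting the runtime from 45 to 15 minutes reduces the monthly bill for this one job by two thirds, which is why long-running jobs are usually the first place to look.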

3. Frequent Job Failures

A well-optimized AWS ETL pipeline shouldn’t fail every other run. If yours does, something deeper is broken.

- Memory issues are a common cause—large datasets or inefficient transformations can push Glue jobs past their memory limits, triggering OOM failures.

- Partition imbalance is another culprit. If one executor gets 80% of the workload while others sit idle, Spark jobs can time out.

- Running on EMR? Check YARN’s resource allocation—if jobs are starving for memory, they will crash.

- Pay attention to data source reliability. If an upstream system delivers corrupt files or inconsistent schemas, jobs can fail before they even start processing.
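Partition imbalance is easy to quantify once you have per-partition record counts. The sketch below computes a simple skew ratio; the threshold of 3.0 is an arbitrary assumption, and the helper names are hypothetical. In PySpark the counts could come from something like `df.rdd.glom().map(len).collect()`.

```python
from statistics import mean


def skew_ratio(partition_sizes):
    """Largest partition divided by the mean partition size (1.0 = balanced)."""
    if not partition_sizes:
        raise ValueError("no partitions given")
    avg = mean(partition_sizes)
    return max(partition_sizes) / avg if avg else 0.0


def is_skewed(partition_sizes, threshold=3.0):
    """Flag the dataset when one partition is far above the average.

    ASSUMPTION: a ratio above 3.0 counts as skewed; tune for your workload.
    """
    return skew_ratio(partition_sizes) > threshold


# One partition holds 80% of the records, as in the example above.
sizes = [8000, 500, 500, 500, 500]
ratio = skew_ratio(sizes)
```

A high ratio suggests repartitioning on a better key or salting the hot key before the heavy transformation runs.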

4. Unpredictable Pipeline Behavior

We’ve seen this problem firsthand. One of our clients came to us with an outdated ETL address parsing system. The problem was that requests were processed with inconsistent delays. Worse, the system lacked the tools to diagnose or fix these delays in real time.

This kind of unpredictability can happen for many reasons. Autoscaling that reacts too late. Jobs that process different partitions at wildly different speeds. Schema changes that break transformations without warning.

These issues create a pipeline you can’t rely on.

5. High I/O and Bottlenecks in Data Storage

Slow cloud ETL jobs aren’t always a compute problem. Sometimes, the real issue is storage.

If your pipeline relies on S3, check for these issues:

- Too many small files – Writing thousands of tiny objects instead of batching data into larger files multiplies S3 requests and slows down listing and reads. Look at the volume of ListObjects API calls to see if your pipeline is generating unnecessary storage requests.

- High GET/PUT request counts – Excessive API calls mean your pipeline is reading and writing inefficiently. Check CloudTrail logs to track where these requests are coming from.
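One way to act on the small-files problem is to plan a compaction pass. The sketch below groups small objects into batches of roughly one target file each. The 128 MB target and the 8 MB cutoff for "small" are assumptions, and the input is a plain list of (key, size) pairs that you would build from an S3 listing.

```python
TARGET_BYTES = 128 * 1024 * 1024    # ASSUMPTION: target size of a merged file
SMALL_FILE_BYTES = 8 * 1024 * 1024  # ASSUMPTION: files below this are "small"


def plan_compaction(objects, target=TARGET_BYTES, small=SMALL_FILE_BYTES):
    """Group small objects into batches whose total size stays within `target`.

    `objects` is a list of (key, size_in_bytes) tuples.
    Returns a list of batches, each a list of keys to merge into one file.
    """
    batches, current, current_size = [], [], 0
    for key, size in sorted(objects):
        if size >= small:
            continue  # already large enough, leave it alone
        if current and current_size + size > target:
            batches.append(current)  # batch is full, start a new one
            current, current_size = [], 0
        current.append(key)
        current_size += size
    if current:
        batches.append(current)
    return batches
```

Each batch can then be read and rewritten as a single larger object, which cuts both the object count and the number of GET requests downstream jobs need.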

For Redshift workloads, storage problems often show up in query slowdowns:

- Long-running queries – If queries take longer than expected, check the Query Execution Plan for bottlenecks in scanning or joins.

- Skewed data distribution – Unevenly distributed data means some slices process more than others, forcing queries to wait for the slowest node. Use distribution keys to balance the load.

If your ETL jobs feel sluggish, don’t just throw more compute at them—storage optimization might be the fix.

6. Unnecessary Data Movement and Inefficient Queries

Not all data needs to be copied. But in many cloud ETL pipelines, data moves back and forth between services without a clear reason. Every transfer adds latency, increases costs, and strains resources. If your AWS bill is higher than expected, unnecessary data movement might be a big part of the problem.

Let’s look at S3 to Redshift copy operations. Are you reloading full datasets every time instead of applying incremental updates? Copying everything over might seem like a safe approach, but it’s expensive and slow.

Query performance suffers from similar inefficiencies. Athena queries, for example, can be deceptively expensive if they scan entire datasets. Without proper partitioning, even a simple lookup can trigger a full table scan.
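Incremental loading usually comes down to tracking a watermark. Here is a minimal sketch that picks only the files modified since the last successful load. The function name and the input shape, a list of (key, last_modified) pairs, are assumptions for illustration; where the watermark is stored is up to you.

```python
from datetime import datetime, timezone


def select_new_files(files, watermark):
    """Return keys modified after `watermark`, plus the updated watermark.

    `files` is a list of (key, last_modified) tuples with timezone-aware
    datetimes. If nothing is new, the watermark is returned unchanged.
    """
    fresh = [(key, ts) for key, ts in files if ts > watermark]
    new_watermark = max((ts for _, ts in fresh), default=watermark)
    return sorted(key for key, _ in fresh), new_watermark


# Hypothetical listing of a staging prefix.
listing = [
    ("staging/orders-01.parquet", datetime(2024, 1, 1, tzinfo=timezone.utc)),
    ("staging/orders-03.parquet", datetime(2024, 1, 3, tzinfo=timezone.utc)),
    ("staging/orders-04.parquet", datetime(2024, 1, 4, tzinfo=timezone.utc)),
]
last_load = datetime(2024, 1, 2, tzinfo=timezone.utc)
to_load, last_load = select_new_files(listing, last_load)
```

Only the returned keys need to go into the next load, for example through a COPY manifest, and the watermark should be persisted only after that load succeeds.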

How to Optimize Cloud-Based ETL Architecture

You can’t fix an ETL pipeline without knowing what’s wrong. Before making changes, you need to look at the whole system—how data moves, where delays happen, and what’s consuming the most resources. Sometimes, the problem is storage. Other times, it’s inefficient queries or unnecessary data transfers.

We see these patterns all the time. Some solutions are simple, others less so. Here are a few of the most effective ways we optimize cloud-based ETL pipelines.

Compute Optimization

AWS offers multiple ETL cloud services. Picking the wrong one—or failing to optimize—can lead to slow jobs and unnecessary costs.

Choosing the Right ETL Engine

AWS Glue, EMR, and Lambda all support ETL workloads, but they serve different purposes:

- AWS Glue – A serverless ETL engine that simplifies management but can get costly for large-scale jobs. Best for batch processing and structured transformations.

- Amazon EMR (Spark) – A flexible big data processing platform that requires manual tuning. Ideal for large-scale ETL and machine learning workloads.

- AWS Lambda – A serverless function-based approach that’s good for event-driven ETL but has strict memory and execution time limits (max 15 minutes).

Picking the right engine depends on the size, complexity, and frequency of your ETL jobs. But even after making the right choice, tuning matters—especially for Spark-based workloads.

Optimizing Spark in AWS Glue and EMR

A poorly optimized Spark job can take more time than you expected. If processing is slow, the issue is often how Spark manages memory, partitions, and shuffle operations.

Here’s how to speed up Spark jobs in AWS Glue and EMR:

- Reduce shuffle operations – Large `groupBy` or `join` operations trigger excessive data shuffling between nodes. Use broadcast joins when possible to minimize data movement.

- Adjust memory settings – Fine-tune `spark.executor.memory` and `spark.driver.memory` to prevent out-of-memory errors and unnecessary garbage collection.

- Optimize parallelism – Set `spark.sql.shuffle.partitions=200` (or another appropriate value based on cluster size) to balance processing across nodes and avoid too many small tasks.

- Use columnar file formats – Store data in Parquet or ORC instead of CSV or JSON to reduce I/O overhead.

- Enable dynamic allocation (EMR only) – Let Spark scale executors up and down automatically based on workload demand.
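The settings above can be collected into one configuration and passed to `spark-submit`. The sketch below is illustrative only: the 128 MB per shuffle partition rule of thumb, the memory sizes, and the 50 MB broadcast threshold are assumptions, and the helper names are hypothetical. The Spark property names themselves are standard. On Glue, memory is largely determined by the worker type, so the memory keys matter mostly on EMR.

```python
import math

TARGET_PARTITION_MB = 128  # ASSUMPTION: rule-of-thumb size per shuffle partition


def shuffle_partitions(shuffle_data_gb, total_cores):
    """Pick a partition count: about 128 MB each, and at least one per core."""
    by_size = math.ceil(shuffle_data_gb * 1024 / TARGET_PARTITION_MB)
    return max(by_size, total_cores)


def spark_conf(shuffle_data_gb, total_cores, executor_memory="8g",
               driver_memory="4g", broadcast_threshold_mb=50):
    """Build a dict of Spark properties from a few sizing inputs."""
    return {
        "spark.sql.shuffle.partitions":
            str(shuffle_partitions(shuffle_data_gb, total_cores)),
        "spark.executor.memory": executor_memory,
        "spark.driver.memory": driver_memory,
        # Tables below this size (in bytes) are broadcast instead of shuffled.
        "spark.sql.autoBroadcastJoinThreshold":
            str(broadcast_threshold_mb * 1024 * 1024),
        "spark.dynamicAllocation.enabled": "true",
    }


def to_submit_args(conf):
    """Turn the properties into repeated `--conf key=value` arguments."""
    args = []
    for key, value in conf.items():
        args += ["--conf", f"{key}={value}"]
    return args


# Hypothetical job: 100 GB shuffled on a cluster with 64 cores in total.
conf = spark_conf(shuffle_data_gb=100, total_cores=64)
submit_args = to_submit_args(conf)
```

Treat the numbers as a starting point and confirm them against the Spark UI: stage durations, spill, and task counts will tell you whether the partition count and memory settings actually fit the job.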

Storage Optimization

Whether you use Amazon S3, Redshift, or DynamoDB, the right setup makes all the difference. A well-optimized storage strategy reduces I/O overhead, speeds up analytics, and keeps costs under control.

Here are some general tips on how to optimize storage for your Amazon ETL pipeline:

- Choose the right format – Columnar formats (Parquet and ORC) reduce I/O and improve query speed. Raw CSV files slow the system down and take up more space.

- Partition data logically – In S3, partitioning by date, region, or another field you filter on often speeds up queries, as long as its cardinality stays low enough to avoid creating many tiny partitions. In Redshift, using the right distribution key prevents some nodes from overloading.

- Compress data to reduce processing time – Use Snappy for fast decompression, Gzip for a higher compression ratio, or Zstd for a balance of both.

- Avoid too many small files – Thousands of tiny objects slow down S3 and make Redshift queries inefficient. Batch and merge data before storing it.

- Manage storage lifecycle – Move rarely accessed data to S3 Glacier instead of keeping it in expensive tiers. Use TTL policies in DynamoDB to delete old records automatically.
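Logical partitioning in S3 typically means Hive-style key prefixes, which engines like Athena, Glue, and Spark can use to skip data. A minimal sketch of building such a prefix follows; the bucket name and the choice of region and date as partition columns are assumptions for illustration.

```python
from datetime import date


def partition_prefix(base, event_date, region):
    """Build a Hive-style prefix: .../region=eu/year=2024/month=01/day=15/"""
    return (
        f"{base.rstrip('/')}/region={region}"
        f"/year={event_date:%Y}/month={event_date:%m}/day={event_date:%d}/"
    )


# Hypothetical bucket and dataset.
prefix = partition_prefix("s3://example-bucket/events", date(2024, 1, 15), "eu")
```

Queries that filter on `region`, `year`, `month`, or `day` can then read only the matching prefixes instead of the whole dataset.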

Cost Optimization

Many teams unknowingly overspend on AWS due to inefficient ETL design. Frankly, cloud ETL pipelines don’t always need the highest compute tier or the most aggressive scaling. Sometimes, small changes, such as choosing the right instance type or reducing API calls, can make a big difference. Let’s go through the most effective ways to lower costs without sacrificing performance.

Reserved vs. Spot Instances: When to Use Each

AWS pricing models can be confusing, but picking the right one can slash compute costs.

- Reserved Instances – Best for predictable workloads. If your ETL jobs run on a schedule, reserving capacity for one or three years locks in lower rates.

- Spot Instances – Ideal for non-urgent jobs that can handle interruptions. If your workload can tolerate sudden shutdowns and retries, spot pricing can cut costs by up to 90%.

For most pipelines, a mix works best. Run essential jobs on reserved or on-demand instances, and use spot instances for less urgent processing.
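Spot savings are only real if they survive the cost of retries. The following back-of-the-envelope sketch compares the two options; the hourly rate, the 70% discount, and the 15% rework overhead are all hypothetical numbers, not AWS figures.

```python
def compute_cost(node_hours, hourly_rate, discount=0.0, rework_fraction=0.0):
    """Cost of `node_hours` at a discounted rate, inflated by retried work.

    `rework_fraction` is the share of extra node-hours spent re-running
    tasks lost to interruptions (0.15 means 15% more work).
    """
    return node_hours * (1 + rework_fraction) * hourly_rate * (1 - discount)


# Hypothetical workload: 1,000 node-hours at 0.40 USD per hour on demand.
on_demand = compute_cost(1000, 0.40)
spot = compute_cost(1000, 0.40, discount=0.70, rework_fraction=0.15)
saved_share = 1 - spot / on_demand
```

With these inputs, spot still comes out well ahead even after paying for the retried work. If interruptions force much more rework, or the job has a hard deadline, the balance shifts back toward on-demand or reserved capacity.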

Autoscaling and On-Demand Compute

Paying for unused resources is a waste. Autoscaling helps, but only if configured correctly.

- Right-size your instances – Monitor CPU and memory usage. If your nodes are barely working, downgrade to a smaller instance type.

- Scale based on actual demand – Don’t just set fixed instance counts. Use AWS Auto Scaling to adjust resources dynamically based on queue length and processing time.

- Limit over-provisioning – Some workloads need a buffer, but adding too many nodes “just in case” racks up costs fast.
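Scaling on queue length and processing time can be reduced to a small calculation. This sketch sizes a worker pool so that the current backlog drains within a target window; the throughput figure, the drain target, and the worker limits are assumptions you would replace with measured values.

```python
import math


def desired_workers(queue_length, msgs_per_worker_per_min, target_drain_minutes,
                    min_workers=1, max_workers=20):
    """Workers needed to drain the backlog in time, clamped to a safe range."""
    capacity_per_worker = msgs_per_worker_per_min * target_drain_minutes
    needed = math.ceil(queue_length / capacity_per_worker)
    return max(min_workers, min(max_workers, needed))


# Hypothetical backlog: 12,000 messages, 100 per worker per minute,
# and a goal of clearing the queue within 10 minutes.
workers = desired_workers(12000, 100, 10)
```

The `max_workers` cap is what limits over-provisioning: a sudden burst raises the worker count only up to a ceiling you have chosen deliberately.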

Monitor Spending with AWS Cost Explorer and Trusted Advisor

Guessing where your money goes isn’t an option. AWS Cost Explorer breaks down service-level expenses, so you can see which part of your cloud ETL pipeline is eating the budget. AWS Trusted Advisor helps flag cost-saving opportunities, in particular unused resources and underutilized instances.

Set up alerts in AWS Budgets to avoid surprises. If costs spike unexpectedly, investigate before the bill arrives.
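If you export daily cost figures, a simple spike check can complement budget alerts. The sketch below compares the latest day with the average of the preceding week; the 7-day window and the 1.5x factor are arbitrary assumptions, and the function name is hypothetical.

```python
from statistics import mean


def is_cost_spike(daily_costs, window=7, factor=1.5):
    """True if the latest day exceeds `factor` times the prior `window`-day mean."""
    if len(daily_costs) < window + 1:
        return False  # not enough history to judge
    baseline = mean(daily_costs[-(window + 1):-1])
    return daily_costs[-1] > factor * baseline


# Hypothetical daily spend in USD, oldest first.
history = [100, 100, 100, 100, 100, 100, 100, 180]
spike = is_cost_spike(history)
```

A check like this catches a runaway job within a day, instead of at the end of the billing cycle.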

Avoid Unnecessary API Calls and Data Transfers

Frequent API calls and redundant data movement drive up costs.

- Reduce excessive S3 API requests – If your pipeline constantly reads and writes small files, batch operations instead.

- Minimize inter-region data transfers – Moving data across AWS regions adds hidden costs. Keep processing close to where data is stored.

- Optimize Glue and Redshift usage – Avoid full table scans in queries. Partition data correctly to minimize unnecessary reads.

Every part of the pipeline, from compute to storage, offers ways to save if you know where to look.

Data Transfer & Network Efficiency

One of the most overlooked ETL optimizations is data movement. Every time data is transferred across AWS services (e.g., from S3 to Redshift), it incurs latency and costs.

Here is what you can do to minimize data movement:

- Process data in-place: Instead of moving large datasets, use AWS Glue’s pushdown predicates to skip partitions you don’t need before the data is read.

- Use VPC endpoints: Keep traffic to services such as S3 inside AWS’s private network. A gateway endpoint for S3 avoids routing that traffic through a NAT gateway and paying its data processing charges.

- Keep workloads in the same AWS region: Moving data across regions significantly increases cost and latency.
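As an illustration of pushdown predicates, the sketch below builds the predicate string that Glue accepts through the `push_down_predicate` parameter. The helper name, database, table, and partition columns are hypothetical, and the values are assumed to be trusted, since they are interpolated directly into the expression.

```python
def partition_predicate(**partitions):
    """Build a predicate such as: year == '2024' and month == '01'"""
    return " and ".join(f"{col} == '{val}'" for col, val in partitions.items())


predicate = partition_predicate(year="2024", month="01")

# Inside a Glue job, the predicate would be passed when reading the table
# (database and table names here are hypothetical):
#
# dyf = glue_context.create_dynamic_frame.from_catalog(
#     database="example_db",
#     table_name="events",
#     push_down_predicate=predicate,
# )
```

Because the filter is applied to partition metadata, partitions that don’t match are never listed or read from S3.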

Orchestration & Dependency Management

An ETL job runs. Then another. But what happens when the second job starts before the first one finishes? Or when a failure goes unnoticed, breaking downstream data?

A good orchestration strategy controls dependencies, schedules tasks, and catches failures before they cause problems. Without it, even a well-built ETL pipeline can turn into a mess of unpredictable behavior and rising costs.

Let’s go over the key ways to keep ETL workflows structured, scalable, and reliable.

Step Functions vs. Managed Airflow vs. Glue Workflows

AWS offers multiple cloud ETL technologies for orchestration. Choosing the right one depends on how complex your workflows are and how much control you need.

- Step Functions – Best for simple, serverless ETL workflows that don’t require deep customization. Works well when chaining Lambda functions or managing state between Glue jobs.

- Managed Workflows for Apache Airflow (MWAA) – A good choice if you need fine-grained control over task execution, retries, and dependencies. Works well for multi-step ETL processes that involve multiple services, not just AWS.

- Glue Workflows – Designed specifically for AWS Glue. If your pipeline is built mostly around Glue jobs, this is the simplest way to manage execution order and dependencies.

For lightweight workflows with a few steps, Step Functions often work best. If you need complex DAG-based orchestration with conditional logic, retries, and failure handling, Airflow is the better option.
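For a sense of what a lightweight Step Functions workflow looks like, here is a sketch that generates a two-step state machine definition with retries and failure handling. The job names, retry settings, and state names are assumptions; the structure follows the Amazon States Language, and the Glue integration waits for each job run to finish before moving on.

```python
import json


def glue_task(job_name, next_state=None):
    """A Task state that runs a Glue job and waits for it to complete."""
    state = {
        "Type": "Task",
        "Resource": "arn:aws:states:::glue:startJobRun.sync",
        "Parameters": {"JobName": job_name},
        # ASSUMPTION: retry twice with backoff before giving up.
        "Retry": [{
            "ErrorEquals": ["States.ALL"],
            "IntervalSeconds": 60,
            "MaxAttempts": 2,
            "BackoffRate": 2.0,
        }],
        "Catch": [{"ErrorEquals": ["States.ALL"], "Next": "JobFailed"}],
    }
    if next_state:
        state["Next"] = next_state
    else:
        state["End"] = True
    return state


definition = {
    "StartAt": "Extract",
    "States": {
        # Hypothetical Glue job names.
        "Extract": glue_task("example-extract-job", next_state="Transform"),
        "Transform": glue_task("example-transform-job"),
        "JobFailed": {
            "Type": "Fail",
            "Error": "EtlJobFailed",
            "Cause": "A Glue job failed after retries",
        },
    },
}
definition_json = json.dumps(definition, indent=2)
```

The second job cannot start until the first one succeeds, and any failure that survives the retries ends in an explicit failed state rather than passing unnoticed.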

Event-Driven vs. Scheduled Orchestration

How you trigger ETL jobs affects both performance and cost. Some workflows run best on a fixed schedule, while others should start only when new data arrives.

- Event-driven pipelines – Use Lambda with SNS/SQS for near real-time processing. If data lands in an S3 bucket, a Lambda function can trigger the ETL job instantly. This reduces idle time and prevents unnecessary runs.

- Scheduled pipelines – Best for batch jobs that need to run at specific times (e.g., nightly aggregations). Use Cron jobs, Step Functions, or Airflow schedules to define fixed execution times.

Event-driven ETL is more dynamic, reducing unnecessary processing when there’s no new data. Scheduled ETL is more predictable, making it easier to plan resource allocation.
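An event-driven trigger can be a very small Lambda function. The sketch below assumes a direct S3 event notification (rather than SNS or SQS in between), a hypothetical Glue job name, and a hypothetical `--source_path` job argument. The `glue_client` parameter exists only so the handler can be tested without AWS access.

```python
from urllib.parse import unquote_plus

JOB_NAME = "example-etl-job"  # hypothetical Glue job name


def handler(event, context, glue_client=None):
    """Start one Glue job run per object in an S3 event notification."""
    if glue_client is None:
        import boto3  # available in the Lambda Python runtime
        glue_client = boto3.client("glue")

    run_ids = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = unquote_plus(record["s3"]["object"]["key"])
        response = glue_client.start_job_run(
            JobName=JOB_NAME,
            # "--source_path" is a hypothetical argument the job would read.
            Arguments={"--source_path": f"s3://{bucket}/{key}"},
        )
        run_ids.append(response["JobRunId"])
    return {"started": run_ids}
```

For high-volume buckets, starting one job run per object gets expensive quickly. Putting a queue in front and processing files in batches is usually the safer design.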

Monitoring & Alerting

No matter how well you design an AWS ETL architecture, failures will happen. The key is catching them early and having the right tools in place to diagnose the issue.

- CloudWatch – The first place to check for AWS service logs and metrics. Set up alarms for job failures, high memory usage, or unexpected execution times.

- AWS X-Ray – Helps trace requests through complex workflows, especially when debugging Step Functions and Lambda-based ETL jobs.

- Prometheus & Grafana – Best for custom monitoring and visualizing ETL performance metrics. Works well if you’re running ETL on EMR clusters or Kubernetes-based environments.

Setting up alerts for slow-running jobs, unexpected failures, or resource spikes saves time and prevents costly surprises. A pipeline without monitoring is just waiting for a silent failure.
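As an example of alerting on slow-running jobs, the sketch below builds the parameters for a CloudWatch alarm on job duration. It assumes your pipeline publishes a custom metric; the namespace `Custom/ETL`, the metric name `JobDurationSeconds`, and the SNS topic ARN are all hypothetical. The parameter names match what `put_metric_alarm` expects.

```python
def duration_alarm(job_name, max_minutes, sns_topic_arn):
    """Build put_metric_alarm parameters for a job that runs too long."""
    return {
        "AlarmName": f"{job_name}-slow-run",
        "Namespace": "Custom/ETL",           # hypothetical custom namespace
        "MetricName": "JobDurationSeconds",  # hypothetical custom metric
        "Dimensions": [{"Name": "JobName", "Value": job_name}],
        "Statistic": "Maximum",
        "Period": 300,
        "EvaluationPeriods": 1,
        "Threshold": max_minutes * 60,
        "ComparisonOperator": "GreaterThanThreshold",
        "TreatMissingData": "notBreaching",  # no runs means no alarm
        "AlarmActions": [sns_topic_arn],
    }


params = duration_alarm(
    "nightly-load", 30, "arn:aws:sns:us-east-1:123456789012:etl-alerts"
)

# To create the alarm (requires AWS credentials):
# import boto3
# boto3.client("cloudwatch").put_metric_alarm(**params)
```

Keeping alarm definitions in code like this makes it easier to apply the same thresholds consistently across every job in the pipeline.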

Conclusion

A well-optimized cloud ETL pipeline delivers fast, reliable data without breaking the budget. That means tuning compute, fixing storage inefficiencies, and managing workflows properly. If something feels slow or costly, don’t ignore it: investigate, optimize, and track improvements. Small changes add up, and a well-structured pipeline is far easier to keep performant as data volumes grow.