Let’s say you’ve got millions of PDFs and you need to parse unstructured data from them.

Doesn’t sound that hard, right?

It is when the PDFs are scanned images cluttered with tables and multi-column layouts.

Parsing them manually or with traditional tools becomes a losing game.

We’ve found a way to parse PDF files like these, using Anthropic’s Claude models to do the extraction.

In this article, we’ll show you how we made it work in one of our projects.

Understanding the Problem of PDF Data Parsing

In a recent data parsing project, we had to process 18 million PDF files, all containing financial reports.

Our goal was to extract company turnover data. But was that simple?

Nope.

Because we had to deal with a few things:

- The files had varied layouts. They often contained tables, plain text, or detailed descriptions. The turnover data could appear almost anywhere.

- Identifying the data we needed was complex. Some patterns helped: turnover data was often on the initial pages, and the keyword “turnover” allowed us to filter out irrelevant files.

- Parsing 18 million files on a single virtual machine or EC2 instance would have taken months. This wasn’t feasible within the project’s deadlines.

These challenges made it clear we couldn’t rely on traditional methods alone. We needed a smarter, AI-driven approach to tackle the scale and complexity of this project.
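As a rough illustration of the keyword filter mentioned in the list above, the sketch below checks whether a keyword appears in the first few pages of a document’s extracted text. The function name `is_relevant`, the `max_pages` default, and the list-of-page-strings input are assumptions made for this example, not part of the project code.

```python
def is_relevant(pages, keyword="turnover", max_pages=3):
    """Return True if `keyword` occurs (case-insensitively) in the first pages.

    `pages` is a list of strings, one per page of extracted text.
    Only the first `max_pages` pages are checked.
    """
    needle = keyword.lower()
    return any(needle in page.lower() for page in pages[:max_pages])


# Hypothetical documents, already converted to text page by page
report = ["Annual report 2022", "Turnover for the year was 1,250,000", "Notes"]
memo = ["Directors' memo", "No financial statements attached"]

print(is_relevant(report))
print(is_relevant(memo))
```

Files that fail a check like this can be skipped before any text is sent to the API.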

However, implementing AI for Python data parsing was easier said than done.

Initially, the model produced inconsistent outputs. Numbers were rounded, returned as text, or inaccurately placed.

Here is what we did to make things work.

How to Parse Data from PDF: Our Solution

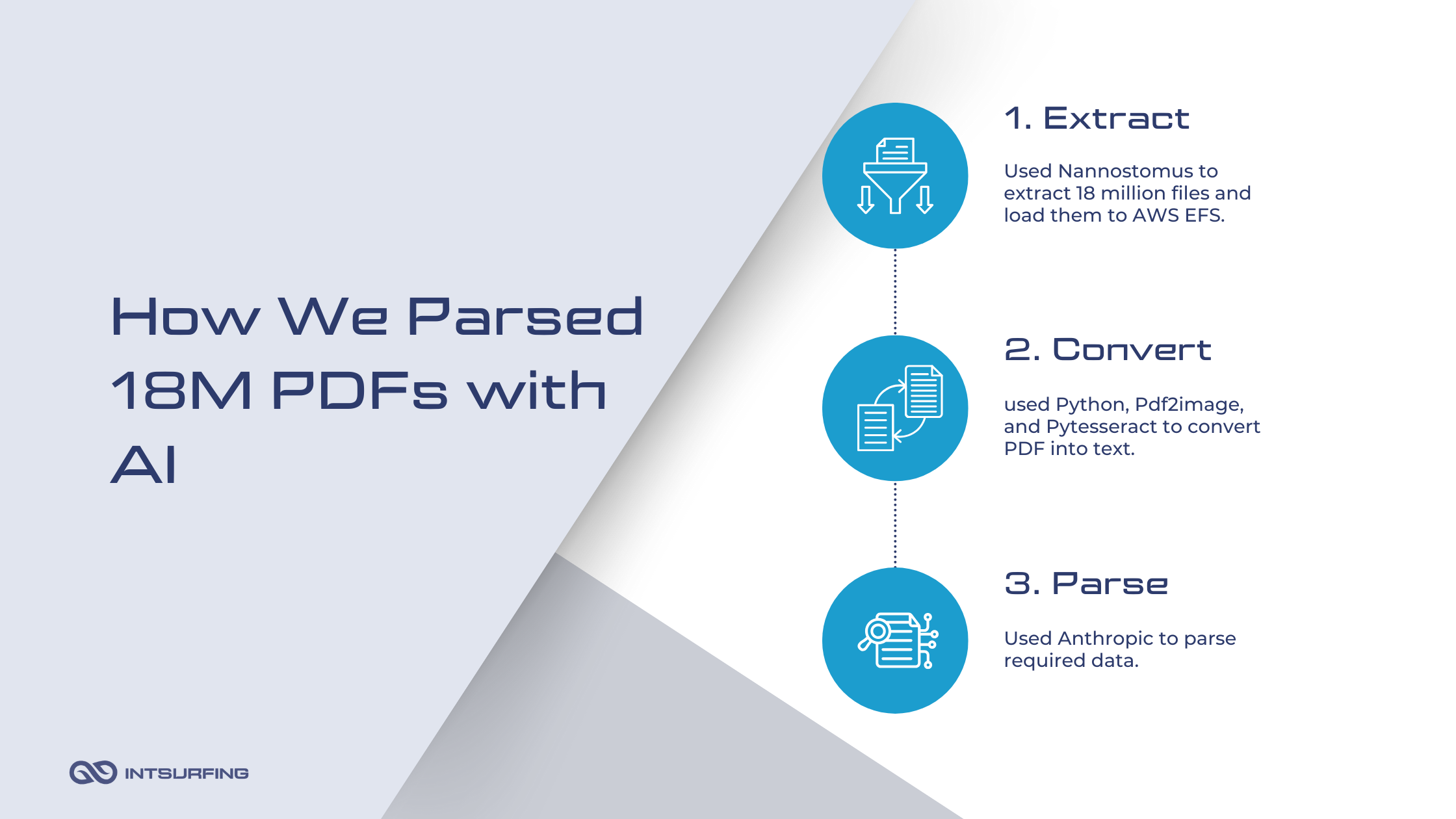

To tackle the challenges of parsing 18 million PDFs, we designed a streamlined, AI-powered workflow that combined automation, scalable infrastructure, and data extraction techniques. The process was broken into three key steps:

- Extracting PDF files and organizing them. First, we downloaded all 18 million files to AWS EFS. Files were organized for easy access, and worker lifecycle automation kept operations efficient and costs low.

- Converting PDFs to text using Python, pdf2image, and pytesseract. You can learn more about how to convert a PDF to text in this article.

- Parsing PDF data with Anthropic. We implemented structured prompts and fine-tuned subprompts to achieve consistent and accurate extraction of both turnover values and corresponding years. The results were saved into structured CSV files for easy analysis.
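The conversion step in the list above can be sketched roughly as follows. This is a minimal example, not the project’s actual code: it assumes `pdf2image` (which needs Poppler) and `pytesseract` (which needs the Tesseract binary) are installed. The function name `pdf_to_text` and the `render` and `ocr` parameters are hypothetical, added only so the function can be tried without those tools.

```python
def pdf_to_text(pdf_path, max_pages=None, dpi=300, render=None, ocr=None):
    """OCR a scanned PDF and return its text, one page after another.

    `render` turns a PDF path into a list of page images and `ocr` turns one
    image into text. They default to pdf2image and pytesseract; passing
    stand-ins lets the function run without Poppler or Tesseract.
    """
    if render is None:
        from pdf2image import convert_from_path  # requires Poppler

        def render(path):
            # last_page limits rendering to the first `max_pages` pages
            return convert_from_path(path, dpi=dpi, last_page=max_pages)

    if ocr is None:
        import pytesseract  # requires the Tesseract binary

        ocr = pytesseract.image_to_string

    images = render(pdf_path)
    if max_pages is not None:
        images = images[:max_pages]
    return "\n".join(ocr(image) for image in images)
```

With both tools installed, a call such as `pdf_to_text("report.pdf", max_pages=5)` would OCR only the first five pages, which fits the observation that turnover data usually sits near the start of a report.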

Role of Anthropic Software in Getting Data from a PDF Using Parsing

Before we move further, let’s figure out what Anthropic is.

Anthropic is a company that builds AI systems. They create AI tools that are easy to use, aligned with human values, and less likely to produce harmful or biased results. Their technology is used to process and analyze large amounts of data, answer questions, and automate tasks, often in complex or technical fields.

We chose this platform for two reasons.

First, its Python SDK simplifies integration. The setup is straightforward: import the library, initialize the client with your API key, and start crafting prompts. The SDK also makes it easy to manage token counting and cost monitoring. You can get the input and output token counts for each request, which gives you control over resource usage.

Second, Anthropic’s API works with JSON Schema through its tool use feature. You define what the output should look like, including required fields, and the model returns its answer in that structure.

How to Parse Data from PDF using Anthropic

Using Anthropic to parse data involves setting up its Python SDK and defining structured prompts to extract specific information.

In this project, we used “claude-3-haiku-20240307”. You can find more models here.

Here’s a step-by-step guide to getting started:

- Import the Anthropic SDK, plus the standard-library modules used in the steps below.

import json
import re

from anthropic import Anthropic

- Create a client instance to authenticate and communicate with the Anthropic API. You’ll need an API key to proceed.

client = Anthropic(api_key=ANTHROPIC_EMERSON_KEY)

- Define the message you’ll send to Anthropic. This message should include:

  - Anthropic model (e.g., claude-3-haiku-20240307).

  - Token limit for the response.

  - User prompts and content to parse.

  - JSON Schema object with the expected parameters.

Here’s an example for extracting turnover data:

# anthropic_model, content, and KEY_WORDS are defined elsewhere in the pipeline
message = client.messages.create(
    model=anthropic_model,
    max_tokens=1024,
    messages=[{"role": "user", "content": content}],
    tools=[
        {
            "name": "get_possible_value",
            "description": f"{KEY_WORDS} value from companies accounts info",
            "input_schema": {
                "type": "object",
                "properties": {
                    "year": {
                        "type": "number",
                        "description": "The year information provided",
                    },
                    "value": {
                        "type": "number",
                        "description": f"The exact value of the {KEY_WORDS}",
                    },
                },
                "required": ["value"],
            },
        }
    ],
)

- Once Anthropic processes the input, you can extract the results. Use logic to locate relevant data blocks to ensure only the required fields are returned.

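# The response content is a list of blocks (source is assumed to be message.content)
source = message.content
tool_use_blocks = []
if source and isinstance(source, list):
    for block in source:
        # Keep only the input of tool_use blocks produced by our tool
        if (
            getattr(block, "type", None) == "tool_use"
            and getattr(block, "name", None) == "get_possible_value"
        ):
            tool_use_blocks.append(block.input)

# Serialize the collected results as a JSON string
tool_use_blocks = json.dumps(tool_use_blocks)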
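Since early outputs sometimes came back as text rather than numbers, it can help to check the collected tool inputs before saving them. The sketch below is one possible way to do that and to write the rows to CSV. The helper names `clean_results` and `write_rows` and the sample data are hypothetical. It expects the list of dictionaries as collected before serialization (or the result of `json.loads` on the serialized string).

```python
import csv
import io


def clean_results(tool_inputs):
    """Keep only entries whose "value" is a real number; "year" is optional."""
    rows = []
    for item in tool_inputs:
        value = item.get("value")
        # bool is a subclass of int, so exclude it explicitly
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            continue
        year = item.get("year")
        has_year = isinstance(year, (int, float)) and not isinstance(year, bool)
        rows.append({"year": int(year) if has_year else "", "value": value})
    return rows


def write_rows(rows, fileobj):
    """Write the cleaned rows as CSV with a header line."""
    writer = csv.DictWriter(fileobj, fieldnames=["year", "value"])
    writer.writeheader()
    writer.writerows(rows)


# Hypothetical tool inputs for one file
sample = [
    {"year": 2021, "value": 1250000},
    {"year": 2022, "value": "1.3 million"},  # text instead of a number: dropped
    {"value": 980000.5},                     # no year: kept with an empty year
]

buffer = io.StringIO()
write_rows(clean_results(sample), buffer)
print(buffer.getvalue())
```

In a real run, `buffer` would be replaced by a file opened with `newline=""`, as the `csv` module recommends.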

You can dynamically adjust the KEY_WORDS and schema to match the data points you’re looking for. For instance, replace “turnover” with any keyword relevant to your dataset.
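One way to make the keyword swappable is to build the request from a function that takes the keyword as a parameter. The sketch below is an illustration under assumptions: `build_request` is a hypothetical helper, and the `tool_choice` entry is an addition that is not part of the request shown earlier. It asks the model to always answer through the named tool, which may help keep outputs consistent.

```python
def build_request(content, keyword, model="claude-3-haiku-20240307"):
    """Build the keyword arguments for client.messages.create()."""
    tool_name = "get_possible_value"
    tool = {
        "name": tool_name,
        "description": f"{keyword} value from companies accounts info",
        "input_schema": {
            "type": "object",
            "properties": {
                "year": {
                    "type": "number",
                    "description": "The year information provided",
                },
                "value": {
                    "type": "number",
                    "description": f"The exact value of the {keyword}",
                },
            },
            "required": ["value"],
        },
    }
    return {
        "model": model,
        "max_tokens": 1024,
        "messages": [{"role": "user", "content": content}],
        "tools": [tool],
        # Assumption for this sketch: force the model to respond via this tool
        "tool_choice": {"type": "tool", "name": tool_name},
    }


request = build_request("Net profit for 2022 was 310,000", "net profit")
print(request["tools"][0]["description"])
```

The request would then be sent with `client.messages.create(**request)`.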

How to Calculate the Cost of Batch PDF Data Parse with Anthropic

Anthropic’s pricing is token-based, meaning every input and output token contributes to the total spend. Here’s how we monitored and optimized costs during our project.

You can calculate the number of tokens in both the input (text sent to the API) and output (response received).

Here’s the Python code we used to monitor costs:

# Read the token counts reported in the response's usage data
# (these include the tokens used by the tool definition)
in_tokens = message.usage.input_tokens
out_tokens = message.usage.output_tokens

# Calculate total API spend (prices are USD per 1,000 tokens for Claude 3 Haiku)
def calculate_api_spend(in_tokens, out_tokens):
    input_spend = (in_tokens / 1000) * 0.00025
    output_spend = (out_tokens / 1000) * 0.00125
    return input_spend + output_spend

# Print a processing summary
# (total_files, total_in_tokens, total_out_tokens, and total_spend are
# running totals kept while looping over the files)
print("\n--- Processing Summary ---")
print(f"Total files processed: {total_files}")
print(f"Total input tokens: {total_in_tokens}")
print(f"Total output tokens: {total_out_tokens}")
print(f"Total API spend: ${total_spend:.5f}")
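To make the arithmetic concrete, here is a small self-contained sketch that accumulates the totals used in the summary. The per-file token counts are made-up numbers for illustration only, and the constant names are hypothetical. The prices are the per-1,000-token rates used in the article’s formula.

```python
PRICE_PER_1K_INPUT = 0.00025   # USD per 1,000 input tokens
PRICE_PER_1K_OUTPUT = 0.00125  # USD per 1,000 output tokens


def calculate_api_spend(in_tokens, out_tokens):
    """Return the spend in USD for one request."""
    input_spend = (in_tokens / 1000) * PRICE_PER_1K_INPUT
    output_spend = (out_tokens / 1000) * PRICE_PER_1K_OUTPUT
    return input_spend + output_spend


# Hypothetical (input_tokens, output_tokens) pairs, one per processed file
usage_per_file = [(800, 60), (1200, 75), (950, 64)]

total_files = 0
total_in_tokens = 0
total_out_tokens = 0
total_spend = 0.0

for in_tokens, out_tokens in usage_per_file:
    total_files += 1
    total_in_tokens += in_tokens
    total_out_tokens += out_tokens
    total_spend += calculate_api_spend(in_tokens, out_tokens)

print(f"Total files processed: {total_files}")
print(f"Total input tokens: {total_in_tokens}")
print(f"Total output tokens: {total_out_tokens}")
print(f"Total API spend: ${total_spend:.5f}")
```

Input tokens dominate the bill in a setup like this, which is why trimming the text sent to the API has such a large effect.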

How to Reduce Costs with Targeted Text Processing

To optimize costs, we reduced the amount of text sent to Anthropic. Instead of sending entire documents, we extracted only the relevant parts—lines or characters surrounding specific keywords.

This approach reduced the token count by a factor of 4–5.

Here’s the function we used to trim the input:

# Requires "import re"; KEY_WORDS, CHARS_BEFORE_KEYWORDS, and
# CHARS_AFTER_KEYWORDS are constants defined elsewhere in the pipeline
def extract_relevant_chars(text):
    relevant_text = []
    for keyword in KEY_WORDS.split(","):
        keyword = keyword.strip()
        # Case-insensitive search for the keyword
        matches = [m.start() for m in re.finditer(re.escape(keyword), text, re.IGNORECASE)]
        for match_start in matches:
            start = max(match_start - CHARS_BEFORE_KEYWORDS, 0)
            end = min(match_start + len(keyword) + CHARS_AFTER_KEYWORDS, len(text))
            relevant_text.append(text[start:end])
    return "\n".join(relevant_text)
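One thing to watch with a windowing function like this: when keywords occur close together, their windows overlap and the same text is sent more than once. A possible variant, sketched here as an assumption rather than what the project used, merges overlapping windows before joining them. The name `extract_merged_windows` and its parameters are hypothetical.

```python
import re


def extract_merged_windows(text, keywords, before=200, after=200):
    """Return the text around each keyword match, merging overlapping windows."""
    spans = []
    for keyword in keywords:
        # Case-insensitive search for the keyword
        for match in re.finditer(re.escape(keyword), text, re.IGNORECASE):
            start = max(match.start() - before, 0)
            end = min(match.end() + after, len(text))
            spans.append((start, end))

    # Sort the windows by position and merge any that overlap or touch
    spans.sort()
    merged = []
    for start, end in spans:
        if merged and start <= merged[-1][1]:
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))

    return "\n".join(text[start:end] for start, end in merged)


sample_text = "aaa turnover bbb turnover ccc"
print(extract_merged_windows(sample_text, ["turnover"], before=2, after=2))
print(extract_merged_windows(sample_text, ["turnover"], before=5, after=5))
```

With the narrow windows the two matches stay separate. With the wider ones they merge into a single passage, so no character is sent twice.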

Final Words

Parsing 18 million PDFs required a robust and scalable solution, and Anthropic delivered exactly that. By integrating Anthropic with Python and AWS, we created a workflow capable of extracting structured data from unstructured PDFs.

Using Anthropic’s JSON schema support, we ensured every output adhered to a predefined structure.

We trimmed the input text to the passages surrounding our keywords, which reduced token usage by a factor of 4–5.

With token usage tracking and cost calculation built into the workflow, we maintained full control over expenses and achieved an average cost of 0.6–0.7 cents per 1,000 files.