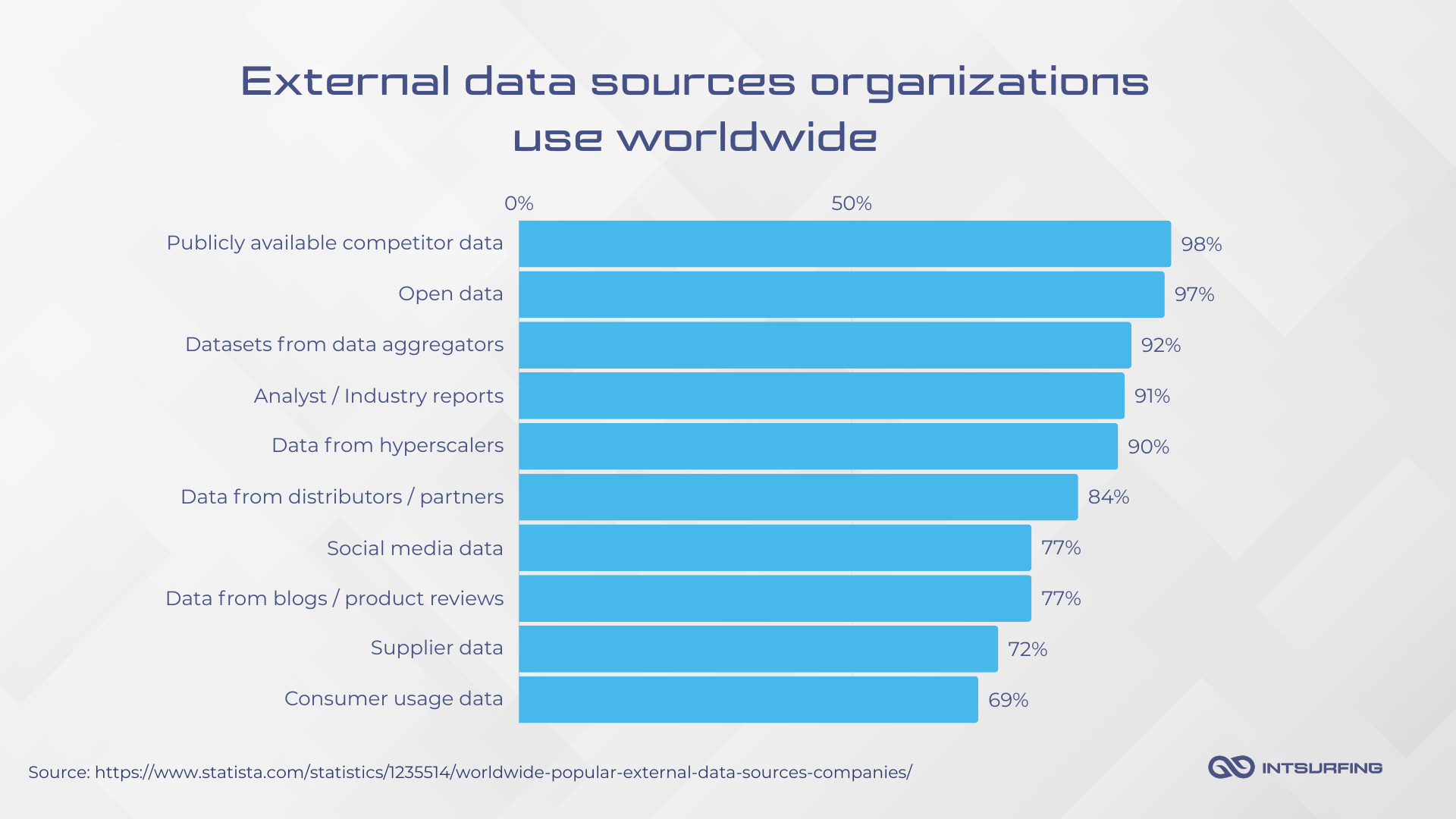

Data-driven companies don’t rely on a single source of information. They pull insights from competitor data, open datasets, proprietary reports, and industry aggregators.

That’s what Statista proves.

The more external data you collect, the sharper your decision-making.

But with external data, you’re dealing with different formats, missing values, inconsistent timestamps, and duplication issues. When you’re pulling from dozens of sources, these challenges only multiply.

In this scenario, ETL (Extract, Transform, Load) is the backbone of any solid data infrastructure. If you would like to learn what ETL is in detail, we invite you to read this article. But if you’re ready to see how to implement it in your architecture, stick with us.

In this guide, we’ll break down the six essential steps of the ETL development process.

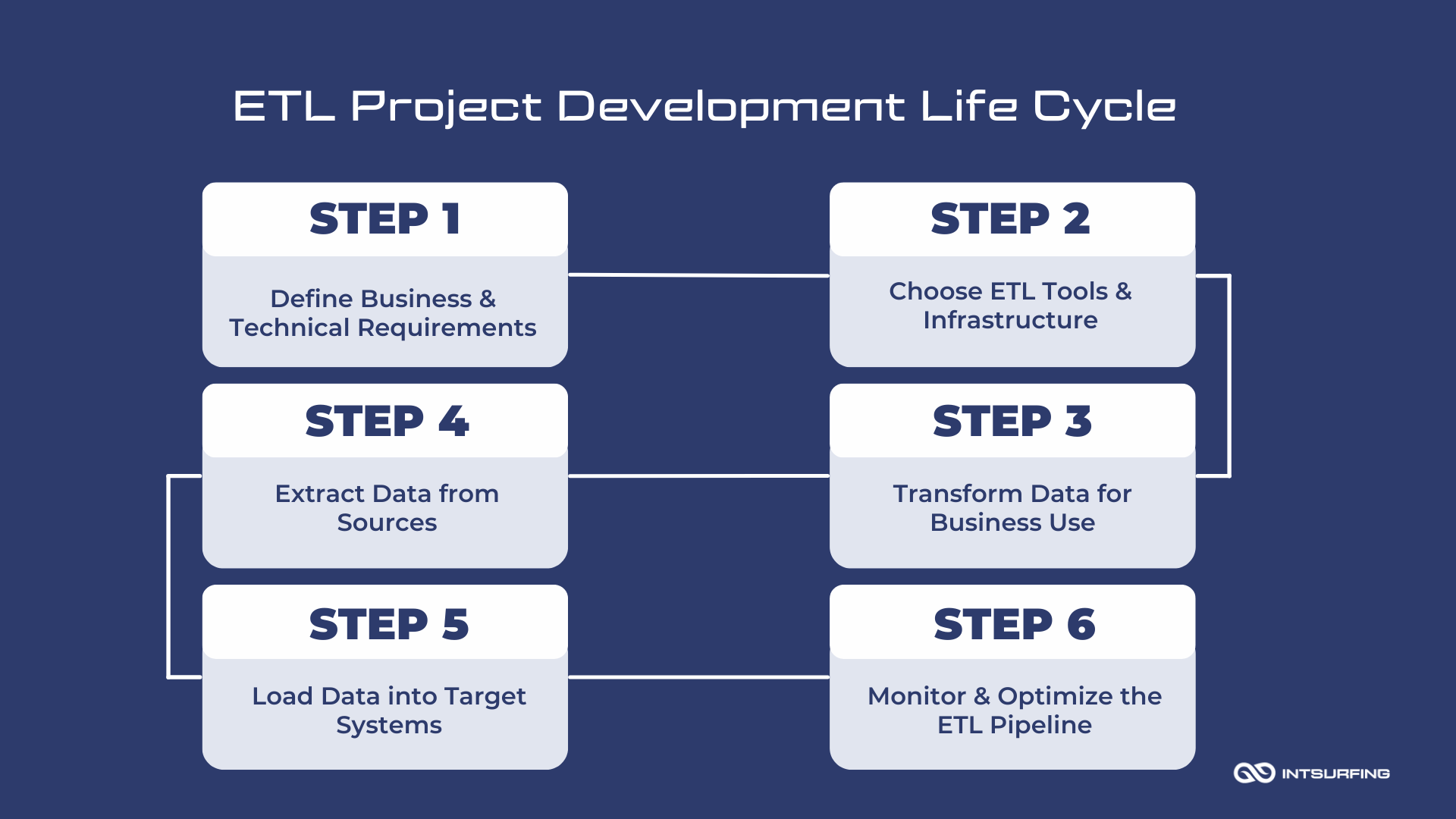

What Is an ETL Project Development Life Cycle?

The exact steps in your ETL project depend on your data sources, business goals, infrastructure, and compliance requirements.

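To structure the process, experienced ETL solution architects map out a custom roadmap. However, most projects follow a similar lifecycle: define requirements, choose tools and infrastructure, extract, transform, load, and then monitor and optimize.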

Let’s start with the foundation—defining your business and technical requirements.

Step 1: Define Business & Technical Requirements

A well-planned ETL process should serve your business needs and fit into your technical ecosystem.

Let’s break it down.

Business Perspective

Your ETL pipeline must align with how your business uses data. That means defining:

- Where your data is coming from. Are you pulling from databases, APIs, flat files, or third-party aggregators?

- What you’ll use the data for. Real-time analytics, reporting, and machine learning all have different processing needs.

- Compliance & security requirements. If your industry is regulated (think GDPR, HIPAA, SOC 2), your ETL process needs built-in safeguards.

- Performance expectations. How fresh should your data be? What’s the acceptable latency? Do you need guaranteed SLAs?

Technical Perspective

Once the business goals are clear, it’s time to set up the technical foundation. The right choices here ensure your ETL pipeline can handle your data’s volume, speed, and complexity.

- Data structure matters. Will you be dealing with structured SQL databases, or with semi-structured and unstructured sources (for example, JSON files and logs)?

- Batch or real-time? If data flows in slowly, batch processing may be enough. But for live dashboards and AI models, real-time streaming is a must.

- Cloud or on-prem? AWS, GCP, and Azure offer scalable ETL solutions, but on-premises setups give you more control.

- Integration with existing tools. Your ETL should work well with reporting systems or other tools you use.

Making these decisions before development starts saves time, resources, and costly adjustments later.
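One way to keep these decisions visible is to write them down as a small typed spec that your pipeline code can check. The sketch below is only an illustration: the class, its field names, and the sub-minute rule of thumb are all hypothetical, not part of any ETL tool.

```python
from dataclasses import dataclass
from enum import Enum


class ProcessingMode(Enum):
    BATCH = "batch"
    MICRO_BATCH = "micro_batch"
    STREAMING = "streaming"


@dataclass(frozen=True)
class PipelineRequirements:
    """Hypothetical record of the decisions made in Step 1."""

    sources: tuple[str, ...]
    use_case: str
    max_latency_seconds: int
    mode: ProcessingMode
    compliance: tuple[str, ...] = ()

    def problems(self) -> list[str]:
        """Return readable inconsistencies; an empty list means the spec is coherent."""
        issues = []
        if not self.sources:
            issues.append("no data sources listed")
        if self.max_latency_seconds <= 0:
            issues.append("max_latency_seconds must be positive")
        # Illustrative rule of thumb: scheduled batches rarely meet sub-minute targets.
        if self.mode is ProcessingMode.BATCH and self.max_latency_seconds < 60:
            issues.append("batch mode is unlikely to meet a sub-minute latency target")
        return issues
```

A reporting pipeline that tolerates a day of latency passes cleanly, while a "live dashboard" spec that asks for five-second freshness in batch mode gets flagged before anyone writes pipeline code.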

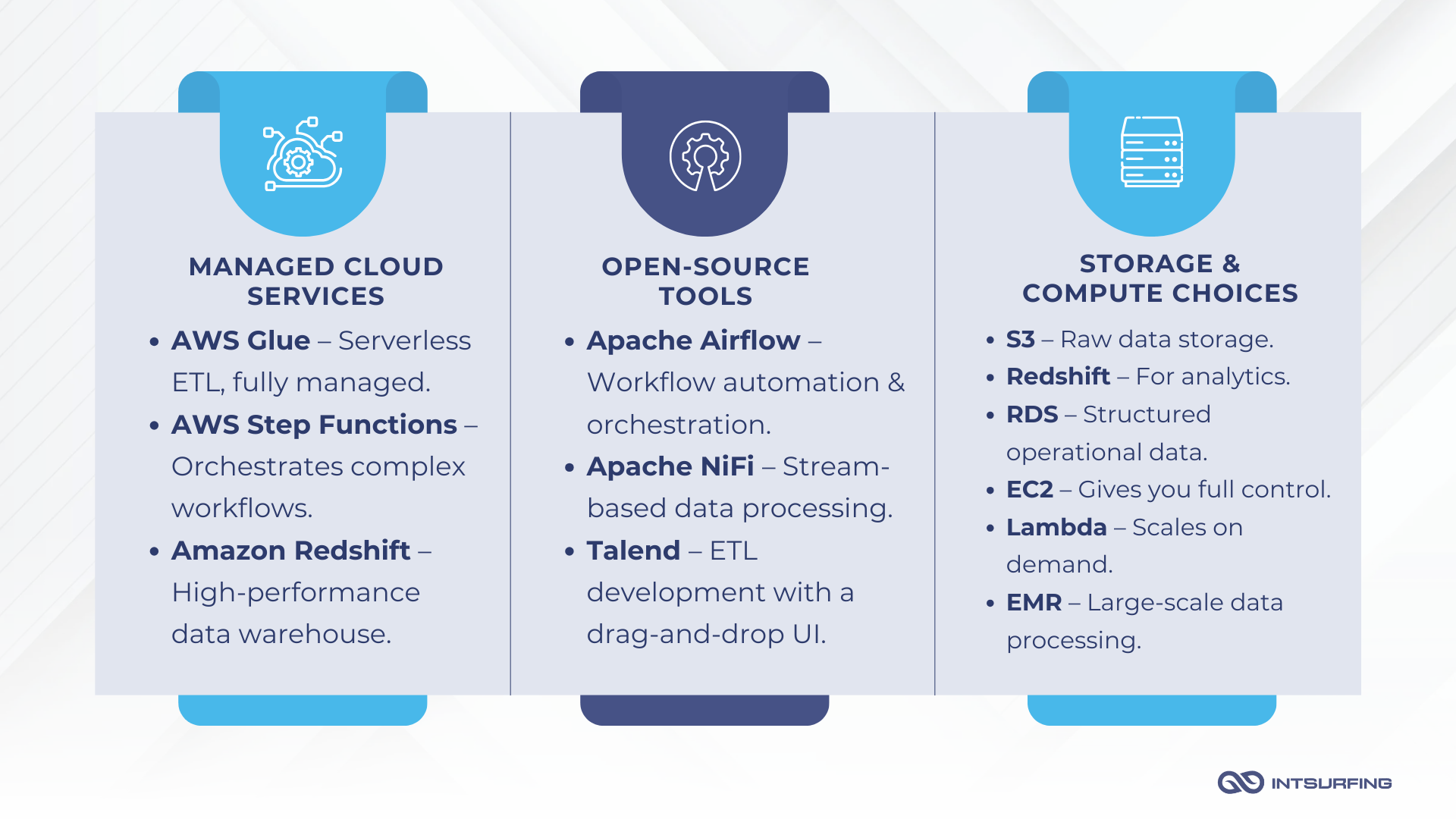

Step 2: Choose the Right ETL Tools & Infrastructure

The tools and infrastructure you choose should fit your budget, performance needs, and long-term strategy.

To make the right call, you need to look at it from two angles: business and technical.

Business Considerations: What Works for You?

Think about scalability, costs, and flexibility before committing to an ETL solution.

Do you go with a fully managed service (AWS Glue, Google Dataflow) that reduces maintenance, or do you self-host an open-source solution such as Apache Airflow for better customization?

Your data volume will grow. Will your ETL pipeline handle more sources, larger datasets, and higher query loads without slowing down or requiring costly upgrades?

Going all-in on AWS Glue or Google Dataflow makes scaling easy, but it also means you’re locked into that ecosystem. Open-source alternatives give you more control and avoid platform dependency.

Technical Considerations: Picking the Right Stack

Your ETL setup defines how data flows, transforms, and delivers value. The right choice of ETL tools depends on how much control you need, how fast you need to deploy, and how well it fits your existing systems.

A managed ETL service (AWS Glue or Google Dataflow) handles scaling, maintenance, and performance tuning. It’s ideal if you need to move fast and focus on business logic instead of infrastructure. But here, customization is limited, costs scale with usage, and switching providers can be difficult.

Custom-built ETL using Apache Airflow gives full control over workflow orchestration, transformations, and infrastructure choices. It requires more setup and maintenance but avoids vendor lock-in and gives you more room to optimize costs over time.

Whichever route you take, your tools must work with your databases, BI platforms, and data warehouses without creating extra work.

Step 3: Extract Data from Sources

The goal of this step is to get data. But how you do it depends on the source.

- Databases. A direct connection to a PostgreSQL, MySQL, or MongoDB database allows structured extraction through queries or replication. You define which tables, columns, and records to pull based on timestamps, IDs, or event logs.

- APIs & Web Services. External APIs come with rate limits, authentication steps, and format constraints (JSON, XML, CSV).

- Flat Files (CSV, JSON, Parquet). Businesses often receive bulk data from vendors in S3 buckets, FTP servers, or email attachments. Automated file watchers trigger the extraction process once a new file appears, processing it on the fly.

- Data Aggregators. They consolidate information from different sources, but they don’t always provide a direct pipeline.

Let’s say you’re pulling pricing data from a market research aggregator. There are two common approaches:

- API Access: The cleanest method, but data updates depend on the provider’s refresh cycle.

- Scraping & Parsing: If API access is unavailable, you extract data from reports, tables, and dashboards using a web scraping pipeline.

Best practice: Always prefer an API if available, but be ready to build a robust extraction flow for sources that don’t provide direct access.
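For the database case, pulling records "based on timestamps" usually means incremental extraction: remember the latest timestamp you have seen (a watermark) and ask only for newer rows. Here is a minimal sketch using Python’s built-in `sqlite3` as a stand-in for a real source. The `orders` table and its columns are hypothetical, and the comparison assumes timestamps are stored as ISO 8601 strings, which sort chronologically.

```python
import sqlite3


def extract_since(conn: sqlite3.Connection, watermark: str) -> tuple[list[tuple], str]:
    """Pull rows changed after `watermark` and return them with the new watermark."""
    rows = conn.execute(
        "SELECT id, amount, updated_at FROM orders "
        "WHERE updated_at > ? ORDER BY updated_at",
        (watermark,),
    ).fetchall()
    # If nothing changed, keep the old watermark so the next run starts from the same point.
    new_watermark = rows[-1][2] if rows else watermark
    return rows, new_watermark


# In-memory stand-in for a source database (hypothetical schema and data).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL, updated_at TEXT)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [
        (1, 10.0, "2024-01-01T00:00:00"),
        (2, 25.5, "2024-01-02T00:00:00"),
        (3, 7.25, "2024-01-03T00:00:00"),
    ],
)

# Only orders 2 and 3 are newer than the stored watermark.
rows, watermark = extract_since(conn, "2024-01-01T00:00:00")
```

Store the returned watermark only after the batch has been processed successfully. That way a failed run simply re-extracts the same rows next time.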

Handling Failures: When Data Sources Let You Down

Let’s be real—data sources aren’t always reliable. APIs can go down, network timeouts happen, and files may arrive late or be incomplete. If your ETL pipeline doesn’t have a plan for this, you’re in trouble.

First, don’t panic—retry. When an API fails, try again. But don’t hammer it with endless requests. Use exponential backoff, meaning each retry waits a little longer. If it’s still down after multiple tries, log it, flag it, and move on.
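Here is a minimal sketch of that retry logic. The function name, the default delays, and the choice to retry only on `ConnectionError` and `TimeoutError` are assumptions for illustration. Adjust them to whatever errors your client library actually raises.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    fetch: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `fetch` until it succeeds or `max_attempts` is exhausted."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fetch()
        except (ConnectionError, TimeoutError):
            if attempt == max_attempts:
                # Out of retries: surface the error so the caller can log and flag it.
                raise
            # The delay doubles on each attempt (1s, 2s, 4s, ...), capped at max_delay.
            delay = min(base_delay * 2 ** (attempt - 1), max_delay)
            # A little random jitter keeps many clients from retrying in lockstep.
            sleep(delay + random.uniform(0, delay * 0.1))
    raise ValueError("max_attempts must be at least 1")
```

The `sleep` parameter exists mainly so you can pass a fake in tests instead of actually waiting.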

Second, have a backup plan. If fresh data isn’t available, what’s the next best thing? Should you use the last known dataset or pause everything until new data arrives? Define this upfront—because guessing in the moment won’t work.
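Defining it upfront can be as simple as encoding the rule in one function. The sketch below assumes a hypothetical policy: use fresh data if you have it, fall back to the last known dataset if it is not too old, and otherwise pause. The function name and the staleness limit are illustrative.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def choose_dataset(
    fresh: Optional[list],
    cached: Optional[list],
    cached_at: Optional[datetime],
    max_staleness: timedelta,
    now: Optional[datetime] = None,
) -> tuple[str, list]:
    """Return (decision, data): use fresh data, fall back to the cache, or pause."""
    now = now or datetime.now(timezone.utc)
    if fresh is not None:
        return "fresh", fresh
    if cached is not None and cached_at is not None and now - cached_at <= max_staleness:
        return "fallback", cached
    # No usable data: tell the pipeline to pause rather than silently load nothing.
    return "pause", []
```

Returning the decision alongside the data lets downstream steps log or label results that were built on a fallback.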

And finally, double-check everything. Missing columns, half-filled records, broken formats—they sneak in more often than you’d think. Before your pipeline moves data forward, run integrity checks.
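A basic integrity check might look like the sketch below. The required fields are a hypothetical schema. In practice you would derive them from your own data contract.

```python
REQUIRED_FIELDS = {"id", "price", "currency", "updated_at"}  # hypothetical schema


def validate_record(record: dict) -> list[str]:
    """Return the problems found in one record; an empty list means it passed."""
    problems = []
    missing = REQUIRED_FIELDS - record.keys()
    if missing:
        problems.append(f"missing fields: {sorted(missing)}")
    for name in sorted(REQUIRED_FIELDS & record.keys()):
        if record[name] in (None, ""):
            problems.append(f"empty value for {name}")
    price = record.get("price")
    if price not in (None, "") and not isinstance(price, (int, float)):
        problems.append("price is not numeric")
    return problems


def split_valid(records: list[dict]) -> tuple[list[dict], list[tuple[dict, list[str]]]]:
    """Separate clean records from rejected ones, keeping the reasons for rejection."""
    valid, rejected = [], []
    for record in records:
        problems = validate_record(record)
        if problems:
            rejected.append((record, problems))
        else:
            valid.append(record)
    return valid, rejected
```

Keeping the rejected records together with their reasons gives you something concrete to send back to the data provider.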

Failures will happen. What matters is how your ETL pipeline handles them.

Step 4: Transform Data for Business Use

Before your data can drive decisions, it needs cleaning, structuring, and optimization. That is what this step is for.

Business Needs: Making Data Work for You

Messy data leads to bad insights. You don’t want that. That’s why standardization is the first step. Column names, timestamps, currencies—everything needs to follow a single format. Otherwise, reports break, dashboards show misleading trends, and machine learning models fail.
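To make this concrete, here is a small standardization sketch in plain Python. The target conventions (snake_case column names, UTC ISO 8601 timestamps, upper-case currency codes) and the field names `updated_at` and `currency` are assumptions for the example. It also assumes that timestamps without a timezone are already in UTC.

```python
import re
from datetime import datetime, timezone


def to_snake_case(name: str) -> str:
    """Turn names like 'Order ID' or 'unitPrice' into 'order_id' and 'unit_price'."""
    name = re.sub(r"(?<=[a-z0-9])(?=[A-Z])", "_", name.strip())
    return re.sub(r"[^0-9a-zA-Z]+", "_", name).strip("_").lower()


def to_utc_iso(value: str) -> str:
    """Parse an ISO 8601 timestamp and return it in UTC; naive values are assumed UTC."""
    parsed = datetime.fromisoformat(value)
    if parsed.tzinfo is None:
        parsed = parsed.replace(tzinfo=timezone.utc)
    return parsed.astimezone(timezone.utc).isoformat()


def standardize(record: dict) -> dict:
    """Apply the naming, timestamp, and currency conventions to one record."""
    clean = {to_snake_case(key): value for key, value in record.items()}
    if "updated_at" in clean:
        clean["updated_at"] = to_utc_iso(clean["updated_at"])
    if "currency" in clean:
        clean["currency"] = clean["currency"].strip().upper()
    return clean
```

For example, `standardize({"Order ID": 7, "Updated At": "2024-03-01T12:00:00", "Currency": " eur "})` yields keys `order_id`, `updated_at`, and `currency`, with the timestamp marked as UTC and the currency as `EUR`.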

Business rules come next. If you’re working with global data, currency values need to be converted. If you’re aggregating sales numbers, do you need totals by day, week, or region? These rules must be baked into your ETL logic so data is processed exactly how your business needs it.
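As an illustration, the two rules mentioned here (convert currencies, then aggregate) could be sketched like this. The exchange rates are made-up fixed values and the sales records are sample data. A real pipeline would load rates from a trusted source for the relevant date.

```python
from collections import defaultdict
from decimal import Decimal

# Hypothetical fixed rates to USD, for illustration only.
RATES_TO_USD = {"USD": Decimal("1"), "EUR": Decimal("1.10"), "GBP": Decimal("1.25")}


def to_usd(amount: str, currency: str) -> Decimal:
    """Convert an amount to USD, rounded to cents. Raises KeyError for unknown currencies."""
    return (Decimal(amount) * RATES_TO_USD[currency]).quantize(Decimal("0.01"))


def totals_by(sales: list[dict], *keys: str) -> dict[tuple, Decimal]:
    """Sum USD sales grouped by the given keys, e.g. ('day',) or ('day', 'region')."""
    totals: dict[tuple, Decimal] = defaultdict(Decimal)
    for sale in sales:
        group = tuple(sale[key] for key in keys)
        totals[group] += to_usd(sale["amount"], sale["currency"])
    return dict(totals)


sales = [
    {"day": "2024-03-01", "region": "EU", "amount": "100.00", "currency": "EUR"},
    {"day": "2024-03-01", "region": "EU", "amount": "50.00", "currency": "EUR"},
    {"day": "2024-03-01", "region": "UK", "amount": "80.00", "currency": "GBP"},
    {"day": "2024-03-02", "region": "US", "amount": "20.00", "currency": "USD"},
]
daily = totals_by(sales, "day")
by_region = totals_by(sales, "day", "region")
```

Using `Decimal` instead of `float` avoids rounding surprises when money is summed across many rows.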

Duplicate records? They inflate results. Anomalies? They skew trends. Cleaning up data before it moves downstream prevents costly errors later.
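Both cleanups can be sketched in a few lines. The deduplication rule here (keep the last record per `id`) and the outlier test (a modified z-score based on the median absolute deviation, with the commonly used 3.5 cutoff) are choices made for this example, not the only reasonable ones.

```python
from statistics import median


def deduplicate(records: list[dict], key: str = "id") -> list[dict]:
    """Keep the last record seen for each key, in order of first appearance."""
    latest: dict = {}
    for record in records:
        latest[record[key]] = record
    return list(latest.values())


def flag_outliers(values: list[float], threshold: float = 3.5) -> list[bool]:
    """Flag values whose modified z-score exceeds `threshold`. Expects a non-empty list."""
    center = median(values)
    mad = median(abs(v - center) for v in values)
    if mad == 0:
        # More than half the values are identical, so this test cannot rank outliers.
        return [False] * len(values)
    return [abs(0.6745 * (v - center) / mad) > threshold for v in values]
```

With daily prices of `[10, 11, 9, 10, 12, 500]`, only the last value is flagged. Whether a flagged value gets dropped, capped, or sent for review is a business decision.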

Technical Implementation: Turning Rules into Code

Now, onto the heavy lifting—applying these transformations at scale.

For large datasets, AWS Glue with PySpark is a solid choice. It processes transformations in parallel, handling everything from format standardization to currency conversion.

To keep schemas consistent, AWS Glue DataBrew can help. Its data profiling surfaces mismatched formats, missing values, and outliers, so bad data is less likely to slip through.

Performance also matters. Partitioning speeds up queries, indexing improves lookups, and caching cuts down redundant processing. These small optimizations make a big difference when handling large volumes of data.
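The same ideas can be shown at toy scale in plain Python. In the sketch below, `region_for` stands in for an expensive lookup and the country-to-region mapping is invented. Production systems would rely on the partitioning and caching features of the warehouse or processing engine instead.

```python
from collections import defaultdict
from functools import lru_cache


@lru_cache(maxsize=1024)
def region_for(country_code: str) -> str:
    """Stand-in for an expensive lookup, such as a reference-table query."""
    return {"DE": "EU", "FR": "EU", "US": "NA"}.get(country_code, "OTHER")


def partition_by_day(records: list[dict]) -> dict[str, list[dict]]:
    """Group records by the date part of an ISO timestamp so each day can be handled alone."""
    partitions: dict[str, list[dict]] = defaultdict(list)
    for record in records:
        partitions[record["updated_at"][:10]].append(record)
    return dict(partitions)
```

Calling `region_for("DE")` a thousand times performs the lookup once, and a query for a single day only has to touch that day’s partition.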

Step 5: Load Data into Target Systems

Now that your data is clean and structured, it’s time for the final step—loading it into the right system for analysis, reporting, or operational use. But here’s the thing: not all target systems are the same. Where you load data depends on how it will be used, how often it needs updates, and how much you want to spend.

| Target System | Best For | Example Services |
|---|---|---|
| Data Warehouses | Analytics, BI, complex queries | Amazon Redshift, Google BigQuery, Snowflake |
| Data Lakes | Storing raw & semi-structured data for later use | AWS S3, Azure Data Lake, Google Cloud Storage |
| Relational Databases | Operational workloads, structured data storage | AWS RDS (PostgreSQL, MySQL), Azure SQL |
| Streaming Systems | Real-time data processing & event-driven apps | Apache Kafka, AWS Kinesis, Google Pub/Sub |

Some businesses use a mix of these—storing raw data in a lake, processing it in a warehouse, and sending key insights to operational databases. What matters is choosing the setup that supports your goals without overcomplicating things.
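Whichever target you pick, it helps if the load step can be re-run safely. Here is a minimal sketch of an idempotent load into a relational table, using Python’s built-in `sqlite3` as a stand-in. The `prices` table is hypothetical, and the `ON CONFLICT ... DO UPDATE` syntax assumes SQLite 3.24 or newer. Other databases have their own upsert or merge syntax.

```python
import sqlite3


def load_prices(conn: sqlite3.Connection, rows: list[tuple[int, float, str]]) -> None:
    """Upsert rows so that re-running the same load does not create duplicates."""
    with conn:  # commits on success, rolls back if any statement fails
        conn.executemany(
            "INSERT INTO prices (id, price, updated_at) VALUES (?, ?, ?) "
            "ON CONFLICT(id) DO UPDATE SET "
            "price = excluded.price, updated_at = excluded.updated_at",
            rows,
        )


# In-memory stand-in for the target database (hypothetical schema).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE prices (id INTEGER PRIMARY KEY, price REAL, updated_at TEXT)")
```

Loading the same batch twice leaves the table unchanged, and a later batch with a new price for an existing `id` updates that row in place.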

How Often Should Data Be Updated?

Alright, you’ve picked your system. Now comes the next big question of ETL design and development: How often should data be loaded?

It depends on how fast you need it.

- Real-time updates make sense for fraud detection, dynamic pricing, and live dashboards. Kafka and Kinesis are built for this.

- Batch processing (daily, weekly, or monthly) is better for reporting, trend analysis, and workloads where slight delays don’t hurt.

- Micro-batches offer a middle ground, pushing small data updates every few minutes instead of processing everything at once.

Whatever you choose, find the balance between freshness and cost. Updating too frequently can overload your system, while updating too slowly can leave you with outdated insights.
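To illustrate the micro-batch idea, here is a small helper that groups an incoming stream of records into fixed-size chunks. It only shows the size-based half of the pattern. A real micro-batch job would typically also flush on a timer, and the function name is an assumption.

```python
from itertools import islice
from typing import Iterable, Iterator


def micro_batches(records: Iterable[dict], size: int) -> Iterator[list[dict]]:
    """Yield consecutive lists of at most `size` records from any iterable."""
    if size < 1:
        raise ValueError("size must be at least 1")
    iterator = iter(records)
    # islice pulls up to `size` items at a time; an empty list ends the loop.
    while batch := list(islice(iterator, size)):
        yield batch
```

Because it works on any iterable, the same helper can sit in front of a file reader, a database cursor, or a message consumer.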

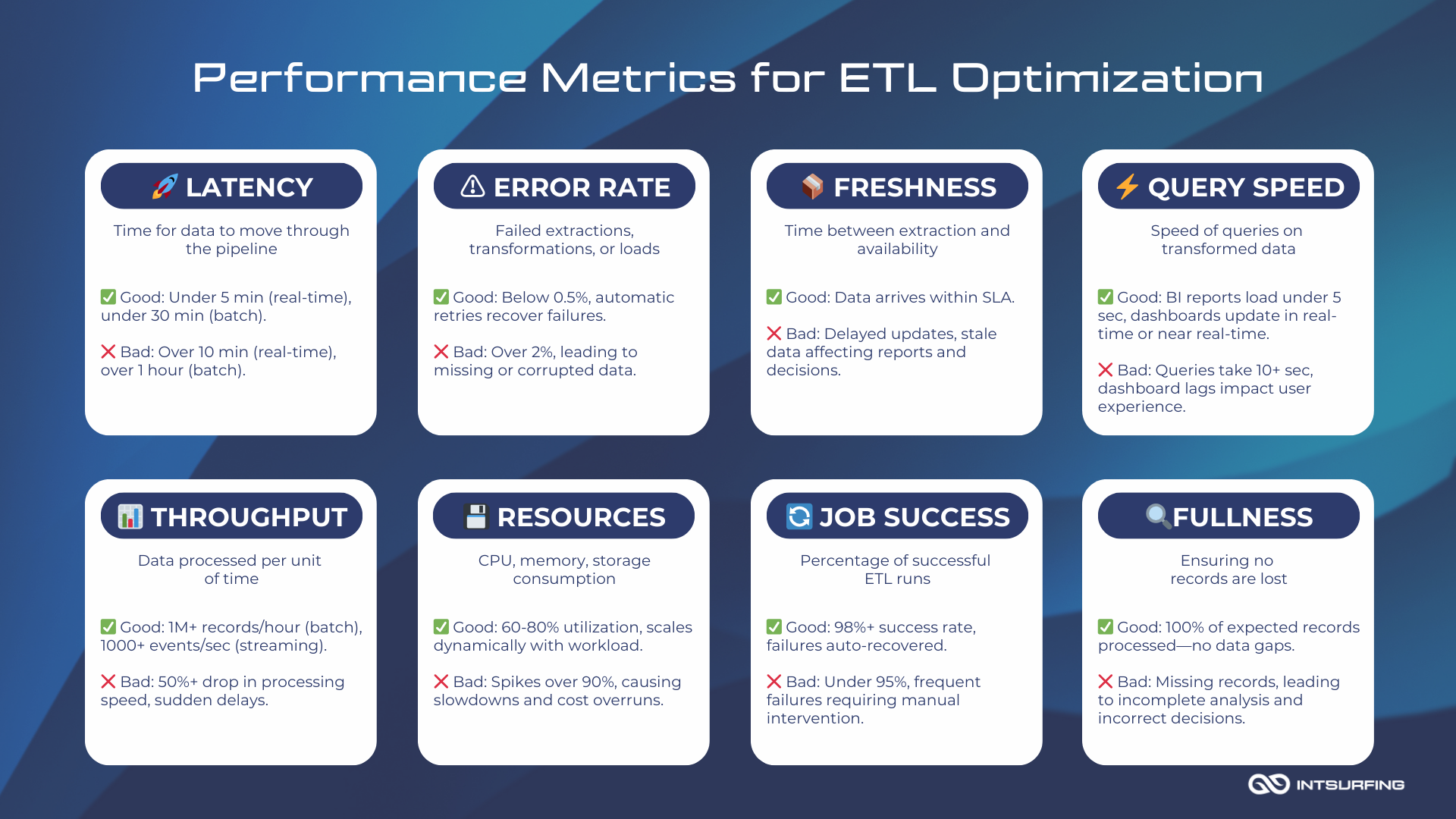

Step 6: Monitor & Optimize the ETL Pipeline

Building an ETL pipeline is one thing. Keeping it fast, reliable, and cost-effective? That’s an ongoing job. Without proper monitoring, failures go unnoticed, slowdowns creep in, and before you know it, your data isn’t as fresh or accurate as you thought.

So, how do you keep your pipeline in check? Track the right performance metrics and optimize based on what they reveal.

How to Define the Right Metrics for Your ETL

Metrics are useful only if they match your business needs and technical setup. So, how do you decide what to monitor?

- Consider the business impact. What happens if data is delayed? If reports need to be real-time, latency matters most. If dashboards run on daily updates, batch throughput is key.

- Look at past ETL challenges. Where have things gone wrong before? Frequent API timeouts? Transformation errors? Storage bottlenecks? Track the metrics that help prevent those issues.

- Balance detail with simplicity. Too many metrics = noise. Focus on 3-5 key ones that show clear trends and signal problems early.

- Automate alerts. Monitoring is useless if no one acts on it. Set up threshold-based alerts so you’re notified before things break.
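A threshold-based alert check can start out very simple. In the sketch below, the metric names and limits are hypothetical examples, and the function only returns messages. Wiring them to email, chat, or a paging tool is left to your setup.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    metric: str
    limit: float
    direction: str  # "above" alerts when value > limit, "below" when value < limit


# Hypothetical thresholds; tune them to your own SLAs.
THRESHOLDS = [
    Threshold("latency_seconds", 300, "above"),
    Threshold("error_rate", 0.01, "above"),
    Threshold("rows_loaded", 1000, "below"),
]


def check_run(metrics: dict[str, float]) -> list[str]:
    """Compare one pipeline run's metrics to the thresholds and return alert messages."""
    alerts = []
    for t in THRESHOLDS:
        value = metrics.get(t.metric)
        if value is None:
            # A metric that stops reporting is itself a warning sign.
            alerts.append(f"{t.metric}: no value reported")
        elif (t.direction == "above" and value > t.limit) or (
            t.direction == "below" and value < t.limit
        ):
            alerts.append(f"{t.metric}={value} is {t.direction} limit {t.limit}")
    return alerts
```

Three thresholds are enough to catch slow runs, failing runs, and runs that quietly loaded almost nothing.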

Conclusion

Each step in ETL development serves a purpose. You define requirements to align with your goals, choose the right tools to handle scale, extract data reliably, transform it into something useful, and load it into the right system. Then, you monitor everything to keep it running. Because if one step fails, the entire pipeline suffers.