You’re deep into an ETL project, and suddenly, data discrepancies start to surface.

Reports don’t match up, and dashboards look off.

Frustrating, right?

You’re not alone.

A survey by Great Expectations found that 77% of organizations grapple with data quality issues, and 91% say these problems hurt their company’s performance.

Indeed, messy data, inefficient transformations, and system bottlenecks can cripple even the best-engineered ETL pipelines.

In this article, we’ll break down common ETL challenges developers face:

- Scalability and performance bottlenecks

- Workflow orchestration & tooling issues

- Accuracy and consistency of data

- Schema evolution & data quality management

- Challenges with debugging and development

More importantly, we’ll look at practical fixes that actually work.

1. Scalability & Performance Challenges in ETL Processes

You’ve probably seen it before.

Batch jobs slow down as data grows. Memory-hungry transformations push Spark to the edge. And cluster scaling lags behind demand.

Performance bottlenecks don’t just hurt job runtimes—they threaten SLAs. Late data delays reports, disrupts downstream analytics, and forces teams into reactive firefighting instead of actual engineering.

With the right tuning, better job orchestration, and a few strategic shifts in infrastructure, you can scale ETL workloads. Let’s break down the most common performance issues and how to fix them.

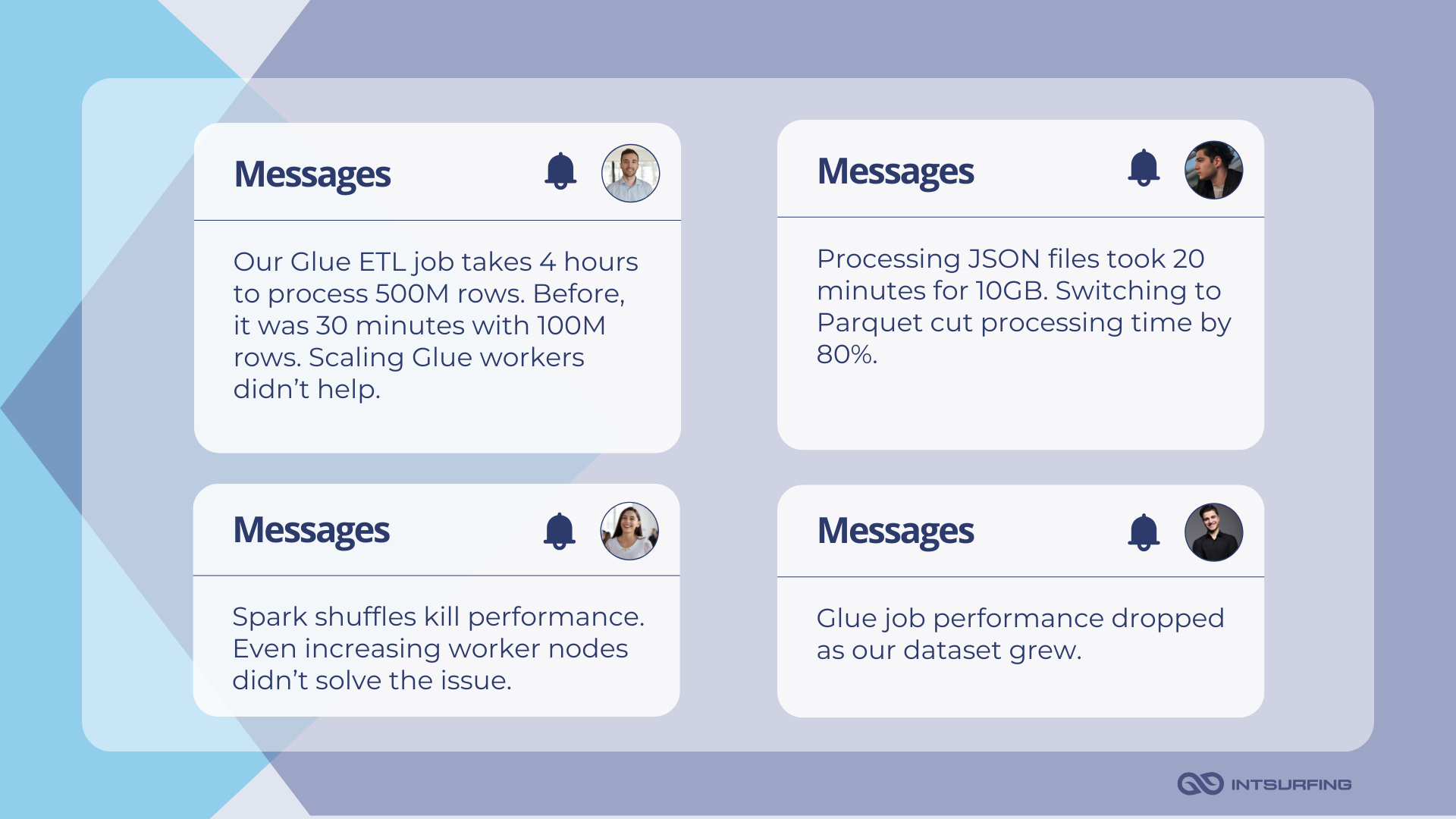

ETL Jobs Slow Down as Data Volume Grows

As datasets grow from millions to billions of records, performance bottlenecks become inevitable. Spark jobs max out CPU, disk I/O chokes, and shuffle operations bring clusters to a halt.

These slowdowns usually come down to a few issues:

- Poor partitioning. Example: A Glue job reading 1 TB of JSON files in S3 but using only 2 partitions—each worker is overwhelmed.

- Excessive shuffle operations. Example: Join operations on large datasets without bucketing or broadcast joins.

- Inefficient storage formats. Example: Using CSV/JSON instead of Parquet or ORC, which are columnar and optimized for Spark.

- Lack of incremental processing. Example: Processing the entire dataset each time instead of only new or changed records.

We hear about these issues from our clients frequently.

How do you fix these performance challenges?

- Optimize partitioning. Instead of scanning an entire dataset, partition data in S3 by year/month/day so jobs read only the prefixes they need. Within Spark, adjust the partition count to avoid overloading single tasks (`df.repartition(1000)`).

- Reduce shuffle operations. Use broadcast joins for small tables to prevent expensive shuffles:

```python
from pyspark.sql.functions import broadcast

# Copy the small table to every executor so the large table is joined without a shuffle
df_large = df_large.join(broadcast(df_small), "id")
```

For large joins, bucketing can help:

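```python
# Bucket both join inputs on the join key with the same bucket count; bucketBy requires saveAsTable
df.write.bucketBy(100, "customer_id").saveAsTable("customers_bucketed")
```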

- Switch to columnar formats. Converting JSON or CSV into Parquet before processing improves performance:

```python
df = spark.read.json("s3://my-bucket/raw-data/")  # placeholder input path
df.write.mode("overwrite").parquet("s3://my-bucket/processed-data/")
```

- Enable incremental processing. Use AWS Glue job bookmarks to process only new data instead of rescanning all historical data.
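Partition pruning and incremental processing both come down to one decision: which slices of data does this run actually need? The plain-Python sketch below models that decision, assuming Hive-style `year=/month=/day=` prefixes and a stored "last processed date" that stands in for a Glue job bookmark (real bookmarks track state per source and work differently). `partition_date`, `partitions_to_process`, and the sample prefixes are hypothetical.

```python
from __future__ import annotations

from datetime import date

def partition_date(prefix: str) -> date:
    """Parse a Hive-style prefix such as 'year=2024/month=03/day=07/' into a date."""
    parts = dict(p.split("=", 1) for p in prefix.strip("/").split("/"))
    return date(int(parts["year"]), int(parts["month"]), int(parts["day"]))

def partitions_to_process(prefixes: list[str], last_processed: date | None) -> list[str]:
    """Keep only partitions newer than the bookmark, oldest first."""
    selected = [p for p in prefixes
                if last_processed is None or partition_date(p) > last_processed]
    return sorted(selected, key=partition_date)

prefixes = [
    "year=2024/month=03/day=05/",
    "year=2024/month=03/day=07/",
    "year=2024/month=03/day=06/",
]
todo = partitions_to_process(prefixes, last_processed=date(2024, 3, 5))
print(todo)  # ['year=2024/month=03/day=06/', 'year=2024/month=03/day=07/']

# After a successful run, persist the newest processed date as the next bookmark
new_bookmark = partition_date(todo[-1]) if todo else None
```

In Spark itself, the same effect comes from filtering on partition columns (for example `.where("year = 2024")` on a Hive-partitioned dataset), so only matching prefixes are read.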

High Memory Usage & Long Cluster Spin-Up Times

“Glue Spark cluster takes 10 minutes just to start. It’s killing our SLAs.”

“Getting executor lost errors when joining two 1-billion-row datasets. Not sure how to optimize memory usage.”

“Hitting the 16GB executor memory limit on Glue – job keeps failing on OOM errors.”

If you’ve ever waited for Glue to spin up a cluster, only to have the job crash on an out-of-memory (OOM) error, you know how frustrating this can be. Clusters take ages to start, jobs choke on large joins, and Spark executors vanish mid-process. Instead of processing data, you’re stuck tuning configurations and restarting failed jobs.

This happens for a few reasons.

First, AWS Glue launches a fresh Spark cluster every time a job runs. This cold start delay adds 5 to 15 minutes of dead time before the actual processing even begins. For workloads that need frequent runs, this wait time kills efficiency.

Second, memory allocation. Spark uses JVM-based memory management, which is powerful but tricky. Without proper tuning, executors either hoard memory and trigger garbage collection storms or run out of heap space entirely, leading to OOM crashes.

Third, large-scale joins make things worse. Shuffle data grows uncontrollably, spilling to disk and turning a fast in-memory operation into a sluggish disk-bound process.

So, how do you fix these issues?

- Reduce cluster spin-up time. Instead of waiting for Glue to launch a fresh cluster, use Glue Streaming Jobs for near real-time processing or Glue Interactive Sessions to keep clusters warm between runs. In a Glue interactive session notebook, configure the session with cell magics:

```
%worker_type G.1X
%number_of_workers 10
```

- Optimize Spark memory usage. Increasing executor memory (`spark.executor.memory` to 8 GB or more) helps, but managing shuffle spills is just as critical. For large shuffles, raising `spark.sql.shuffle.partitions` above its default of 200 keeps each shuffle partition small enough to fit in executor memory instead of spilling to disk.

For bigger workloads, switching from G.1X workers to G.2X or G.4X doubles or quadruples the memory per worker. If Glue still struggles, offloading heavy transformations to Amazon Redshift Spectrum can shift the load from Spark to a more efficient compute layer.
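To choose a value for `spark.sql.shuffle.partitions` instead of guessing, you can size it from the expected shuffle volume. The helper below is a rough sketch that assumes a target of about 128 MB per shuffle partition (a common rule of thumb, not a Spark or Glue requirement) and rounds up to a multiple of the cluster's core count; the cluster in the example is hypothetical.

```python
import math

def shuffle_partitions(shuffle_bytes: int, total_cores: int, target_mb: int = 128) -> int:
    """Estimate spark.sql.shuffle.partitions so each partition holds about target_mb.

    The result is rounded up to a multiple of total_cores so every core
    gets a task in each wave.
    """
    target_bytes = target_mb * 1024 * 1024
    by_size = math.ceil(shuffle_bytes / target_bytes)
    waves = math.ceil(by_size / total_cores)
    return max(total_cores, waves * total_cores)

# 100 GB of shuffle data on a hypothetical cluster with 40 executor cores
n = shuffle_partitions(100 * 1024**3, total_cores=40)
print(n)  # 800
# spark.conf.set("spark.sql.shuffle.partitions", n)
```

The shuffle size for a previous run can be read from the stage metrics in the Spark UI.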

Inefficient Spark Jobs in AWS Glue

AWS Glue promises serverless Spark for ETL, but reality often falls short. Jobs that should be quick end up twice as slow as raw Spark on EMR. Schema mismatches from Glue Crawlers lead to broken pipelines. GlueContext, meant to simplify data handling, adds unnecessary abstraction that drags performance down.

Why do these ETL implementation challenges happen?

- AWS Glue wraps Spark with extra overhead – the GlueContext API adds performance penalties.

- Poor partition handling – Glue might scan full tables instead of only required partitions.

- Lazy transformations – Spark transformations are only triggered when actions are performed, sometimes leading to unexpected behavior.

These adjustments make Glue run like it should—fast, scalable, and efficient for real-world ETL:

- Avoid using AWS GlueContext where possible. Instead of:

```python
glueContext.create_dynamic_frame.from_catalog(database="db", table_name="table")
```

use raw Spark reads for better performance:

```python
spark.read.parquet("s3://my-bucket/")
```

- Manually define schemas instead of relying on Glue Crawlers. Glue Crawlers often misclassify types, causing processing failures down the line. Explicitly defining schemas prevents this:

```python
from pyspark.sql.types import IntegerType, StringType, StructField, StructType

schema = StructType([StructField("id", IntegerType(), True), StructField("name", StringType(), True)])
df = spark.read.schema(schema).parquet("s3://my-bucket/")
```

For complex transformations, consider offloading heavy joins and aggregations to Amazon Redshift instead of Spark. Glue is great for ETL, but it’s not always the best tool for large-scale transformations. Job Bookmarks can also help—enabling them ensures your job processes only new data instead of scanning everything on each run.

2. Workflow Orchestration & Tooling Complexity

In ETL pipelines, workflow orchestration is a major challenge. As a developer, you need to manage dependencies, scheduling, error handling, and recovery across multiple data processing jobs. AWS provides several options—Glue Workflows, Apache Airflow (MWAA), Step Functions, and third-party tools like Dagster—but each has trade-offs.

Choosing Between Glue Workflows, Airflow, Step Functions, and Dagster

AWS does not offer a single best-in-class orchestrator for all ETL scenarios, so you have to choose among Glue Workflows, Airflow (MWAA), Step Functions, or a third-party tool like Dagster.

So, which one should you use?

| Feature | AWS Glue Workflows | Apache Airflow (MWAA) | AWS Step Functions | Dagster |
|---|---|---|---|---|
| Best for | Serverless ETL job chaining | Complex DAG-based workflows | Lightweight automation | Data-centric pipelines |
| Complexity Handling | Low | High | Medium | Medium |
| Flexibility | Low | High | Medium | High |
| AWS-Native Integration | ✅ Full AWS integration | ✅ Good AWS integration | ✅ Full AWS integration | ❌ Limited |
| State Handling | Basic | Advanced | Advanced | Medium |
| Error Handling & Retries | Basic | ✅ Best-in-class | Limited retry control | Advanced |
| Cold Start Time | ✅ Fast (<30s) | ❌ Slow (3-5 min) | ✅ Instant | ✅ Fast |
| Cost | $$ | $$$ (high for always-on) | $$ (pay-per-use) | $$ |

Choosing the right orchestrator depends on your use case and constraints. If your goal is fast, serverless orchestration, Glue Workflows or Step Functions might be the best fit. If you need fine-grained DAG control, Airflow or Dagster could be the way to go.

Multi-Step Job Dependencies Leading to Failures

ETL workflows involve multiple dependent jobs that must run in sequence or parallel. Failures in one step can cause cascading issues.

For example:

- Job A → Job B → Job C (Job B fails → Job C never runs).

- Parallel job execution where one job completes, but another hangs indefinitely.

- Jobs dependent on external APIs (e.g., pulling from an external data source), which time out unpredictably.

This may happen for a few reasons.

Many AWS-based orchestration tools don’t handle dependencies well by default. Glue Workflows, for example, lack built-in dependency tracking, so jobs can trigger even when upstream data is missing. Step Functions allow Lambda retries, but they lack advanced failure handling for multi-step workflows.

Airflow DAGs are more flexible but introduce their own problems. Transient failures, like slow S3 reads or temporary Redshift connection issues, can cause DAG failures that shouldn’t have happened in the first place.

Without data-aware dependency tracking, pipelines often run on schedule—even if the expected data never arrived. This leads to incomplete datasets, broken transformations, and hours of debugging.

Here is how to make things work:

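- Use Airflow’s `ExternalTaskSensor` to enforce cross-DAG dependencies.

```python
from airflow.sensors.external_task import ExternalTaskSensor

# Inside a DAG definition: wait until extract_task in source_dag has succeeded.
# Both DAGs are assumed to share a schedule; otherwise set execution_delta.
wait_for_upstream = ExternalTaskSensor(
    task_id="wait_for_upstream_job",
    external_dag_id="source_dag",
    external_task_id="extract_task",
    mode="reschedule",  # release the worker slot between checks
)
```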

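- For Step Functions, use the `.waitForTaskToken` integration pattern to implement async job tracking: the state pauses until your job calls `SendTaskSuccess` or `SendTaskFailure` with the task token.

- For Glue, write job state outputs to DynamoDB and check completion status before launching downstream jobs.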

- Leverage EventBridge to trigger jobs based on actual data availability instead of fixed schedules.
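The DynamoDB and EventBridge suggestions share one rule: a downstream job starts only when every upstream job has reported success for the same run. The sketch below shows that gate in plain Python, with a dict standing in for a hypothetical DynamoDB job-state table keyed by `(job_name, run_date)`; in production the same reads and writes would go through boto3, and `record_status` / `ready_to_run` are illustrative names.

```python
from typing import Dict, Iterable, Tuple

# Stand-in for a DynamoDB table: (job_name, run_date) -> status
JobState = Dict[Tuple[str, str], str]

def record_status(state: JobState, job: str, run_date: str, status: str) -> None:
    """Called by each job at the end of its run, e.g. with 'SUCCEEDED' or 'FAILED'."""
    state[(job, run_date)] = status

def ready_to_run(state: JobState, upstream: Iterable[str], run_date: str) -> bool:
    """True only if every upstream job succeeded for this run_date."""
    return all(state.get((job, run_date)) == "SUCCEEDED" for job in upstream)

state: JobState = {}
record_status(state, "job_a", "2024-03-07", "SUCCEEDED")
print(ready_to_run(state, ["job_a", "job_b"], "2024-03-07"))  # False: job_b has not reported
record_status(state, "job_b", "2024-03-07", "SUCCEEDED")
print(ready_to_run(state, ["job_a", "job_b"], "2024-03-07"))  # True
```

Keying on the run date, not just the job name, is what keeps yesterday's success from unblocking today's run.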

Limited Retry Mechanisms and Visibility into Failures

ETL jobs fail for countless reasons: network timeouts, API throttling, transient storage issues, or bad data. But AWS-native tools don’t always handle failures well. Glue retries only when the Spark job itself fails, ignoring data errors. Step Functions allow basic retries but lack dynamic retry control. Airflow retries tasks, but with a fixed retry delay the retry often runs long after a transient issue has already cleared, stretching pipeline latency.

You may have already dealt with these things:

- My Glue job fails on an S3 ThrottlingException, but Glue doesn’t retry.

- Airflow jobs fail due to API timeouts, but when they retry 30 minutes later, the issue is gone.

- Step Functions lack fine-grained retry control—I can’t dynamically adjust wait times based on failure type.

These issues stem from AWS’s default retry behavior. It doesn’t distinguish between system failures and bad data. A Glue job that crashes due to missing partitions isn’t the same as one that fails due to temporary network lag—but AWS treats them the same.

Lack of visibility is the other major challenge. Unless you configure CloudWatch alarms yourself, failures go unnoticed until someone checks the logs or a critical report goes missing. When jobs span multiple AWS services, pinpointing where things broke becomes a nightmare.

To remedy these challenges in ETL, consider these steps:

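- Use Airflow’s `retry_delay` and `retry_exponential_backoff` to optimize transient failure handling.

```python
from datetime import timedelta

from airflow.operators.python import PythonOperator

task = PythonOperator(
    task_id="my_task",
    python_callable=my_function,  # your task logic
    retries=5,
    retry_delay=timedelta(minutes=2),
    retry_exponential_backoff=True,  # the delay grows with each retry
    max_retry_delay=timedelta(minutes=30),  # cap on the backoff
)
```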

- For AWS Glue, wrap jobs in a retry-aware Lambda function.

- For Step Functions, add a `Retry` policy with exponential backoff to the task state (and a `Catch` field to route exhausted retries to a failure handler):

```json
"Retry": [{
  "ErrorEquals": ["States.TaskFailed"],
  "IntervalSeconds": 5,
  "MaxAttempts": 3,
  "BackoffRate": 2.0
}]
```

- Implement centralized failure logging & alerting in AWS CloudWatch or Slack.
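The piece missing from default retry behavior is classification: transient errors deserve a backoff and another attempt, bad data does not. Below is a plain-Python sketch of a retry wrapper you could run inside the Lambda that starts a Glue job. `TransientError` and `run_with_retries` are hypothetical names, and the delay schedule mirrors the Step Functions `IntervalSeconds` × `BackoffRate` pattern above (5 s, 10 s, 20 s, with `max_retries` playing the role of `MaxAttempts`).

```python
import time
from typing import Callable, List, TypeVar

T = TypeVar("T")

class TransientError(Exception):
    """Hypothetical marker for retryable failures such as throttling or timeouts."""

def backoff_schedule(interval: float, rate: float, max_retries: int) -> List[float]:
    """Wait before each retry: interval * rate**n, like IntervalSeconds/BackoffRate."""
    return [interval * rate**n for n in range(max_retries)]

def run_with_retries(task: Callable[[], T], interval: float = 5, rate: float = 2.0,
                     max_retries: int = 3,
                     sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry only TransientError with exponential backoff; other errors
    (for example bad data) propagate immediately instead of being retried."""
    for delay in backoff_schedule(interval, rate, max_retries):
        try:
            return task()
        except TransientError:
            sleep(delay)
    return task()  # last attempt: any error now propagates to the caller

# Usage: a task that is throttled twice, then succeeds
attempts: List[int] = []

def flaky_task() -> str:
    attempts.append(1)
    if len(attempts) < 3:
        raise TransientError("S3 throttled")
    return "done"

waits: List[float] = []
result = run_with_retries(flaky_task, sleep=waits.append)  # record waits instead of sleeping
print(result, waits)  # done [5.0, 10.0]
```

Injecting `sleep` keeps the wrapper testable; in the Lambda you would leave the default `time.sleep` and keep the total wait below the function timeout.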

3. Data Consistency & Reliability Issues

Data drives decisions. But what happens when the data itself is unreliable?

According to Gartner, poor data quality costs companies an average of $12.9 million per year. Beyond lost revenue, messy data creates bigger problems—broken reports, bad predictions, and an ever-growing tangle of fixes and workarounds. The longer data inconsistencies go unchecked, the harder they become to clean up.

Many of these issues start at the ETL level. This section unpacks the biggest data reliability pitfalls in AWS-based ETL—and how to fix them before they break your business.

Late-Arriving Data

Traditional batch ETL jobs run on fixed schedules, processing only what’s available at that moment. Late data misses the window and gets left out of reports. AWS Glue and Spark often partition data by timestamps, so when late records arrive, they can end up in the wrong partition, making retrieval difficult.

Event-driven architectures like Kafka, Kinesis, and SQS aren’t immune either. Message delays caused by network lag, system downtime, or device connectivity issues can introduce inconsistencies across datasets.

Here is what may help to deal with these issues:

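- Use watermarking for event-time processing. Instead of finalizing results as soon as data arrives, define a watermark delay (e.g., accept events up to 24 hours late before finalizing a window). In Spark Structured Streaming (via Glue), define a watermark:

```python
# Events older than (latest event_time seen - 24 hours) may be dropped from windowed aggregations
df = df.withWatermark("event_time", "24 hours")
```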

- Late-data reconciliation with merge operations. Use Amazon Redshift `MERGE` to update existing records and insert new ones instead of inserting duplicates:

```sql
MERGE INTO target_table USING staging_table
ON target_table.id = staging_table.id
WHEN MATCHED THEN UPDATE SET value = staging_table.value
WHEN NOT MATCHED THEN INSERT (id, value) VALUES (staging_table.id, staging_table.value);
```

If using AWS Athena, handle late data by reloading specific partitions instead of reprocessing everything.

- Event-driven reprocessing with AWS Lambda + EventBridge. Instead of running ETL on a fixed schedule, trigger incremental processing when late data arrives. Enable Amazon EventBridge notifications on the S3 bucket, then create a rule that matches new objects (event pattern values must be arrays):

```json
{
  "source": ["aws.s3"],
  "detail-type": ["Object Created"],
  "detail": {"bucket": {"name": ["my-bucket"]}}
}
```

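- Use Delta Lake or Apache Hudi for upsert handling. Both support record-level upserts, so late records can update historical data without reloading everything, and both also offer time-travel queries over earlier versions.

```python
# Table name, record key, and precombine field are placeholders for your own schema
opts = {"hoodie.table.name": "events", "hoodie.datasource.write.recordkey.field": "id",
        "hoodie.datasource.write.precombine.field": "event_time", "hoodie.datasource.write.operation": "upsert"}
df.write.format("hudi").options(**opts).mode("append").save("s3://my-bucket/")
```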
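To see what a watermark actually decides, here is a plain-Python model of the rule: the watermark trails the maximum event time seen so far by the allowed delay, and records older than the watermark count as too late. This is a simplification for illustration (Spark advances the watermark per micro-batch, not per record), and `split_by_watermark` is a hypothetical helper; the "too late" bucket is where a reconciliation step such as the `MERGE` above would pick records up.

```python
from datetime import datetime, timedelta
from typing import Iterable, List, Optional, Tuple

def split_by_watermark(
    event_times: Iterable[datetime], delay: timedelta
) -> Tuple[List[datetime], List[datetime]]:
    """Split events, in arrival order, into (accepted, too_late).

    The watermark is max(event_time seen so far) - delay; an event older
    than the current watermark goes to the late bucket.
    """
    accepted: List[datetime] = []
    too_late: List[datetime] = []
    max_seen: Optional[datetime] = None
    for ts in event_times:
        if max_seen is not None and ts < max_seen - delay:
            too_late.append(ts)
        else:
            accepted.append(ts)
        max_seen = ts if max_seen is None else max(max_seen, ts)
    return accepted, too_late

arrivals = [
    datetime(2024, 3, 7, 12),  # on time
    datetime(2024, 3, 8, 14),  # moves the watermark to 2024-03-07 14:00
    datetime(2024, 3, 7, 16),  # 22 hours behind the max: still accepted
    datetime(2024, 3, 7, 9),   # older than the watermark: too late
]
ok, late = split_by_watermark(arrivals, timedelta(hours=24))
print(len(ok), len(late))  # 3 1
```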

Duplicate Records

ETL pipelines often re-run due to failures, but without proper deduplication, they may insert duplicate records. Metrics become unreliable, storage costs rise, and joins across datasets start producing incorrect results.

For example, your Glue job reruns after a failure, and now you have duplicates in your S3 data. Or your Lambda function retries on failure and processes the same message twice.

Why is this happening?

Many AWS services retry failed operations automatically. If a Glue job fails mid-execution and re-runs, it might insert duplicate rows instead of resuming cleanly. AWS Lambda’s automatic retries, when not configured properly, can write the same record multiple times.

Streaming ETL pipelines (Kinesis, Kafka) typically provide at-least-once delivery. That means the same event might be processed more than once unless the pipeline explicitly handles it. Unlike traditional databases, S3 and Glue tables don’t enforce uniqueness, leaving deduplication entirely up to developers.

As a solution, you may try these things:

- Use primary keys + upserts instead of blind inserts. Amazon Redshift does not enforce primary key constraints, so use `MERGE` instead of `INSERT` to update records without creating duplicates:

```sql
MERGE INTO customers USING updates
ON customers.id = updates.id
WHEN MATCHED THEN UPDATE SET email = updates.email
WHEN NOT MATCHED THEN INSERT (id, email) VALUES (updates.id, updates.email);
```

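In Athena, deduplicate with `ROW_NUMBER()` in a subquery before processing (a window alias such as `rn` can’t be referenced in the `WHERE` clause of the same query):

```sql
SELECT * FROM (
  SELECT *, ROW_NUMBER() OVER (PARTITION BY id ORDER BY event_time DESC) AS rn FROM events
) AS t
WHERE rn = 1;
```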

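- Deduplicate before processing in S3 pipelines. Store a manifest file with processed records or use DynamoDB to track event IDs or hashes:

```python
# `table` is a boto3 DynamoDB Table resource. get_item always returns a dict,
# so only an "Item" key means this event was already processed.
if "Item" in table.get_item(Key={"event_id": event_id}):
    return  # Skip duplicate processing
```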
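Both `MERGE` and the `ROW_NUMBER()` query apply the same rule: one row per key, keeping the latest version. The plain-Python sketch below applies that rule to a batch before it is written to S3, and derives a content hash usable as an idempotency key when events lack a natural ID. Field names (`id`, `email`, `event_time`) follow the examples above; `dedupe_latest` and `event_hash` are hypothetical helpers.

```python
import hashlib
import json
from typing import Dict, Iterable, List

def event_hash(event: dict) -> str:
    """Stable SHA-256 of an event; key order does not change the hash."""
    canonical = json.dumps(event, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

def dedupe_latest(rows: Iterable[dict], key: str = "id",
                  order_by: str = "event_time") -> List[dict]:
    """Keep one row per key, the one with the greatest order_by value
    (same result as ROW_NUMBER() OVER (... ORDER BY event_time DESC) = 1)."""
    latest: Dict[object, dict] = {}
    for row in rows:
        current = latest.get(row[key])
        if current is None or row[order_by] > current[order_by]:
            latest[row[key]] = row
    return list(latest.values())

batch = [
    {"id": 1, "email": "old@example.com", "event_time": "2024-03-07T10:00:00Z"},
    {"id": 1, "email": "new@example.com", "event_time": "2024-03-07T11:00:00Z"},
    {"id": 2, "email": "b@example.com", "event_time": "2024-03-07T09:00:00Z"},
    {"id": 2, "email": "b@example.com", "event_time": "2024-03-07T09:00:00Z"},  # retry duplicate
]
print([r["email"] for r in dedupe_latest(batch)])  # ['new@example.com', 'b@example.com']
```

Comparing `event_time` as strings works here only because every value uses the same ISO 8601 format; mixed formats should be parsed first.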

4. Schema Evolution & Data Quality Management

You expect data to change. What you don’t expect is for those changes to break everything downstream.

Maybe an upstream source adds a new column, and suddenly, your Glue transformation job starts throwing type errors. Maybe a dataset arrives with columns in a different order, and now joins and aggregations are misaligned. Or maybe a Glue Crawler misinterprets a data type, and the entire pipeline starts producing inconsistent results.

Schema evolution should be a controlled process. In this section, we’ll cover the most common schema-related challenges in AWS ETL pipelines and how to handle them.

Schema Changes Breaking Transformations

AWS ETL pipelines often assume that data structures remain stable, but real-world datasets evolve over time. When this happens, ETL transformations that depend on a fixed schema can break.

Consider a Glue job that joins customer records from two different data sources. If a new column (customer_tier) is added in one source but not in the other, the ETL job may fail because Spark cannot reconcile the mismatch. Even worse, some tools silently discard unexpected fields.

This problem is particularly evident in log processing or event-based data, where new fields are frequently added. A typical example is processing clickstream data from a marketing platform, where a JSON event structure changes. A previously simple structure like this:

```json
{
  "user_id": 123,
  "event": "click",
  "timestamp": "2024-03-07T12:00:00Z"
}
```

might evolve to:

```json
{
  "user_id": 123,
  "event": "click",
  "timestamp": "2024-03-07T12:00:00Z",
  "utm_source": "google_ads",
  "session_duration": 45
}
```

If the ETL job assumes only three fields exist, it will either fail or ignore the new fields. In AWS Glue, this can lead to silent data loss, because Glue’s DynamicFrames do not enforce strict schemas unless explicitly defined.

To mitigate these challenges, developers often:

- Use schema evolution-friendly formats, such as Avro or Apache Iceberg tables, which allow new fields without breaking queries.

- Implement schema validation layers before transformations to detect breaking changes early.
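A schema validation layer can be as simple as comparing each incoming record with an expected contract before any transformation runs. The plain-Python sketch below uses the clickstream example: it flags missing fields and type mismatches as breaking, and reports new fields (like `utm_source`) as additive changes to review rather than dropping them silently. `EXPECTED` and `check_record` are hypothetical.

```python
from typing import Dict, List

# Expected contract for the clickstream events shown above
EXPECTED: Dict[str, type] = {"user_id": int, "event": str, "timestamp": str}

def check_record(record: dict, expected: Dict[str, type] = EXPECTED) -> Dict[str, List[str]]:
    """Classify schema drift in one record.

    'breaking': missing fields or wrong types (stop before transforming).
    'additive': new fields (safe to keep, but the contract needs an update).
    """
    breaking = [f"missing:{name}" for name in expected if name not in record]
    breaking += [
        f"type:{name}" for name, typ in expected.items()
        if name in record and not isinstance(record[name], typ)
    ]
    additive = sorted(set(record) - set(expected))
    return {"breaking": breaking, "additive": additive}

new_event = {"user_id": 123, "event": "click", "timestamp": "2024-03-07T12:00:00Z",
             "utm_source": "google_ads", "session_duration": 45}
print(check_record(new_event))
# {'breaking': [], 'additive': ['session_duration', 'utm_source']}
```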

Even with these measures, schema mismatches can still cause downstream data integrity issues. When using Redshift, an ETL pipeline that attempts to load new fields into a pre-existing table may fail unless explicit ALTER TABLE commands are issued beforehand. In Athena, queries can become inconsistent, as older partitions lack newly added columns.

Mismatched Column Orders Across Datasets

Column order matters more than most developers realize. Many AWS ETL jobs, particularly those processing CSVs or JSON files, assume fields appear in a consistent order. When column positions change unexpectedly, the wrong data can end up in the wrong fields—a silent failure that can go undetected for weeks.

Imagine a Glue job processing two CSV files from different years. The 2023 dataset is structured as follows:

```text
id,name,email,age
1,John,john@example.com,30
```

The 2024 dataset, however, introduces a new phone_number column, but inserts it in the middle instead of at the end:

```text
id,name,phone_number,email,age
2,Jane,1234567890,jane@example.com,28
```

Since AWS Glue automatically infers schemas, the job may misalign fields in Spark DataFrames:

```python
df.show()
```

```text
+---+----+----------+----------------+
| id|name|     email|             age|
+---+----+----------+----------------+
|  2|Jane|1234567890|jane@example.com|
+---+----+----------+----------------+
```

In this case, the email column was populated with phone numbers and the age column with email addresses. These errors can be devastating, especially in financial or customer data processing where precision is critical.

To prevent column misalignment, developers:

- Define explicit column schemas in Spark or Glue instead of relying on auto-detection.

- Use self-describing formats like Avro or JSON (with explicit key-value pairs) instead of positional formats like CSV.
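Mapping values by header name rather than position is what makes the 2024 file safe to process. Here is a plain-Python sketch with the standard `csv` module and the two sample files above; the target column list is an assumption. In Spark, the rough equivalent is reading each file with `header=True` and combining them with `unionByName` (with `allowMissingColumns=True` on Spark 3.1+) instead of a positional `union`.

```python
import csv
import io
from typing import Dict, List, Optional

TARGET_COLUMNS = ["id", "name", "email", "age", "phone_number"]

def read_by_header(text: str) -> List[Dict[str, Optional[str]]]:
    """Map each value to its column using the file's own header row,
    filling columns absent from this file with None."""
    reader = csv.DictReader(io.StringIO(text))
    return [{col: row.get(col) for col in TARGET_COLUMNS} for row in reader]

csv_2023 = "id,name,email,age\n1,John,john@example.com,30\n"
csv_2024 = "id,name,phone_number,email,age\n2,Jane,1234567890,jane@example.com,28\n"

rows = read_by_header(csv_2023) + read_by_header(csv_2024)
for row in rows:
    print(row["id"], row["email"], row["phone_number"])
# 1 john@example.com None
# 2 jane@example.com 1234567890
```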

Another common issue is when data schemas differ by source. A data lake might store multiple versions of the same dataset, where older files lack fields present in newer ones. AWS Athena, for example, fails queries when reading across partitions with different schemas unless the schema evolution feature is explicitly enabled.

In such cases, table formats with built-in schema evolution (Iceberg or Delta Lake) are preferable because they allow queries across different schema versions without breaking. Redshift Spectrum, however, requires explicit ALTER TABLE statements when new fields are introduced, making schema evolution harder to manage.

Glue Crawlers Auto-Generating Incorrect Schemas

AWS Glue Crawlers help automate schema inference by scanning data in S3 and generating table definitions in Glue Data Catalog. However, they are notoriously unreliable and can create incorrect schemas.

One of the biggest issues is that Glue Crawlers sometimes misclassify data types. Suppose an ETL pipeline stores numerical values in JSON files. The first scan might analyze:

```json
{"temperature": 25}
```

Glue infers the schema as:

```sql
temperature INT
```

But later, new data includes decimal values:

```json
{"temperature": 25.5}
```

Since Glue previously inferred temperature as INT, queries in Athena truncate decimal values instead of failing. This is especially problematic in financial applications where precision matters.

Another issue is schema merging failures. If Glue Crawlers scan multiple partitions with slightly different schemas, they sometimes:

- Ignore new columns (causing data loss).

- Generate inconsistent schemas (breaking Athena and Redshift queries).

For example, in an e-commerce dataset, Glue might crawl two partitions:

```text
s3://my-bucket/2023/orders.json --> Schema: (id INT, price FLOAT)
s3://my-bucket/2024/orders.json --> Schema: (id INT, price FLOAT, discount FLOAT)
```

In this scenario, Glue sometimes fails to recognize discount as a valid column and generates an incorrect schema, causing Athena queries to ignore discount data.

To avoid Glue Crawler failures, developers often:

- Manually define schemas in AWS Glue instead of relying on auto-detection.

- Use Apache Iceberg or Delta Lake, which maintain schema evolution metadata in a more reliable manner.
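Defining schemas manually is easier when the merge rules are explicit. The sketch below, plain Python with hypothetical helper names, merges per-partition schemas the way you would want a crawler to: it keeps the union of columns (so `discount` survives) and widens `INT` to `DOUBLE` when any partition holds decimals (so `temperature` keeps its fractions). Type names mimic Athena DDL, and the widening table covers only these cases by assumption.

```python
from typing import Dict, List

# Allowed widenings: (existing, incoming) -> merged type
WIDEN = {("INT", "DOUBLE"): "DOUBLE", ("DOUBLE", "INT"): "DOUBLE",
         ("INT", "BIGINT"): "BIGINT", ("BIGINT", "INT"): "BIGINT"}

def infer_type(value: object) -> str:
    """Map a JSON value to a column type (bool first: it is an int subclass)."""
    if isinstance(value, bool):
        return "BOOLEAN"
    if isinstance(value, int):
        return "INT" if -2**31 <= value < 2**31 else "BIGINT"
    if isinstance(value, float):
        return "DOUBLE"
    return "STRING"

def merge_schemas(schemas: List[Dict[str, str]]) -> Dict[str, str]:
    """Union columns across partitions, widening compatible numeric types."""
    merged: Dict[str, str] = {}
    for schema in schemas:
        for col, typ in schema.items():
            old = merged.get(col)
            if old is None or old == typ:
                merged[col] = typ
            elif (old, typ) in WIDEN:
                merged[col] = WIDEN[(old, typ)]
            else:
                raise TypeError(f"incompatible types for {col}: {old} vs {typ}")
    return merged

p2023 = {"id": "INT", "price": "FLOAT"}
p2024 = {"id": "INT", "price": "FLOAT", "discount": "FLOAT"}
print(merge_schemas([p2023, p2024]))  # {'id': 'INT', 'price': 'FLOAT', 'discount': 'FLOAT'}
print(merge_schemas([{"temperature": infer_type(25)}, {"temperature": infer_type(25.5)}]))
# {'temperature': 'DOUBLE'}
```

Raising on incompatible types, rather than picking one, forces a human decision instead of a silently wrong table definition.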

A final issue with Glue Crawlers is that they struggle with deeply nested JSON structures. If an ETL pipeline processes semi-structured JSON, Glue may flatten hierarchical fields incorrectly or assign STRING data types to complex objects, forcing additional transformations in Spark.

For example, consider a dataset with nested attributes:

```json
{
  "user": {
    "id": 123,
    "name": "John Doe"
  }
}
```

Glue may infer:

```sql
user STRING
```

instead of creating a proper STRUCT type, leading to loss of structured query capability in Athena.

To work around this, you may need to:

- Use Spark’s `from_json()` function to manually parse nested fields.

- Store JSON data in Parquet format, which maintains correct data structures.
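What `from_json()` followed by a select on nested fields achieves in Spark (for example `df.withColumn("user", from_json("user", schema)).select("user.id", "user.name")`) is easy to picture in plain Python: parse the string into a real structure, then address nested fields by path. The `flatten` helper below is a hypothetical illustration that turns the `user` object above into dotted columns while keeping the original value types instead of one opaque STRING.

```python
import json
from typing import Any, Dict

def flatten(obj: Dict[str, Any], prefix: str = "") -> Dict[str, Any]:
    """Recursively flatten nested dicts into dotted keys; lists are left as-is."""
    flat: Dict[str, Any] = {}
    for key, value in obj.items():
        path = f"{prefix}{key}"
        if isinstance(value, dict):
            flat.update(flatten(value, prefix=f"{path}."))
        else:
            flat[path] = value
    return flat

raw = '{"user": {"id": 123, "name": "John Doe"}}'  # what a STRING column would hold
print(flatten(json.loads(raw)))  # {'user.id': 123, 'user.name': 'John Doe'}
```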

5. Development & Debugging Challenges

Unlike traditional setups, where you can run ETL scripts on your machine before deploying, AWS Glue forces you to push code to the cloud and hope for the best. If a job fails, you have to wait for logs to show up, sift through vague error messages, and guess what happened.

Glue’s version control doesn’t help, either. There’s no built-in way to track changes or roll back to a working script. If multiple developers update the same job, things get messy fast.

Debugging is slow. Collaboration is clunky. Fixing a broken ETL job takes longer than it should. Let’s break down why—and what you can do about it.

No Local Debugging Environment for Glue Jobs

For example, as you’re working on a Glue ETL job to process S3 data, you cannot simply run the script on your laptop using PySpark. Instead, you must submit the job to Glue, wait for cluster spin-up time (5-15 minutes), and then check CloudWatch logs for errors.

If a syntax error or data transformation issue is found, you must modify the script, redeploy, and repeat the entire process.

The lack of interactive debugging tools makes troubleshooting particularly painful, as you cannot set breakpoints, inspect data step-by-step, or interactively tweak transformations as you could in a local Jupyter Notebook or PyCharm.

To mitigate this, some teams set up Dockerized local Spark environments to simulate Glue’s runtime environment. This involves running:

- A local Spark cluster configured like Glue (`spark-submit` with Glue-specific dependencies).

- Mocked AWS services using `moto` for S3, DynamoDB, and other integrations.

Even with these workarounds, local testing does not fully replicate Glue’s behavior. As a result, developers often resort to trial-and-error debugging in Glue, which is time-consuming and inefficient.
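One way to shrink the trial-and-error loop is to keep transformation logic in plain functions that never touch GlueContext, so they can be unit-tested on a laptop in seconds and only the thin read/write layer needs a Glue run. A minimal sketch of that split, with a hypothetical `clean_customer` transform and the standard library's `unittest`:

```python
import unittest
from typing import Optional

def clean_customer(record: dict) -> Optional[dict]:
    """Pure transformation: normalize email, drop records without an id.

    No GlueContext or Spark here, so it runs anywhere Python runs.
    """
    if record.get("id") is None:
        return None
    email = (record.get("email") or "").strip().lower()
    return {"id": int(record["id"]), "email": email or None}

class CleanCustomerTest(unittest.TestCase):
    def test_normalizes_email(self):
        self.assertEqual(clean_customer({"id": "7", "email": " Jane@Example.COM "}),
                         {"id": 7, "email": "jane@example.com"})

    def test_drops_missing_id(self):
        self.assertIsNone(clean_customer({"email": "x@example.com"}))

    def test_blank_email_becomes_none(self):
        self.assertIsNone(clean_customer({"id": 1, "email": "  "})["email"])
```

Run it with `python -m unittest <module>`; the Glue script then only wires this tested logic to its sources and sinks.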

Poor Error Messages Making Troubleshooting Difficult

Unlike traditional Spark logs that provide detailed stack traces, Glue errors are often masked by AWS-managed infrastructure, showing generic messages like “Job failed due to an internal service error” or “An error occurred while reading from S3”. These messages lack actionable detail, so you’re forced to manually inspect multiple log sources to find the root cause.

A common failure scenario is a Glue job that processes large JSON files from S3. If a file contains unexpected nested structures, Glue may throw a schema mismatch error. Instead of providing details on which field caused the failure, Glue’s error message might simply state:

An error occurred while writing to the Data Catalog: Null Pointer Exception.

This message gives zero context about which record or column caused the issue. To debug, developers must:

- Check AWS CloudWatch logs, which are split across multiple log streams, making it tedious to find relevant details.

- Re-run the Glue job with sample data while enabling `INFO` or `DEBUG` logs (which significantly increases processing costs).

Even worse, Glue job logs are truncated after a certain size, meaning critical error details may not even appear in CloudWatch. In contrast, debugging traditional Spark jobs in on-prem or self-managed environments is easier because logs can be viewed in real-time, stack traces provide precise failure locations, and tools like spark-shell allow interactive testing.

To work around Glue’s poor logging, some teams implement custom error handling within ETL scripts. This involves:

- Adding explicit try/except blocks in PySpark transformations to capture and log errors before Glue masks them.

- Writing intermediate debugging outputs to S3 or DynamoDB so that failed transformations can be inspected outside of Glue.
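Here is a sketch of the try/except idea in plain Python: wrap the per-record transformation so each failure is captured together with the offending record and the step name, instead of surfacing later as a generic Glue error. `safe_apply` and the dead-letter structure are hypothetical; in a real job the dead letters would be written to S3 or DynamoDB as described above.

```python
import json
import traceback
from typing import Callable, Dict, Iterable, List, Tuple

def safe_apply(
    records: Iterable[dict], transform: Callable[[dict], dict], step: str
) -> Tuple[List[dict], List[Dict[str, str]]]:
    """Apply transform to each record, collecting failures with context.

    Returns (good_rows, dead_letters). Each dead letter keeps the step name,
    the raw record, the exception type and message, and the traceback.
    """
    good: List[dict] = []
    dead: List[Dict[str, str]] = []
    for record in records:
        try:
            good.append(transform(record))
        except Exception as exc:  # deliberately broad: every failure becomes a dead letter
            dead.append({
                "step": step,
                "record": json.dumps(record, default=str),
                "error": f"{type(exc).__name__}: {exc}",
                "trace": traceback.format_exc(),
            })
    return good, dead

def parse_price(r: dict) -> dict:
    return {**r, "price": float(r["price"])}

good, dead = safe_apply([{"id": 1, "price": "9.99"}, {"id": 2, "price": "n/a"}],
                        parse_price, "parse_price")
print(len(good), dead[0]["error"])  # 1 ValueError: could not convert string to float: 'n/a'
```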

However, these solutions require additional engineering effort and do not fully solve the problem.

Version Control and Collaboration Issues with Glue Scripts

AWS Glue job scripts are stored in Amazon S3 (the Data Catalog holds table metadata, not scripts). If multiple developers work on the same ETL job, there is no built-in way to track who changed what, when, or why.

For example, a company running daily Glue jobs to clean and enrich customer data might have multiple engineers modifying the same ETL script. One developer optimizes a transformation, while another introduces a new data source. Since Glue does not have native Git integration, both changes are manually uploaded to S3, with the latest upload overwriting previous work. If a bug is introduced, rolling back requires digging through historical S3 versions, which is cumbersome and error-prone.

Another issue is that Glue automatically saves script changes, meaning accidental edits get permanently stored without the ability to revert. This is particularly problematic in enterprise environments, where changes should go through proper code review and approval processes before deployment.

To mitigate these issues, some teams:

- Manually copy Glue scripts into Git repositories, which adds overhead and does not integrate natively with Glue workflows.

- Use AWS CodeCommit with Glue, which allows version control but lacks direct integration with the Glue UI, making it difficult to sync changes automatically.

Conclusion

If ETL pipelines were easy, we wouldn’t be talking about ETL challenges and solutions. Jobs slow down. Data gets messy. Errors make no sense. AWS gives you the tools, but it’s up to you to make them work. That means designing pipelines that handle schema drift, late data, and reprocessing without breaking. It means logging everything, testing changes before deploying, and choosing the right tool for the job.

Don’t wait until things fall apart. Fix what slows you down the most. Automate where you can, document what you build, and track every change. A pipeline that runs today isn’t good enough—it has to keep running tomorrow, too.