Businesses generate and collect massive amounts of data. In 2024, the total volume of data created worldwide reached an estimated 149 zettabytes.

But raw data alone doesn’t do any good.

To make it valuable, you need to clean, organize, convert, or enrich it. Only then does it become fit for your business objectives.

That is exactly what extract, transform, load (ETL) tools are for.

In this guide, we’ll break down the leading ETL tools of 2025 and how they help businesses streamline data processes.

What Are ETL Tools?

ETL tools are software that handles the extracting, transforming, and loading of data. As the name suggests, they collect raw data from the chosen sources, refine and structure it, and load it into a target destination.

The ultimate goal is to make data usable for analysis, reporting, decision-making, or other use cases. By automating what would otherwise be a time-consuming manual process, ETL tools save time, reduce errors, and improve data accuracy.

Let’s break it down with an example.

You run an e-commerce business and want to analyze customer reviews from a product review website. Here’s how an ETL tool could help.

- Extract. The ETL tool connects to the review website through an API. It pulls review titles, customer ratings, and timestamps. This data is unstructured and scattered across the pages.

- Transform. ETL pipeline tools filter reviews to focus only on products sold on your platform and standardize date formats. Sentiment analysis algorithms are applied to categorize reviews as positive, negative, or neutral. Irrelevant data points are removed.

- Load. The cleaned review data is loaded into your data warehouse or analytics dashboard.

Now, you can generate visual reports to spot areas for improvement or product highlights.
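The three steps above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular ETL tool works internally: the sample reviews, the `CATALOG` set, the two date formats, and the rating-based `sentiment_from_rating` helper are all assumptions made for the example, and an in-memory SQLite database stands in for the data warehouse.

```python
import sqlite3
from datetime import datetime

# Hypothetical sample standing in for an API response from the review site.
RAW_REVIEWS = [
    {"product": "desk lamp", "title": "Great lamp, love it", "rating": 5, "date": "2025-01-14"},
    {"product": "desk lamp", "title": "Broken on arrival", "rating": 1, "date": "14/02/2025"},
    {"product": "garden hose", "title": "Works fine", "rating": 3, "date": "2025-03-02"},
]

CATALOG = {"desk lamp"}  # products sold on your platform (assumed)
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")  # formats assumed to appear in the source


def extract():
    """Extract: a real pipeline would call the review site's API here."""
    return list(RAW_REVIEWS)


def normalize_date(value):
    """Try each known format and return an ISO 8601 date string."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value, fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date format: {value!r}")


def sentiment_from_rating(rating):
    """Toy stand-in for sentiment analysis: bucket reviews by star rating."""
    if rating >= 4:
        return "positive"
    if rating <= 2:
        return "negative"
    return "neutral"


def transform(reviews):
    """Transform: keep catalog products, standardize dates, tag sentiment."""
    return [
        (r["product"], r["title"], r["rating"],
         normalize_date(r["date"]), sentiment_from_rating(r["rating"]))
        for r in reviews
        if r["product"] in CATALOG
    ]


def load(rows, conn):
    """Load: write the cleaned rows into a warehouse table (SQLite here)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS reviews "
        "(product TEXT, title TEXT, rating INTEGER, review_date TEXT, sentiment TEXT)"
    )
    conn.executemany("INSERT INTO reviews VALUES (?, ?, ?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract()), conn)
summary = conn.execute(
    "SELECT sentiment, COUNT(*) FROM reviews GROUP BY sentiment ORDER BY sentiment"
).fetchall()
print(summary)  # [('negative', 1), ('positive', 1)]
```

Keeping each stage in its own function mirrors how ETL tools separate connectors, transformations, and destinations, so one stage can be swapped without touching the others.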

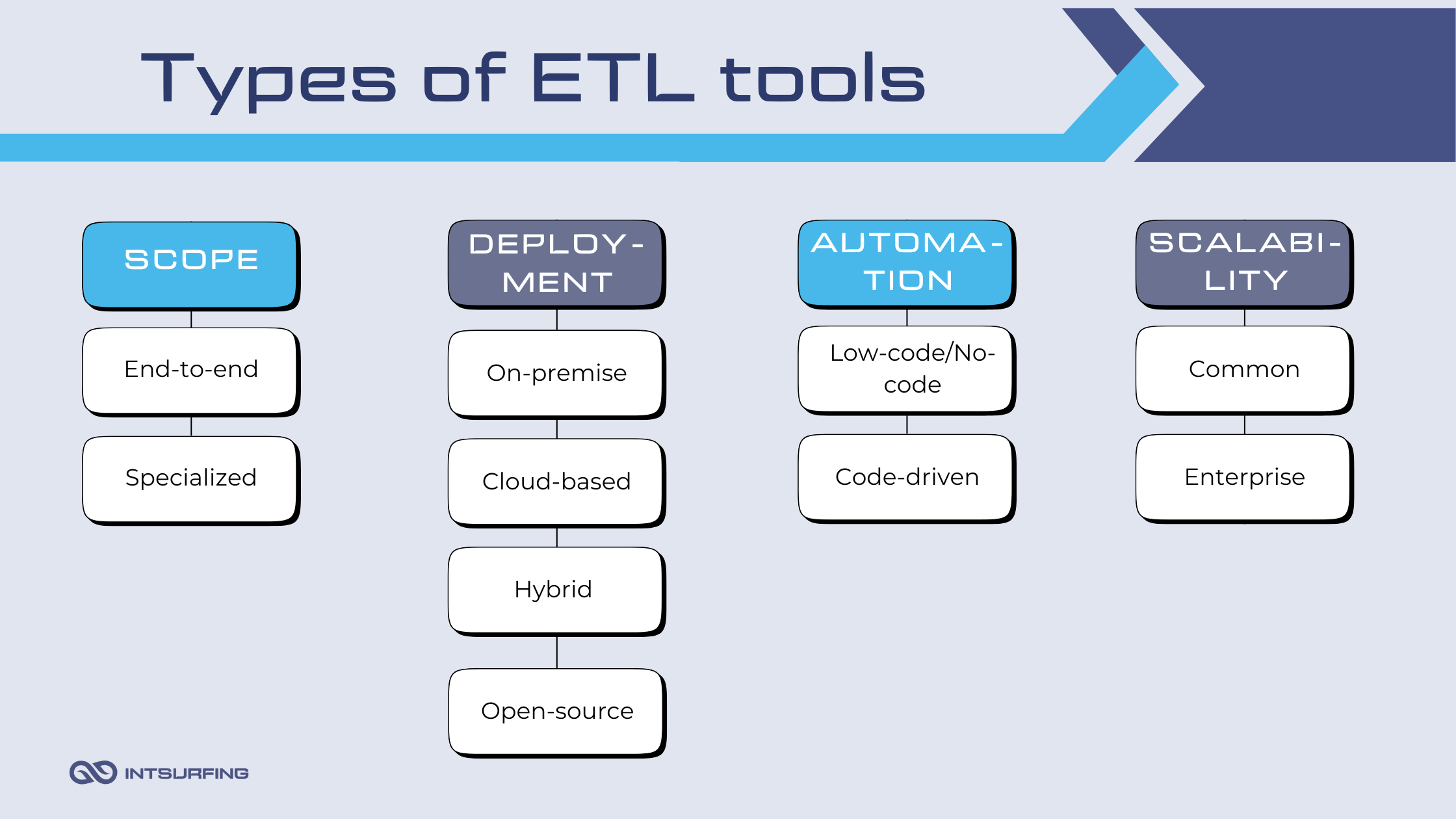

Types of ETL Tools

Some tools handle the entire ETL process end-to-end. Others focus on just one step.

Which is better?

It all comes down to your business needs.

End-to-end ETL tools for big data are perfect if you want simplicity and fewer moving parts. They manage the entire workflow and offer a unified solution that’s easier to set up and maintain.

Specialized tools are ideal if you prefer flexibility or already have systems in place for certain tasks. You can pick the best tool for each step and, this way, create a custom ETL pipeline.

ETL tools can also be grouped by:

- Deployment

- Automation level

- Scalability

Let’s look into these in more detail.

Based on Deployment

When categorizing ETL tools by deployment, the options typically fall into four categories: on-premise, cloud-based, hybrid, and open-source.

On-Premise

These are installed and run on your organization’s local servers or data centers. They are well-suited for companies with strict data security and compliance requirements.

Features:

- Full control over the infrastructure, configurations, and security.

- Works well with large-scale, legacy systems that are not cloud-compatible.

- Requires substantial hardware and IT team support for maintenance and upgrades.

Cloud-Based

Cloud-based ETL tools run on platforms such as AWS, Azure, or GCP, which eliminates the need for physical hardware. That makes them a good fit for businesses prioritizing agility and cost efficiency.

Features:

- Native support for cloud ecosystems.

- Real-time data processing and auto-scaling for high-volume tasks.

- Pay-as-you-go pricing models reduce upfront costs.

- Integration with cloud-native services (S3, BigQuery, or Snowflake).

Hybrid

This setup combines the capabilities of on-premise and cloud-based solutions, so businesses can process data across both environments. It is especially useful for organizations transitioning to the cloud or dealing with hybrid infrastructure setups.

Features:

- Integration across multiple environments.

- Synchronization between on-premise databases and cloud-based systems.

- Ensures compliance for sensitive data while leveraging the scalability of the cloud.

Open-Source

Open-source ETL tools are freely available. You can modify, share, and use the source code without licensing fees. They are often used by startups, academic institutions, or tech-savvy teams who value customization and control over their ETL workflows.

Features:

- No licensing costs.

- Users can tailor the tool to their exact needs.

- Supported by developer communities rather than commercial vendors.

Based on Automation Level

Automation level is an important factor when choosing an ETL solution. It determines how much technical expertise is needed to set up, maintain, and scale your processes.

ETL automation tools can be broadly categorized into Low-Code/No-Code and Code-Driven tools.

Low-Code/No-Code ETL software allows users to create ETL pipelines through graphical interfaces without writing extensive code. It’s ideal for teams with limited programming expertise or businesses looking to deploy solutions quickly.

Features:

- Users design ETL pipelines through GUI-based tools using flowcharts or drag-and-drop components.

- Integration with popular databases, APIs, and file systems without additional coding.

- Dynamically adjusts to handle varying data loads.

- Quick deployment with minimal configuration, ideal for time-sensitive projects.

Code-Driven automated ETL tools require developers to write scripts to build and manage ETL workflows. They are well-suited for complex, large-scale, or highly specialized data pipelines.

Features:

- Full control over ETL logic, transformations, and workflows.

- Many code-driven tools are open-source.

- Optimized for processing massive datasets across distributed systems.

- Integration with CI/CD pipelines.

Based on Scalability

There are two main categories based on scalability: Common and Enterprise-grade solutions. The primary difference lies in their ability to manage increasing data loads, advanced functionality, and integration capabilities.

Common ETL tools are meant for small to medium-sized businesses or use cases where data volumes and complexity are moderate. They provide essential ETL functionality without requiring extensive infrastructure or high upfront investment.

Features:

- Limited to processing small-to-medium datasets.

- Prebuilt connectors for popular data sources and destinations.

- Straightforward deployment and user-friendly interfaces.

- Suitable for periodic or batch data processing.

Enterprise ETL solutions handle large-scale, complex data environments. They offer advanced features: distributed processing, real-time capabilities, and multi-cloud integration.

Features:

- Built for processing massive datasets across distributed systems.

- Support for real-time data streaming and event-driven workflows.

- Extensive security, compliance, and governance features.

- Integration with advanced analytics, AI, and machine learning platforms.

- Scalable infrastructure for handling fluctuating data loads.

Here is how these two types compare.

| Feature | Common Solutions | Enterprise-Grade Solutions |
|---|---|---|
| Data Volume | Moderate | Massive (petabytes and more) |
| Processing | Batch | Batch, real-time, and event-driven |
| Deployment | Simple setup | Distributed, multi-cloud |
| Advanced Features | Basic transformations | AI/ML integration, metadata automation |
| Cost | Affordable | Higher, but value scales with data size |
| Use Cases | Small to medium businesses | Global enterprises with big data needs |

How to Choose the Best Tools for ETL

Every organization operates with a unique business model and culture, and the data it collects reflects this. While your data needs and goals will vary, there are common criteria you can use to evaluate ETL tools. They are outlined below.

- Business requirements. Identify your use case—whether you need batch processing, real-time streaming, or both. Ensure the tool integrates with your data sources and supports your data destination to avoid compatibility issues. Additionally, evaluate the volume of data it can handle.

- Deployment method. Determine whether your organization prefers an on-premise, cloud-based, or hybrid deployment model. The right choice depends on your infrastructure, compliance requirements, and future scalability goals.

- Scaling needs. Choose a tool that can adapt to growing data volumes, adding more sources, or supporting more complex transformations as needed.

- Performance. Evaluate the tool’s speed, reliability, and resource usage during ETL operations, particularly for large or real-time datasets. High-performing tools prevent bottlenecks and ensure data availability.

- Technical literacy. Consider the technical proficiency of your team. A highly technical team may benefit from code-driven tools, while non-technical teams might prefer low-code/no-code solutions. Also, evaluate the training required to onboard your team. Tools with intuitive interfaces or extensive documentation may reduce the time to proficiency.

- Integration capabilities. Ensure the software supports pre-built connectors for your most critical systems. For custom integrations, check whether it allows easy API-based connectivity.

- Cost. Understand how the tool is priced—subscription-based, pay-per-use, or open-source. Factor in additional expenses (setup, training, and maintenance costs) as these can affect the overall ROI of your ETL investment.

ETL Tool List in 2025: Best Tools for ETL

In this section, we’ll list ETL tools that are popular in 2025. We’ll highlight their core functionalities, ideal use cases, and what sets them apart.

Comparison of ETL Tools

Below is a comparison table with the key features, pricing, and ideal use cases for each tool. Use it as a quick reference when shortlisting ETL tools.

| Tool | Key Features | Pricing | Ideal Use Cases |
|---|---|---|---|
| Nannostomus | Customizable web scraping, cloud-based, microservices architecture | License-based | Data extraction |
| Apache Airflow | Workflow orchestration, DAGs for task management, Python-based customization | Open-source | Complex workflows, large-scale pipeline orchestration |
| Informatica PowerCenter | Enterprise-grade, metadata management, advanced transformation capabilities | Subscription-based for enterprise features | Large enterprises requiring advanced transformations and governance |
| Apache NiFi | Real-time streaming, drag-and-drop interface, data flow monitoring | Free and open-source; infrastructure costs apply | Real-time data streaming for IoT, telecom, and e-commerce |
| Talend | Integrated data quality tools, big data support, over 900 connectors | Free open-source version; subscription for advanced features | Businesses prioritizing data quality and big data integration |
| IBM DataStage | Parallel processing engine, multicloud integration, metadata tracking | Subscription-based, tailored to enterprise needs | Enterprises with high data volumes in multicloud environments |
| AWS Glue | Serverless, automatic schema discovery, real-time processing | Pay-as-you-go based on data processed and runtime | AWS users with scalable, cloud-native ETL needs |
| Airbyte | Open-source, AI-driven connector creation, reverse ETL support | Free open-source; enterprise edition available | Customizable ETL for AI workflows and unstructured data |

1. Nannostomus

Nannostomus is a cloud-based ETL tool focused on data extraction. Built on a microservices architecture, it is designed for scalability and fault tolerance. With its help, you can pull data from complex and dynamic sources (e-commerce platforms, social media websites, and so on). Nannostomus offers a C# code library with pre-built code snippets, so even junior developers can create efficient data extraction bots. The tool also includes a console that balances the load across virtual machines. To accelerate data gathering for large-scale projects, the software supports batch processing of websites.

Key features:

- Microservices architecture

- Customizable scraping rules

- C# code library for building scrapers

- Cloud-based infrastructure

- Supports workflow orchestration, including scheduling and event-based triggers

- Optimizes resource usage with load balancing

- Batch processing support

Pricing: A license-based model.

Nannostomus is perfect for businesses that rely on large-scale data extraction and transformation from web-based sources.

2. Apache Airflow

Apache Airflow is an open-source platform for orchestrating workflows. While it is not a standalone ETL tool, it excels at managing dependencies and automating workflows through its Directed Acyclic Graphs (DAGs). Fully programmable in Python, Airflow offers extensive flexibility and integration capabilities. This makes it a favorite for tech-savvy teams handling large-scale, distributed workflows.

Key features:

- DAGs visualize task sequences and dependencies

- Supports custom operators and integration with databases, APIs, and external tools

- Built-in support for periodic and event-driven task execution with robust retry mechanisms

- Free to use, backed by a large community offering plugins, updates, and support

Pricing: Free to use.

Apache Airflow is an excellent choice for orchestrating complex workflows and automating dependencies across data pipelines.
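To make the DAG idea concrete, here is a minimal sketch of dependency-ordered execution. It uses only Python's standard `graphlib` module and is not Airflow code or Airflow's scheduler; the task names are hypothetical. It shows how a directed acyclic graph determines which tasks may run together and which must wait.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
dependencies = {
    "extract_orders": set(),
    "extract_customers": set(),
    "join_datasets": {"extract_orders", "extract_customers"},
    "load_warehouse": {"join_datasets"},
    "refresh_dashboard": {"load_warehouse"},
}

sorter = TopologicalSorter(dependencies)
sorter.prepare()  # raises graphlib.CycleError if the graph contains a cycle

execution_waves = []
while sorter.is_active():
    # Tasks whose dependencies have all finished; these could run in parallel.
    ready = sorted(sorter.get_ready())
    execution_waves.append(ready)
    sorter.done(*ready)  # mark them finished so downstream tasks unlock

for wave in execution_waves:
    print(wave)
# ['extract_customers', 'extract_orders']
# ['join_datasets']
# ['load_warehouse']
# ['refresh_dashboard']
```

An orchestrator adds scheduling, retries, and monitoring on top of this ordering, but the dependency graph is the core abstraction.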

3. Informatica PowerCenter

Informatica PowerCenter is an enterprise-grade solution for handling high data volumes and complex transformations. It’s a favorite among large organizations and has been on the Gartner list of ETL tools for 11 years straight. Its extensive library of transformation functions makes it accessible to both technical and semi-technical users. However, the tool’s robust capabilities can make it resource-intensive, with high licensing fees, a steep learning curve for advanced features, and significant infrastructure requirements for optimal performance. Additionally, while Informatica has made strides in cloud integration, PowerCenter remains primarily an on-premise solution.

Key features:

- Stable and reliable for large-scale ETL operations with complex transformations.

- Offers extensive pre-built connectors for databases, cloud platforms, ERP systems, and more.

- Drag-and-drop functionality for designing workflows with a clear visual representation of data flows.

- Comprehensive support for data cleansing, deduplication, and validation.

- Role-based access controls, metadata management, and data masking for compliance.

- Built-in tools for workflow monitoring and generating detailed reports.

Pricing: A volume-based pricing model.

As such, it’s best suited for organizations with substantial budgets and long-term data integration requirements.

4. Apache NiFi

Apache NiFi is an open-source tool for automating and managing data flows between systems. It’s fantastic for handling real-time streaming data, giving you precise control over how data is routed, transformed, and prioritized. With built-in processors, it’s easy to connect to databases, APIs, and even IoT devices. Plus, the intuitive interface lets you monitor and track your data flows in real time. While it’s simple and effective for smaller workflows, things can get tricky with larger deployments.

Key features:

- Web-based interface for building and managing pipelines.

- Supports continuous streaming for scenarios requiring fast data collection and processing.

- Offers granular control over how data is routed, transformed, and prioritized.

- Pre-configured components for connecting to databases, APIs, IoT devices, and file systems.

- Intuitive interface for tracking data flows, with visibility into processing stages.

- Distributed architecture allows scaling for larger workflows across clusters.

Pricing: Free and open-source.

Apache NiFi is well-suited for organizations managing diverse and dynamic data sources, particularly those requiring real-time data streaming.

5. Talend

Talend stands out for its ability to unify data integration with data quality and governance in a single solution. Automated data cleansing, validation, and deduplication are integrated into the workflow, so your data is improved as it is moved. Talend’s cloud-native architecture is another differentiator: your team can build scalable pipelines that handle both batch and real-time data processing. Additionally, the platform’s big data connectors allow users to directly integrate with Hadoop, Spark, and Snowflake.

Key features:

- Automated cleansing, validation, and deduplication embedded into ETL workflows.

- Enables deployment on AWS, Azure, and Google Cloud.

- Direct connectors to Hadoop, Spark, and Snowflake.

- Combines batch and streaming data pipelines in a unified interface.

- Adapts to evolving data structures without requiring major workflow redesigns.

- Includes tools for creating, managing, and integrating APIs.

Pricing: Talend offers a free ETL tool (Talend Open Studio) for basic ETL needs and tiered subscription plans for more advanced enterprise features.

Talend is an excellent choice for businesses migrating to the cloud or working on big data initiatives.
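As a rough illustration of what cleansing, validation, and deduplication inside a workflow mean in practice, consider the sketch below. It is plain Python rather than Talend functionality; the sample records, the simplified `EMAIL_PATTERN`, and the "first record wins" deduplication rule are assumptions chosen for the example.

```python
import re

# Deliberately simple pattern for illustration; real validation is stricter.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

# Hypothetical customer records with typical quality problems.
records = [
    {"email": " Ana@Example.com ", "name": "Ana"},
    {"email": "ana@example.com", "name": "Ana P."},
    {"email": "not-an-email", "name": "Bob"},
    {"email": "cy@example.org", "name": "Cy"},
]


def cleanse(record):
    """Cleansing: trim whitespace and normalize the email's case."""
    return {"email": record["email"].strip().lower(), "name": record["name"].strip()}


def is_valid(record):
    """Validation: accept only records whose email matches the pattern."""
    return bool(EMAIL_PATTERN.match(record["email"]))


def clean_records(raw):
    """Cleanse, validate, then deduplicate on email (first record wins)."""
    seen = set()
    accepted, rejected = [], []
    for record in map(cleanse, raw):
        if not is_valid(record):
            rejected.append(record)  # keep rejects for review instead of dropping
        elif record["email"] not in seen:
            seen.add(record["email"])
            accepted.append(record)
    return accepted, rejected


accepted, rejected = clean_records(records)
print([r["email"] for r in accepted])  # ['ana@example.com', 'cy@example.org']
print([r["email"] for r in rejected])  # ['not-an-email']
```

Note that the order of steps matters: cleansing before deduplication is what lets the two spellings of Ana's email be recognized as the same record.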

6. IBM DataStage

If you need a tool to move, transform, and clean up data across different systems, IBM DataStage is built for the job. With this tool, you can handle large data volumes, whether you’re working on the cloud, on-premises, or both. Its standout feature is a parallel processing engine that makes heavy data tasks faster and more efficient. Plus, with built-in tools for data quality and metadata tracking, you can trust your data is reliable and well-organized. While it’s incredibly powerful, you’ll need a skilled team to set it up and get the most out of it.

Key features:

- High-performance parallelism for handling large data volumes.

- Operates across on-premises, hybrid, and multicloud infrastructures.

- Prebuilt connectors for various cloud and on-premises data warehouses, including IBM Netezza and IBM Db2 Warehouse SaaS.

- Automatic resolution of data quality issues during ingestion with IBM InfoSphere® QualityStage®.

- Enables data lineage tracking and policy-driven data access with IBM Knowledge Catalog.

- A user-friendly, machine learning-assisted interface.

- Supports automated workflows for transitioning from development to production.

- Distributed data processing and automated load balancing.

Pricing: A subscription model, with costs based on the features and deployment you choose.

IBM DataStage is perfect for large businesses that need to manage a lot of data across complex systems. It’s especially great if you care about data accuracy and tracking.
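The idea behind a parallel processing engine can be sketched as partitioning: split the data, transform each partition independently, then merge the results. The example below is a simplified illustration in Python, not a description of DataStage's engine; the order data and the `transform_partition` step are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(rows, n_parts):
    """Split rows into n_parts round-robin partitions."""
    return [rows[i::n_parts] for i in range(n_parts)]


def transform_partition(rows):
    """Hypothetical transformation: convert amounts from cents to dollars."""
    return [
        {"order_id": r["order_id"], "amount_usd": r["amount_cents"] / 100}
        for r in rows
    ]


# Hypothetical input: eight orders with amounts in cents.
orders = [{"order_id": i, "amount_cents": i * 250} for i in range(1, 9)]

# Each partition is transformed by its own worker.
with ThreadPoolExecutor(max_workers=4) as pool:
    results = list(pool.map(transform_partition, partition(orders, 4)))

# Merge the partitions back into a single, ordered dataset.
merged = sorted(
    (row for part in results for row in part),
    key=lambda r: r["order_id"],
)
print(len(merged), merged[0])  # 8 {'order_id': 1, 'amount_usd': 2.5}
```

Threads keep the sketch short, but in CPython they do not speed up CPU-bound work. Production engines typically distribute partitions across separate processes or machines.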

7. AWS Glue

With AWS Glue, you can discover, prepare, and integrate data for analytics, machine learning, and application development—all without managing infrastructure. This serverless ETL tool automatically scales resources, so you don’t have to worry about provisioning or maintenance. It has an automatic schema discovery tool and a centralized Data Catalog that simplifies organization and understanding of your data. AWS Glue also supports real-time streaming data. While it excels in cloud environments and integrates with AWS services, it may not be the best fit for on-premise needs or businesses new to the AWS ecosystem.

Key features:

- No infrastructure management—resources scale automatically as needed.

- A centralized repository to store metadata and manage ETL environments.

- Crawlers detect data structure and populate the Data Catalog with metadata.

- Flexibility to support both Extract, Transform, Load and Extract, Load, Transform patterns.

- Connects to Amazon S3, RDS, Redshift, third-party databases, and SaaS applications.

- AWS Glue Studio makes it easy to create and manage ETL jobs visually.

- Write ETL scripts in Python or Scala for more advanced transformations.

- Visual interface for data cleaning and preparation without writing code.

- Real-time data integration.

Pricing: AWS Glue follows a pay-as-you-go pricing model, charging based on the amount of data processed and the time ETL jobs run.

AWS Glue is best suited for businesses already using AWS services and needing a scalable, serverless ETL solution. It’s a perfect fit for organizations that deal with real-time data processing, cloud-native workflows, or require seamless integration with other AWS tools.
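Automatic schema discovery can be pictured as scanning sample records and inferring a type for each field. The sketch below is a simplified illustration of that idea in Python. It is not the algorithm Glue crawlers use, and the type names and widening rules are assumptions made for the example.

```python
def infer_type(value):
    """Map a Python value to an illustrative column type name."""
    if isinstance(value, bool):  # check bool first: bool is a subclass of int
        return "boolean"
    if isinstance(value, int):
        return "bigint"
    if isinstance(value, float):
        return "double"
    return "string"


def infer_schema(records):
    """Infer one type per field, widening when records disagree."""
    schema = {}
    for record in records:
        for field, value in record.items():
            if value is None:
                schema.setdefault(field, None)  # field seen, type still unknown
                continue
            observed = infer_type(value)
            current = schema.get(field)
            if current is None:
                schema[field] = observed
            elif current != observed:
                # Integers and floats widen to double; any other mix becomes string.
                numeric = {current, observed} == {"bigint", "double"}
                schema[field] = "double" if numeric else "string"
    # Fields that only ever held None default to string.
    return {field: t or "string" for field, t in schema.items()}


# Hypothetical sample records with a missing value and an extra field.
sample = [
    {"id": 1, "price": 10, "in_stock": True, "note": None},
    {"id": 2, "price": 12.5, "in_stock": False, "note": "fragile"},
    {"id": 3, "price": 9.99, "sku": "A-17"},
]

schema = infer_schema(sample)
print(schema)
# {'id': 'bigint', 'price': 'double', 'in_stock': 'boolean', 'note': 'string', 'sku': 'string'}
```

The inferred schema is the kind of metadata a data catalog stores, so downstream jobs can query the data without hand-written table definitions.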

8. Airbyte

Airbyte is an open-source data integration platform that has recently enhanced its capabilities with AI-driven features. With Airbyte, you can connect to a vast array of data sources and destinations, utilizing its extensive library of over 550 prebuilt connectors. A standout feature is the AI Assistant, which automates much of the setup process for custom connectors. Additionally, Airbyte’s focus on unstructured data integration enables you to process and analyze unstructured and semi-structured data, which is particularly beneficial for AI and machine learning applications. While these AI enhancements offer powerful new functionalities, they are currently in beta and may require technical expertise to fully leverage.

Key features:

- AI assistant automates the creation of custom connectors.

- Access to over 550 prebuilt connectors for diverse data sources and destinations.

- Unstructured data integration.

- The open-source framework offers flexibility for customization and community-driven enhancements.

- Reverse ETL support enables data movement from warehouses back into operational tools.

- Modular architecture supports easy updates and scalability as your data needs grow.

- Docker-native deployment.

Pricing: Airbyte is a free ETL tool, with an enterprise edition available.

Airbyte is particularly well-suited for organizations aiming to integrate AI and machine learning workflows into their data operations.

Wrapping It Up

Picking the right ETL tool can make a big difference in how smoothly your data workflows run. To find the best fit, take a close look at what your business needs—whether it’s scalability, real-time processing, or integration with your existing tools.

Explore these tools, test their features, and see which one works best for your goals.