Businesses are surrounded by more data than ever before.

But here is the thing.

Some data sits comfortably in internal systems—CRMs, ERPs, and databases.

Other insights come from external sources—websites, social media, or third-party platforms.

To make things even more complex, much of this data is scattered across formats: structured spreadsheets or unstructured PDF reports.

To make use of this data, you need it all in one place, and that requires a properly implemented ETL flow.

In this article, we’ll break down the basics of ETL, covering:

- What ETL is and how it works

- Why it’s vital for your business

- Real-life examples of extraction, transformation, and loading

Let’s get into it.

What Is an ETL Process?

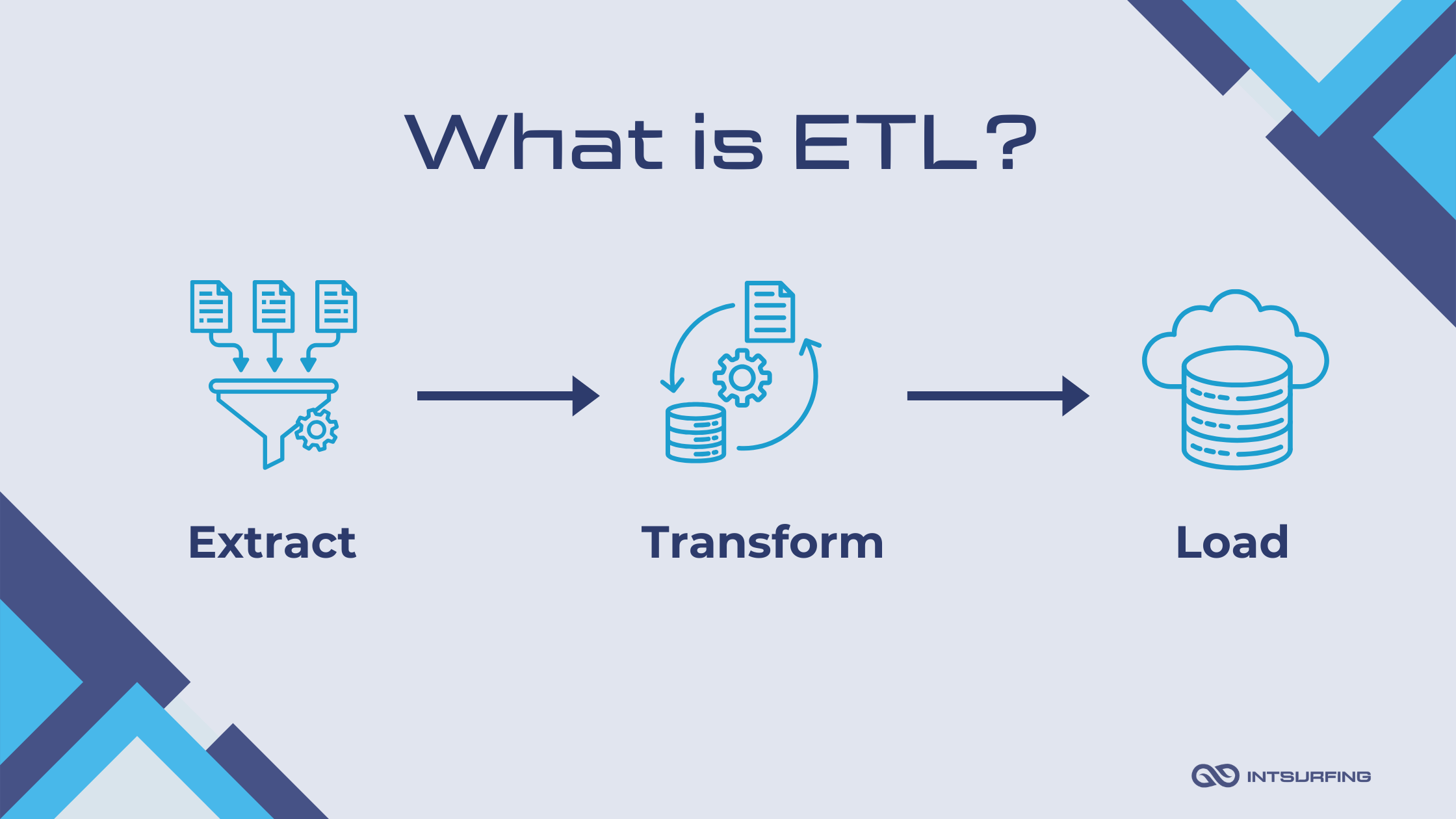

ETL stands for Extract, Transform, Load. It means gathering data, converting it into a usable format, and placing it into a central storage system.
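To make the three stages concrete, here is a minimal sketch in Python using only the standard library. The CSV text, the `payments` table, and the function names are all hypothetical, chosen purely for illustration; a production pipeline would read from real sources and write to a real warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw input: untidy names, amounts stored as text.
RAW_CSV = "name,amount\n alice ,10.5\nBOB,4\n"

def extract(text):
    """Extract: read the raw rows as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: trim and title-case names, convert amounts to floats."""
    return [(r["name"].strip().title(), float(r["amount"])) for r in rows]

def load(conn, rows):
    """Load: write the transformed rows into the central table."""
    with conn:  # commits on success, rolls back on error
        conn.executemany("INSERT INTO payments (name, amount) VALUES (?, ?)", rows)

conn = sqlite3.connect(":memory:")  # stand-in for a central storage system
conn.execute("CREATE TABLE payments (name TEXT, amount REAL)")
load(conn, transform(extract(RAW_CSV)))

stored = conn.execute("SELECT name, amount FROM payments ORDER BY name").fetchall()
print(stored)
```

Keeping each stage in its own function makes it easier to swap a source or destination later without touching the rest of the flow.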

Businesses across industries rely on ETL for a variety of purposes. Retailers use it to consolidate sales data from e-commerce platforms and physical stores for better inventory management. Financial institutions apply ETL to aggregate customer transaction data and detect fraud patterns. In healthcare, providers use ETL to merge patient records to create comprehensive medical histories.

What Is ETL in Data Warehousing?

Simply put, ETL is the mechanism that powers data warehouses. A data warehouse is a centralized system for storing and analyzing large volumes of data. In this context, ETL helps businesses aggregate information from disparate systems and standardize it so that it becomes ready for use.

What Is an ETL Developer?

An ETL developer is a professional responsible for designing, building, and maintaining the ETL pipelines. ETL developers work at the intersection of data engineering and analytics. Their responsibilities typically include:

- Developing ETL pipelines

- Data quality management

- Performance optimization

Now that you know what extract, transform, load (ETL) means, let’s look at how the process works.

How Does ETL Extraction, Transformation, Loading Work?

The ETL process is made up of three steps: Extraction, Transformation, and Loading. In the following sections, we’ll break down each of them to explore how they work and why they are crucial for effective data management. Let’s start with extraction.

Data Extraction in ETL

Data extraction is the first step in the ETL flow. It involves retrieving raw data from databases, APIs, flat files, cloud storage, websites, or any other source available to you.

Businesses deal with an overwhelming variety of data—customer feedback on review sites, performance logs from servers, or supply chain updates from external partners. Extraction brings all of this together. Its goal is to gather the information so it can be transformed and analyzed.

Where Does Data Come From in Data Extraction?

You can pull information from a wide range of sources, both internal and external.

Internal sources are systems and tools your organization uses daily. These include:

- Databases: SQL, NoSQL.

- Cloud-based systems: Amazon RDS.

- Enterprise tools: CRMs (e.g., Salesforce) or ERPs (e.g., SAP).

- Data lakes where raw data is stored in its native format.

To extract data from these sources, ETL pipelines typically use built-in APIs, SQL queries, or connectors. For example, a retail company might extract sales figures from its SQL database using automated SQL scripts.
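As a rough illustration of a scripted SQL extraction like the retail example, the sketch below queries an in-memory SQLite database. The `sales` table, its columns, and the `extract_sales` helper are assumptions made for the example, not a reference to any particular retail system.

```python
import sqlite3

def extract_sales(conn, since):
    """Return sales rows dated on or after `since` (ISO date string) as dicts."""
    conn.row_factory = sqlite3.Row  # lets each row be converted to a dict
    cursor = conn.execute(
        "SELECT order_id, sold_on, amount FROM sales "
        "WHERE sold_on >= ? ORDER BY order_id",
        (since,),  # parameterized to avoid SQL injection
    )
    return [dict(row) for row in cursor]

# Hypothetical source database, built in memory so the sketch runs anywhere.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (order_id INTEGER PRIMARY KEY, sold_on TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [(1, "2024-01-05", 120.0), (2, "2024-02-10", 80.5), (3, "2024-02-11", 42.0)],
)

rows = extract_sales(conn, "2024-02-01")
print(rows)
```

In practice a scheduler would run a script like this on a fixed cadence and hand the rows to the transformation step.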

External sources are often unstructured or semi-structured and require more effort to extract. These include:

- Websites

- Third-party platforms

Extracting data from these sources usually requires specialized tools. They are known as web scrapers. They navigate through pages, identify the data you need, and pull it for further processing.
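The core idea of a scraper, finding the elements that hold the data and pulling their text, can be sketched with Python's built-in `html.parser`. The HTML snippet and the `price` class name are invented for the example; real scrapers also need to fetch pages, respect the site's terms, and cope with markup that changes.

```python
from html.parser import HTMLParser

class PriceParser(HTMLParser):
    """Collects the text of every element whose class attribute is 'price'."""

    def __init__(self):
        super().__init__()
        self._in_price = False
        self.prices = []

    def handle_starttag(self, tag, attrs):
        if dict(attrs).get("class") == "price":
            self._in_price = True

    def handle_endtag(self, tag):
        # Any closing tag ends the current price element in this flat markup.
        self._in_price = False

    def handle_data(self, data):
        if self._in_price and data.strip():
            self.prices.append(data.strip())

# Hypothetical page fragment standing in for a downloaded product listing.
html = """
<ul>
  <li><span class="name">Kettle</span> <span class="price">$24.99</span></li>
  <li><span class="name">Toaster</span> <span class="price">$31.50</span></li>
</ul>
"""

parser = PriceParser()
parser.feed(html)
print(parser.prices)
```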

Types of Data Extraction in ETL

Depending on how data is retrieved from the source systems, extraction can be full, incremental, hybrid, or real-time. Here’s an overview of each approach:

| Type | Definition | When to use | Pros | Cons |
|---|---|---|---|---|
| Full extraction | Retrieves all data from the source system in one go, regardless of changes. | Small datasets. When there's no mechanism to track changes in the source data. For initial loads or periodic full backups. | Simple to implement. Ensures complete data extraction. | Resource-intensive. May lead to redundant data. |
| Incremental extraction | Extracts only the data that has been added or changed since the last extraction. | Large datasets where extracting all data every time would be inefficient. Sources with mechanisms to identify changes (e.g., timestamps, version numbers, or change logs). | Reduces data transfer and storage costs. | Requires robust mechanisms to detect changes. Risk of missing updates if improperly configured. |
| Hybrid extraction | Combines full extraction with incremental updates based on system requirements. | When datasets are partially static and partially dynamic. | Offers flexibility to adapt to different data needs and system constraints. | Higher complexity in setup and management. May require more advanced tools. |
| Real-time extraction | Continuously extracts data in near real-time as changes occur in the source system. | When businesses need up-to-the-minute data for time-sensitive processes (stock trading or monitoring IoT devices). | Provides the most current data. | Demanding on system resources and network bandwidth. Prone to delays or errors in high-traffic systems. |

Selecting the best method among these types of extraction in ETL depends on the needs of your project, the structure of your data, and the goals of your organization.

If you’re unsure which type of data extraction is right for your project, it’s best to consult an expert. At Intsurfing, we specialize in ETL and data pipelines. We’ll help you assess your data, understand your objectives, and recommend the best approach to ensure your project’s success.
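Of the approaches above, incremental extraction is the one that most depends on a change-tracking mechanism. A common pattern is a timestamp "watermark": remember the latest change you have seen and only pull newer records next time. The sketch below assumes each source record carries an `updated_at` field; the record layout and function name are hypothetical.

```python
from datetime import datetime

def extract_incremental(records, last_watermark):
    """Return records changed after `last_watermark`, plus the new watermark."""
    changed = [r for r in records if r["updated_at"] > last_watermark]
    # If nothing changed, keep the old watermark.
    new_watermark = max((r["updated_at"] for r in changed), default=last_watermark)
    return changed, new_watermark

# Hypothetical source rows with a last-modified timestamp.
source = [
    {"id": 1, "updated_at": datetime(2024, 3, 1, 9, 0)},
    {"id": 2, "updated_at": datetime(2024, 3, 2, 14, 30)},
    {"id": 3, "updated_at": datetime(2024, 3, 3, 8, 15)},
]

watermark = datetime(2024, 3, 1, 12, 0)  # time of the previous run
changed, watermark = extract_incremental(source, watermark)
print([r["id"] for r in changed], watermark)

# A second run with no new changes extracts nothing.
changed_again, watermark = extract_incremental(source, watermark)
print(changed_again)
```

The watermark has to be stored somewhere durable between runs. If it is lost or set incorrectly, updates can be skipped, which is the misconfiguration risk noted in the table.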

Challenges of Extraction in the ETL Pipeline

Many projects encounter hurdles at the extraction stage that can delay timelines, inflate budgets, or compromise data quality. Let’s explore these challenges:

- Data heterogeneity. Extracting data from diverse sources with varying formats (e.g., relational databases, APIs, flat files, NoSQL databases, IoT devices).

- Incomplete or inconsistent data. Source systems may contain missing fields, null values, or inconsistencies in data quality.

- Access and permission restrictions. Limited access to source systems due to security policies, compliance regulations, or incompatible systems.

- Performance and system load. Extracting large volumes of data can strain source systems, especially during peak usage times.

- Change tracking in source systems. Difficulty detecting and capturing only updated or newly added records, especially in systems without change data capture (CDC) capabilities.

- Network latency and connectivity. Slow or unstable network connections during extraction, especially for cloud-based or geographically distributed sources.

- Versioning and schema changes. Source systems often undergo updates that change schemas, APIs, or file formats.
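One inexpensive guard against the schema-change problem is to compare each incoming record's fields with the set the pipeline expects, and flag any drift before the data moves on. This is only a sketch: the expected field names and the `schema_drift` helper are assumptions for illustration.

```python
# Hypothetical contract: the fields the pipeline expects from the source.
EXPECTED_FIELDS = {"order_id", "sold_on", "amount"}

def schema_drift(record, expected=EXPECTED_FIELDS):
    """Compare a record's field names with the expected set."""
    fields = set(record)
    return {
        "missing": sorted(expected - fields),
        "unexpected": sorted(fields - expected),
    }

old_record = {"order_id": 1, "sold_on": "2024-03-01", "amount": 19.9}
# The source renamed `sold_on` to `sale_date` in an update.
new_record = {"order_id": 2, "sale_date": "2024-03-02", "amount": 5.0}

drift_old = schema_drift(old_record)
drift_new = schema_drift(new_record)
print(drift_new)
```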

Data Transformation in ETL

Raw data from different sources is rarely ready to be analyzed. It might have inconsistencies, duplicates, or irrelevant information. Transformation ensures all the data adheres to a unified format and structure and is compatible with the target system.

Here’s how data transformation adds value:

- Improves data quality. It eliminates errors, removes duplicates, and fills missing values.

- Standardizes formats. It aligns data into a common format—for instance, standardizing date formats, currencies, or measurement units.

- Enhances usability. Aggregating and organizing data makes it easier to analyze. For example, summarizing daily sales figures into monthly reports.

- Supports advanced analytics. Enrichment processes can provide deeper insights for business decisions.

Let’s imagine a marketing agency extracting data from e-commerce websites to analyze pricing, customer reviews, and competitor product offerings. Here, the data might arrive in various formats.

One website provides JSON files through an API.

Another requires scraping HTML pages.

And yet another stores product details in downloadable CSV files.

Additionally, the data might have inconsistencies—prices displayed in different currencies, date formats varying by region, and customer ratings represented as stars on one site and percentages on another.

Through data transformation, the agency can standardize all currencies into USD, unify date formats, and convert ratings into a single scoring system.
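The agency's three standardization steps could look roughly like the sketch below. The exchange rates are fixed illustrative numbers, the record layout is invented, and the 0-100 scoring scale is just one possible choice of "single scoring system"; a real pipeline would pull current rates from a trusted source.

```python
from datetime import datetime

# Illustrative fixed rates, not real market data.
USD_RATES = {"USD": 1.0, "EUR": 1.10, "GBP": 1.25}

def to_usd(amount, currency):
    """Convert an amount to USD using the illustrative rate table."""
    return round(amount * USD_RATES[currency], 2)

def to_iso_date(text, fmt):
    """Parse a date in the source site's known format and return YYYY-MM-DD."""
    return datetime.strptime(text, fmt).date().isoformat()

def to_score(value, scale):
    """Map a rating onto 0-100: 'stars' is out of 5, 'percent' is already 0-100."""
    if scale == "stars":
        return round(value / 5 * 100, 1)
    if scale == "percent":
        return round(float(value), 1)
    raise ValueError(f"Unknown rating scale: {scale!r}")

# Hypothetical records from two sites with different conventions.
raw = [
    {"price": 20.0, "currency": "EUR", "date": "05/03/2024",
     "date_fmt": "%d/%m/%Y", "rating": 4.5, "scale": "stars"},
    {"price": 18.0, "currency": "USD", "date": "2024-03-06",
     "date_fmt": "%Y-%m-%d", "rating": 82, "scale": "percent"},
]

standardized = [
    {
        "price_usd": to_usd(r["price"], r["currency"]),
        "date": to_iso_date(r["date"], r["date_fmt"]),
        "score": to_score(r["rating"], r["scale"]),
    }
    for r in raw
]
print(standardized)
```

The date format is passed per source rather than guessed, because a value like 05/03/2024 is ambiguous without knowing the site's regional convention.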

Types of Transformation in ETL

Depending on the data sources, the structure of the target system, and the business requirements, different types of transformations may be applied. Their goal is always the same: to ensure the data is accurate, consistent, and usable.

These transformation types can be used individually or combined to address the unique challenges of each project:

- Data cleaning. Removes errors, duplicates, and inconsistencies from the dataset. For example, eliminating blank fields, correcting misspelled entries, or removing redundant records.

- Data standardization. Ensures data follows a uniform format. This could involve aligning date formats, standardizing units of measurement, or converting currencies into a common one.

- Data filtering. Removes irrelevant or unnecessary data. For instance, filtering out customers with inactive accounts or excluding sales records from non-target regions.

- Data aggregation. Summarizes or consolidates data for better analysis. For example, daily sales figures can be aggregated into monthly or yearly totals.

- Data enrichment. Adds additional information to make the data more valuable. For example, appending geolocation data to IP addresses or adding weather data to sales records.

- Data deduplication. Identifies and removes duplicate records. This is crucial when data is pulled from sources that might overlap, such as customer lists from different departments.

- Data validation. Checks the accuracy and quality of the data to ensure it meets predefined standards. For example, validating email formats or ensuring all required fields are filled.

- Data conversion. Converts data to match the schema and format of the target system. This might involve converting JSON data into a relational format or reshaping unstructured data into structured tables.

- Data masking. Protects sensitive information by anonymizing or obscuring it. For example, masking credit card numbers or personally identifiable information (PII) to comply with privacy regulations.

- Data sorting and reordering. Rearranges data to improve usability or meet system requirements. For example, sorting customer names alphabetically or reordering fields to match a predefined schema.
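Several of these transformation types are often chained in a single pass. The sketch below combines cleaning, validation, deduplication, and masking on hypothetical customer records. The field names and the deliberately simple email pattern are assumptions for the example, and the card numbers are dummy values.

```python
import re

# Simplified pattern for illustration; real email validation is more involved.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

def clean(record):
    """Cleaning: trim whitespace, lowercase emails, strip spaces from card numbers."""
    return {
        "name": record["name"].strip(),
        "email": record["email"].strip().lower(),
        "card": record["card"].replace(" ", ""),
    }

def is_valid(record):
    """Validation: require a non-empty name and a plausible email."""
    return bool(record["name"]) and EMAIL_RE.match(record["email"]) is not None

def mask_card(card):
    """Masking: keep only the last four digits visible."""
    return "*" * (len(card) - 4) + card[-4:]

def transform(records):
    seen = set()
    result = []
    for record in map(clean, records):
        # Deduplication: the cleaned email is the identity key.
        if not is_valid(record) or record["email"] in seen:
            continue
        seen.add(record["email"])
        result.append({**record, "card": mask_card(record["card"])})
    return result

raw = [
    {"name": " Ada Lovelace ", "email": "Ada@Example.com", "card": "4111 1111 1111 1111"},
    {"name": "Ada Lovelace", "email": "ada@example.com ", "card": "4111111111111111"},
    {"name": "", "email": "no-name@example.com", "card": "5500000000000004"},
    {"name": "Bob", "email": "not-an-email", "card": "340000000000009"},
]

customers = transform(raw)
print(customers)
```

Cleaning runs first on purpose: the two Ada records only become recognizable as duplicates after whitespace and letter case are normalized.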

Challenges of Data Transformation in ETL Process

Transforming data with inconsistent formats is a significant technical hurdle. Integrating data from relational databases, NoSQL systems, JSON files, and unstructured text into a unified format requires advanced technical skills and careful schema design. The stakes are high for businesses where delayed workflows can mean missed opportunities. For example, if a retailer’s transformation pipeline is slow, it might fail to update inventory insights in time for peak shopping periods.

Preserving data quality and integrity adds another layer of complexity. Errors in transformation logic—introducing duplicates, losing data fields, or performing incorrect calculations—can compromise reporting accuracy and lead to flawed strategies. Poor-quality data undermines trust in the pipeline and can result in wasted resources.

On top of that, embedding complex business rules can be a real headache. Whether it’s converting currencies, applying region-specific tax rules, or mapping data for compliance, these steps require both technical know-how and industry expertise.

And let’s not forget that data sources change all the time. Schema updates or new APIs can easily break your pipelines, leaving you scrambling to fix issues and delaying access to the insights you need.

Loading Process in ETL

Data loading is the final step in the ETL process, where transformed data is moved into its destination system. It’s where all the preparation work pays off, as the data becomes centralized and structured in a way that aligns with the organization’s analytical or operational goals.

For example, an e-commerce company might load cleaned and standardized sales data into a data warehouse. This allows business analysts to generate reports and optimize marketing strategies. Similarly, a logistics company might load real-time delivery data into a cloud-based system for route optimization.

Destination Systems and Their Requirements

The choice of destination system in your ETL pipeline is critical because it determines how accessible, scalable, and useful the data will be for the organization.

Different target systems have unique requirements for structure, format, and accessibility. For instance, a data warehouse (Snowflake or Amazon Redshift) stores structured data optimized for querying and reporting. To load data into these systems, it must adhere to predefined schemas.

A data lake (AWS S3 or Azure Data Lake) can handle raw, unstructured data, but it still requires metadata tagging and organization to make the data discoverable and usable.

If the target is an operational database, the focus is often on maintaining referential integrity and ensuring compatibility with live, transactional systems. For cloud-based destinations, API limits, network bandwidth, and security protocols (e.g., encryption during transfer) must be considered to avoid performance issues or data breaches.

The destination system must also align with the organization’s business objectives. For instance, if the goal is to enable advanced analytics and machine learning, a highly scalable and query-efficient data warehouse may be ideal. For long-term storage of raw, unprocessed data, a data lake could be the better choice.

Different Types of Loading in ETL

The loading process varies depending on the use case, data volume, and the nature of the target system. Businesses typically choose a loading type based on their operational needs and analytical objectives. Let’s explore the main types of data loading in ETL.

| Type | Definition | When to use | Pros | Challenges |
|---|---|---|---|---|
| Full load | Transfers all data from the source system to the destination, overwriting any existing records. | Initial setup of a data warehouse or complete system refreshes. | Ensures that the destination system has an exact replica of the source data. | Resource-intensive for large datasets and can disrupt operations if performed during peak usage. |
| Incremental load | Loads only the data that has changed or been added since the last load. | Regular updates where frequent changes occur in the source data. | Faster, minimizes resource usage and reduces system strain. | Requires robust mechanisms to track and capture changes (timestamps or CDC). |
| Real-time load | Continuously loads data into the destination as soon as it becomes available in the source. | Time-sensitive scenarios (IoT monitoring, financial transactions, or live analytics). | Provides up-to-the-minute data. | Demands high system performance, reliable network connectivity, and strong infrastructure. |
| Batch load | Transfers data in scheduled batches. | Periodic updates. | Predictable system load, suitable for non-urgent data updates. | May delay availability of the latest data, which could impact real-time decision-making. |
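The difference between a full load and an incremental load can be sketched with SQLite standing in for the destination. The `products` table and both helper functions are hypothetical. `INSERT OR REPLACE` is SQLite-specific; warehouses typically offer their own merge or upsert statement for the same purpose.

```python
import sqlite3

def full_load(conn, rows):
    """Full load: wipe the table and reload everything."""
    with conn:  # one transaction: commit on success, roll back on error
        conn.execute("DELETE FROM products")
        conn.executemany("INSERT INTO products (sku, price) VALUES (?, ?)", rows)

def incremental_load(conn, changed_rows):
    """Incremental load: insert new rows, replace rows whose key already exists."""
    with conn:
        conn.executemany(
            "INSERT OR REPLACE INTO products (sku, price) VALUES (?, ?)",
            changed_rows,
        )

conn = sqlite3.connect(":memory:")  # stand-in for the destination system
conn.execute("CREATE TABLE products (sku TEXT PRIMARY KEY, price REAL)")

full_load(conn, [("A1", 10.0), ("B2", 20.0)])          # initial setup
incremental_load(conn, [("B2", 18.5), ("C3", 7.25)])   # one update, one new row

loaded = conn.execute("SELECT sku, price FROM products ORDER BY sku").fetchall()
print(loaded)
```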

Challenges of Loading in Extract, Transform, Load Data

The issues you may face while loading data into your destination system can impact the accuracy, performance, and reliability of the entire ETL process. Let’s look at some common challenges associated with this step:

- Performance bottlenecks. Large volumes of data or complex transformations can slow down the loading process, especially when dealing with real-time or batch loads during peak usage.

- Resource constraints. Loading data into the target system requires significant computational and storage resources. For high-frequency or large-scale updates, this can strain system capacity and lead to disruptions.

- System downtime. Some loading processes, especially full loads, may require taking the target system offline temporarily. This can disrupt operations and impact business continuity.

- Error handling and recovery. Data loading is susceptible to missing records, corrupted files, or interrupted transfers. Recovering from these errors without duplicating data or losing information can be complex and time-consuming.

- Schema compatibility. The target system’s schema must align with the transformed data. Any mismatch in structure or format can lead to failures during the loading process.

- Concurrency management. When multiple processes try to load data simultaneously, it can create conflicts or overwrite issues.
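For the error handling and recovery challenge, one common safeguard is to load each batch inside a single transaction, so a failure part-way through leaves no partial data behind and the batch can be retried safely. The sketch below uses SQLite and a hypothetical `orders` table to show the idea.

```python
import sqlite3

def load_batch(conn, rows):
    """Load all rows or none. Returns True on success, False if rolled back."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes
            conn.executemany(
                "INSERT INTO orders (order_id, amount) VALUES (?, ?)", rows
            )
        return True
    except sqlite3.IntegrityError:
        # In a real pipeline you would log the failure and queue the batch for review.
        return False

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER PRIMARY KEY, amount REAL NOT NULL)")

first_ok = load_batch(conn, [(1, 10.0), (2, 25.0)])
# order_id 2 already exists, so the whole second batch is rejected,
# including the otherwise valid row with order_id 3.
second_ok = load_batch(conn, [(3, 5.0), (2, 99.0)])

count = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
print(first_ok, second_ok, count)
```

All-or-nothing batches trade some throughput for simpler recovery: after a failure, the destination is in a known state instead of holding half a batch.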

Examples of ETL: How Businesses Use Data Pipelines

ETL stands behind many of the data-driven decisions businesses make every day. This section explores how businesses across various sectors use ETL pipelines to solve real-world challenges.

#1 Example of ETL Process: Parsing Financial Data from PDF

Extracting specific information from PDFs requires an advanced ETL process combining AI-driven tools, scalable infrastructure, and efficient workflows. The process typically involves downloading the files, converting them to text using OCR technologies, and applying AI models to locate and extract relevant data points (for example, turnover values or dates).

At Intsurfing, we handled a similar project, processing 18,000 PDF files of financial reports. Using Python-based workflows, we extracted 1.2 million data points while keeping costs as low as 0.6–0.7 cents per 1,000 files. Our AWS-based infrastructure allowed us to complete text conversion and data parsing in just three days.

#2 ETL Process Example: E-commerce Personalization Engine

Personalized product recommendations drive engagement, conversion rates, and customer satisfaction. To power these recommendations, a robust ETL pipeline is essential for consolidating diverse datasets and preparing them for machine learning models that generate real-time suggestions.

Here is what the flow looks like in this example:

- Extraction. Raw data is collected from web server logs for clickstream data, transactional databases for purchases and cart details, and APIs for product catalogs. These datasets, in SQL tables, JSON, and unstructured logs, are pulled into the pipeline for further processing.

- Transformation. The raw data is cleaned, standardized, and prepared for analysis. Duplicates are removed, missing fields are filled, and timestamps, currencies, and product IDs are unified. Key metrics (purchase frequency and engagement scores) are calculated. Customer profiles are enriched by combining behavioral data with demographics, while product data is enhanced with tags and ratings.

- Loading. Transformed data is loaded into a data warehouse for historical analysis and model training. Real-time interaction data is pushed to Kafka for immediate use in recommendation algorithms.

This ETL-driven personalization engine enables e-commerce platforms to generate contextually relevant recommendations. For instance, when a customer browses a category, the engine suggests products based on co-purchased items or frequently viewed alternatives.

Wrapping It Up

By exploring extraction, transformation, and loading, you’ve taken the first step toward understanding how data pipelines work.

But there’s more to delve into. Learn about designing scalable pipelines that handle increasing data volumes or experiment with real-time data processing for instant insights.

Do you feel like you need assistance? Consult Intsurfing experts to streamline your ETL processes and maximize the value of your data. Keep learning, and start applying ETL to solve real business challenges.