Data Processing and Search Optimization with Scala

Our client was struggling with slow, inefficient pipelines that couldn't keep up with their massive data intake. Their search engine was too slow and too rigid for their growing demands. Despite using Hive, HDFS, and custom AWS Glue jobs, performance wasn't improving.

Data skew was a major issue: some Spark tasks took far longer than others, which slowed down the whole pipeline. On the search side, queries weren't optimized. Even though the data was structured, inefficient indexing and partitioning made lookups slower than expected.

They needed a fully optimized Scala-based pipeline with partitioning, indexing, and caching strategies to cut down processing times and accelerate search performance.

Key challenges in the project

Massive Skewed Data

The client's pipelines had to ingest, transform, and analyze terabytes of data daily, but skewed distributions made run times unpredictable. Some tasks finished in seconds, while others dragged on. Because Spark jobs weren't balanced, the slowest tasks became bottlenecks that held up the entire workflow.

Search Speed

The existing search engine wasn’t built for instant results. Searching through millions of records took too long. Queries weren’t optimized, indexing was minimal, and large datasets made response times worse. Intsurfing's goal was to rebuild the search logic using smart partitioning, indexing, and database optimization.

Complex Tech Stack

The project had to combine Scala, Spark, Python, Java, Hive, Airflow, and AWS. Different components processed data at different speeds. Managing dependencies across multiple frameworks and storage solutions added another layer of complexity. So, our task was to bring everything into a well-orchestrated system to handle real-time and batch workloads.

Steps for Implementing Data Pipeline & Search Engine Optimization

Data Processing Pipeline

The client's pipelines struggled with slow execution, uneven task distribution, and high shuffle costs. To solve this, we restructured the data processing workflows with Spark optimizations in Scala. We applied salting to spread hot keys across partitions and balance data distribution. Co-partitioning and broadcast joins minimized unnecessary data movement, while caching and tuned resource allocation cut repeated computation. Together, these changes shortened job execution times and made the system more responsive.
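To make the salting idea concrete, here is a minimal sketch that simulates it in plain Scala collections rather than in Spark, so it runs without a cluster. The names `SaltingSketch`, `partitionOf`, `largestPartition`, `salt`, and `saltedCounts` are hypothetical and chosen for illustration; they are not the client's code. The sketch assumes hash partitioning by key and uses the record index as a deterministic salt, where a real job would more likely use a random one.

```scala
object SaltingSketch {
  // Hypothetical partitioner: mimics hash partitioning of keys across tasks.
  def partitionOf(key: String, numPartitions: Int): Int =
    Math.floorMod(key.hashCode, numPartitions)

  // Number of records that land in the busiest partition.
  def largestPartition(keys: Seq[String], numPartitions: Int): Int =
    keys.groupBy(k => partitionOf(k, numPartitions)).values.map(_.size).max

  // Append a salt in [0, saltBuckets) so one hot key becomes several distinct keys.
  // Assumption: the record index serves as a deterministic salt for this demo.
  def salt(keys: Seq[String], saltBuckets: Int): Seq[String] =
    keys.zipWithIndex.map { case (k, i) => s"$k#${i % saltBuckets}" }

  // Two-stage count: aggregate per salted key, then strip the salt and merge.
  def saltedCounts(keys: Seq[String], saltBuckets: Int): Map[String, Int] = {
    val partial: Map[String, Int] =
      salt(keys, saltBuckets).groupBy(identity).map { case (k, v) => k -> v.size }
    partial.toSeq
      .map { case (salted, n) => salted.substring(0, salted.lastIndexOf('#')) -> n }
      .groupBy(_._1)
      .map { case (k, v) => k -> v.map(_._2).sum }
  }
}
```

The final counts match an unsalted aggregation, but the first stage no longer sends every record for a hot key to a single partition. The cost is a second, much smaller aggregation pass.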

Search Engine

We improved search speed by implementing multi-level indexing and optimized partitioning strategies. The system now pre-indexes data before queries are executed, which reduces scan times. We integrated Manticore Search for real-time indexing and full-text search capabilities. We also fine-tuned stored procedures, enabling instant query execution, even with millions of records in the system.
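As a rough illustration of why pre-indexing plus partitioning reduces scan times, the sketch below builds a two-level in-memory index in plain Scala. It is not Manticore Search and not the production design: the `Doc` shape, the use of `region` as a partition key, and the names `IndexSketch`, `build`, and `search` are all assumptions made for the example.

```scala
object IndexSketch {
  // Hypothetical document shape; `region` stands in for a partition key.
  final case class Doc(id: Int, region: String, text: String)

  // Two-level index: partition key -> token -> ids of matching documents.
  type Index = Map[String, Map[String, Set[Int]]]

  // Lower-case the text and split it on non-word characters.
  private def tokens(text: String): Set[String] =
    text.toLowerCase.split("\\W+").filter(_.nonEmpty).toSet

  // Built once, ahead of query time.
  def build(docs: Seq[Doc]): Index =
    docs.groupBy(_.region).map { case (region, ds) =>
      val postings = ds
        .flatMap(d => tokens(d.text).toSeq.map(t => t -> d.id))
        .groupBy(_._1)
        .map { case (t, pairs) => t -> pairs.map(_._2).toSet }
      region -> postings
    }

  // A query touches one partition and intersects the posting sets of its terms.
  def search(index: Index, region: String, query: String): Set[Int] = {
    val partition = index.getOrElse(region, Map.empty[String, Set[Int]])
    val hits = tokens(query).toSeq.map(t => partition.getOrElse(t, Set.empty[Int]))
    if (hits.isEmpty) Set.empty[Int] else hits.reduce(_ intersect _)
  }
}
```

A lookup reads only the postings for the requested partition and terms instead of scanning every record, which is the effect the indexing and partitioning work was aiming for.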

Component Orchestration

We used Apache Airflow to orchestrate all pipeline components. Each pipeline stage (data ingestion, transformation, indexing, and storage) was managed through directed acyclic graphs (DAGs) to make workflows modular and scalable. Airflow's built-in monitoring allowed us to track execution times, detect failures, and trigger alerts for quick resolution. This way, our team turned a once-unpredictable system into a fully controlled, high-performance data pipeline.

A Closer Look at The End-To-End Data Pipeline

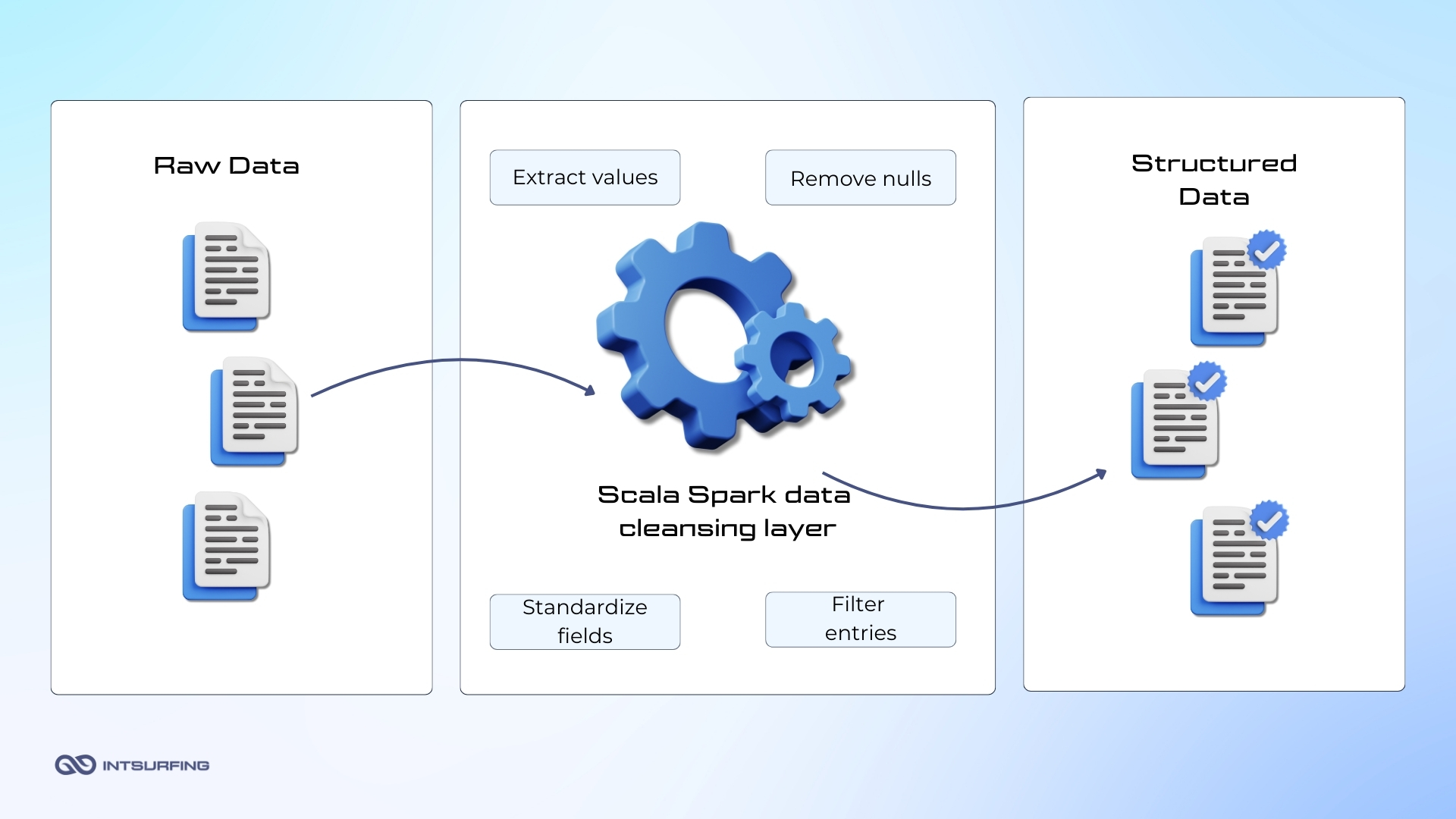

You can’t build a solid data pipeline on bad data. So, after raw data ingestion, we had to clean it up. We built a Scala Spark-powered data cleansing layer to handle it. The pipeline:

- Extracted key values from raw datasets.

- Removed nulls, corrected formats, and standardized fields.

- Filtered out unnecessary entries to reduce processing time.

By the end of this stage, the pipeline had structured, high-quality data, ready for transformation.
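The cleansing steps above can be sketched in plain Scala as follows. The record fields (`id`, `email`, `country`), the validity rules, and the names `CleansingSketch`, `clean`, and `cleanAll` are hypothetical. The production layer ran on Spark, so treat this as an outline of the logic rather than the actual implementation.

```scala
object CleansingSketch {
  // Hypothetical raw record: every field arrives as an optional, untrimmed string.
  final case class RawRecord(id: Option[String], email: Option[String], country: Option[String])

  // Hypothetical cleaned record with required, standardized fields.
  final case class CleanRecord(id: String, email: String, country: String)

  // Trim a field and treat empty strings or the literal "null" as missing.
  private def nonBlank(field: Option[String]): Option[String] =
    field.map(_.trim).filter(s => s.nonEmpty && !s.equalsIgnoreCase("null"))

  // Keep a record only when all required fields survive; standardize casing on the way.
  def clean(raw: RawRecord): Option[CleanRecord] =
    for {
      id      <- nonBlank(raw.id)
      email   <- nonBlank(raw.email).map(_.toLowerCase).filter(_.contains("@"))
      country <- nonBlank(raw.country).map(_.toUpperCase)
    } yield CleanRecord(id, email, country)

  // Drop unusable rows, then drop repeated ids, keeping the first occurrence.
  def cleanAll(rows: Seq[RawRecord]): Seq[CleanRecord] = {
    val seen = scala.collection.mutable.Set.empty[String]
    rows.flatMap(r => clean(r)).filter(r => seen.add(r.id))
  }
}
```

Modelling missing values as `Option` keeps the null handling explicit: a row either yields a fully populated `CleanRecord` or is filtered out before later stages see it.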

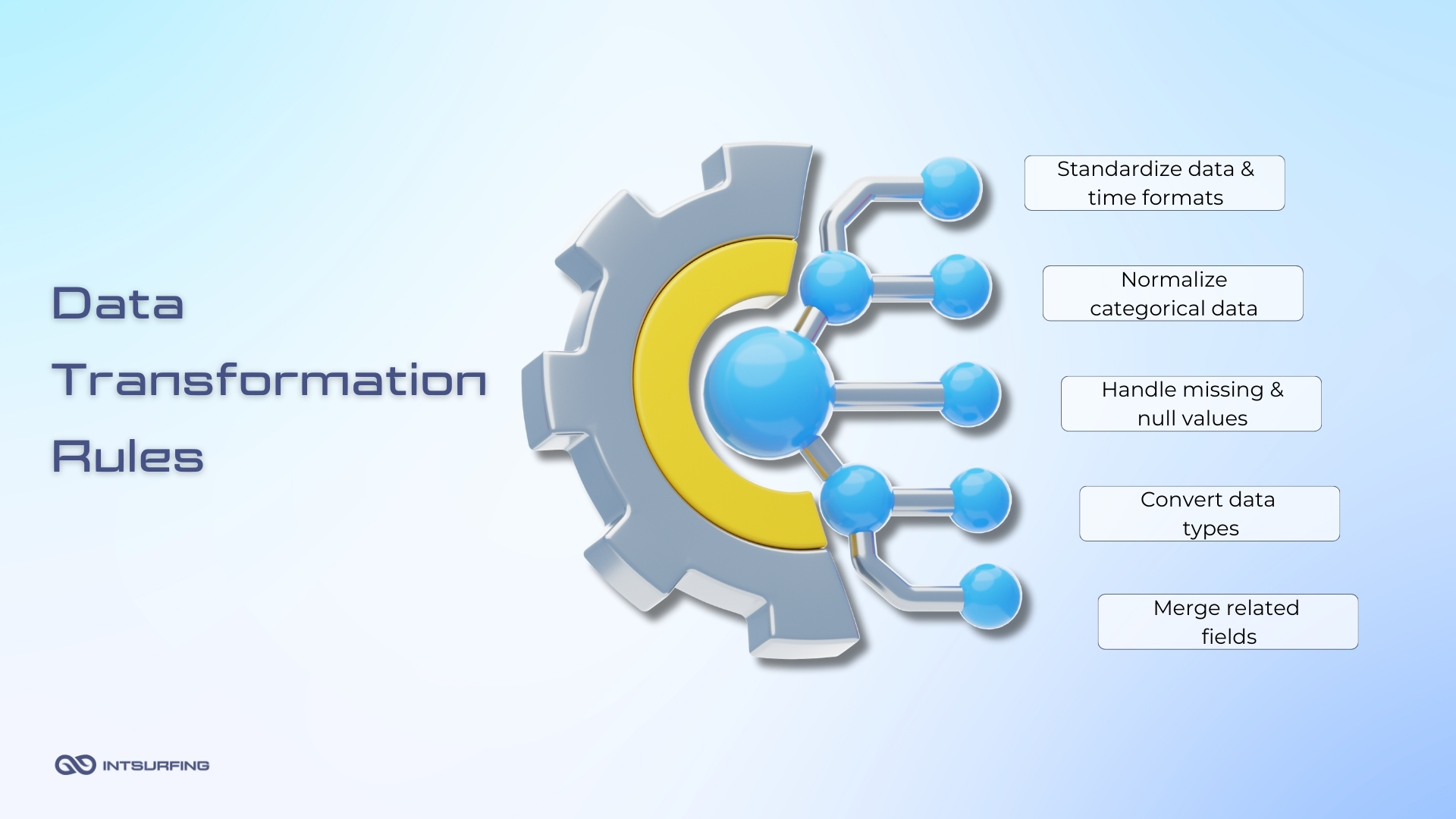

We mapped and transformed raw records into a unified schema to ensure compatibility across the system. That meant:

- Applying transformation rules to clean up and standardize values.

- Converting fields into a consistent format so different datasets could work together.

- Enforcing a structured data model to simplify querying and storage.

By the end of this step, the data was clean, structured, and optimized.
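One way to picture the mapping into a unified schema is a pair of small conversion functions, one per source layout. The two source shapes (`CrmRow`, `BillingRow`), their date formats, and the target `Customer` type are invented for this sketch and do not come from the project.

```scala
import java.time.LocalDate
import java.time.format.DateTimeFormatter

object SchemaMappingSketch {
  // Two hypothetical source layouts that describe the same kind of entity.
  final case class CrmRow(customerId: String, fullName: String, signupDate: String)      // dd/MM/yyyy
  final case class BillingRow(acctNo: Int, first: String, last: String, created: String) // yyyy-MM-dd

  // Hypothetical unified schema shared by downstream stages.
  final case class Customer(id: String, name: String, createdOn: LocalDate, source: String)

  private val crmDate = DateTimeFormatter.ofPattern("dd/MM/yyyy")

  // Trim identifiers and names, and parse the source-specific date format.
  def fromCrm(r: CrmRow): Customer =
    Customer(r.customerId.trim, r.fullName.trim, LocalDate.parse(r.signupDate, crmDate), "crm")

  // Billing dates are already ISO formatted, so the default parser applies.
  def fromBilling(r: BillingRow): Customer =
    Customer(r.acctNo.toString, s"${r.first.trim} ${r.last.trim}", LocalDate.parse(r.created), "billing")
}
```

Once every source is converted to the same case class, later stages can join, union, and store records without caring where they originated.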

After transformation, different datasets were combined into a final dataset using:

- Group-by operations to cluster data logically.

- Joining multiple datasets based on predefined keys.

- Union operations to merge structured data sources.

Here, the pipeline had a unified dataset optimized for querying and ready for efficient storage.
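The three operations can be shown together in a few lines of plain Scala. The `Order` and `Customer` types, the two order sources, and the `combine` function are assumptions for illustration; the real pipeline applied the same ideas to Spark datasets.

```scala
object CombineSketch {
  // Hypothetical datasets, already mapped to shared key types.
  final case class Order(customerId: String, amount: BigDecimal)
  final case class Customer(id: String, name: String)
  final case class CustomerTotal(id: String, name: String, total: BigDecimal)

  def combine(customers: Seq[Customer], online: Seq[Order], inStore: Seq[Order]): Seq[CustomerTotal] = {
    // Union: both order sources share one structure, so they can be concatenated.
    val allOrders = online ++ inStore

    // Group by: total order amount per customer id.
    val totals: Map[String, BigDecimal] =
      allOrders.groupBy(_.customerId).map { case (id, os) => id -> os.map(_.amount).sum }

    // Join on the predefined key (inner join: customers without orders are dropped).
    for {
      c     <- customers
      total <- totals.get(c.id)
    } yield CustomerTotal(c.id, c.name, total)
  }
}
```

Aggregating before the join keeps the joined side small, which on a distributed engine also tends to reduce the amount of data that has to be shuffled.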

The client needed access to massive datasets without query lag. To make that happen, we optimized data storage and deployment by:

- Partitioning and indexing the database to accelerate lookups.

- Implementing stored procedures in MariaDB, MySQL, and Manticore.

- Integrating the search engine to deliver quick results.

At this stage, the system was ready to handle high query loads, supporting real-time analytics and rapid data retrieval.

Technologies we used in the project

- Scala

- C#

- Java

- Apache Spark

- Apache Hive

- Airflow

- MariaDB

- Manticore

- MySQL

- AWS

The results: Reduced Processing Time & Increased Search Speed

We helped our client eliminate bottlenecks, speed up search, and scale their infrastructure. The Scala Spark engine now handles distributed processing, balancing skewed workloads with salting, co-partitioning, and caching strategies. Search queries return in under a second thanks to optimized partitioning, indexing, and stored procedures in Manticore, MariaDB, and MySQL. Airflow orchestrates the workflows, with automated monitoring and real-time error detection. The system is now scalable, fault-tolerant, and optimized for complex queries on large datasets.

- 50%+ faster processing via Spark optimizations

- Sub-second search responses with indexed fields and partitioned queries

- Zero-downtime orchestration through Apache Airflow automation

- Optimized storage retrieval using structured procedures in MariaDB & MySQL

- Scalability without performance loss, supporting millions of records
